Demand for edge data centers has begun to grow, albeit from a low base. Uptime Institute’s research shows that owners, operators, and suppliers alike expect further growth across industry verticals, especially in North America.

Providers sometimes deploy edge data centers based on speculative future demand, but it is ultimately workload needs that will define and drive the edge buildout. At the highest level, three primary technical factors determine whether data processing and/or storage should be located at the edge or in a more central location:

- Latency: the delay before a workload responds or a service is delivered.

- Data volume: the amount of data created or needed locally, which affects the cost of hauling data across the network.

- Criticality: the business's sensitivity to service failures or delays, and/or the security or compliance requirements of a workload.

Mapping the workloads

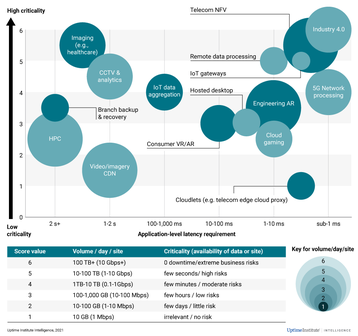

In Figure 1, Uptime has mapped selected edge workloads according to their typical requirements for criticality (y-axis) and latency (x-axis). The size of each bubble reflects the relative volume of data the workload generates at an individual edge data center site.

The latencies shown in Figure 1 assume “loaded networks”; that is, they account for expected delays, such as those caused by the multiple communication round trips needed to complete a workload operation and by network congestion resulting from large data sets (high data volumes).
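To illustrate why round trips and data volume both matter on a loaded network, the following minimal sketch estimates the latency of one operation as the number of round trips multiplied by the round-trip time, plus the time needed to push the payload through the link. This is a simplified model assumed for illustration, not Uptime's methodology: the function name `loaded_latency_ms` and all input figures are hypothetical, congestion is represented only as a lower effective bandwidth, and server processing time is ignored.

```python
def loaded_latency_ms(round_trips: int, rtt_ms: float,
                      payload_mb: float, effective_mbps: float) -> float:
    """Hypothetical, simplified latency estimate for one workload operation."""
    # Every round trip pays the full network round-trip time,
    # so the penalty of a distant site is multiplied by the round-trip count.
    waiting_ms = round_trips * rtt_ms
    # Serialization time: megabytes -> megabits (x8), seconds -> ms (x1000),
    # divided by the effective link rate in Mbit/s. Congestion is modeled
    # only as a reduced effective rate (an assumption of this sketch).
    transfer_ms = payload_mb * 8 * 1000 / effective_mbps
    return waiting_ms + transfer_ms


# Hypothetical comparison: the same operation served from a nearby edge
# site (1 ms RTT) and from a distant core site (40 ms RTT).
edge = loaded_latency_ms(round_trips=4, rtt_ms=1.0, payload_mb=1.0, effective_mbps=1000.0)
core = loaded_latency_ms(round_trips=4, rtt_ms=40.0, payload_mb=1.0, effective_mbps=1000.0)
print(f"edge: {edge:.1f} ms, core: {core:.1f} ms")  # edge: 12.0 ms, core: 168.0 ms
```

With these illustrative inputs, four round trips turn a 39 ms difference in round-trip time into a 156 ms difference per operation, while the 8 ms transfer time is the same at both sites; halving the effective bandwidth to mimic congestion would add another 8 ms regardless of location.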

Internet of things (IoT) stands out as the workload in our sample best suited to edge data centers. It generates relatively little data but typically has high criticality requirements, and it often requires millisecond-range latency at the IoT gateway, where storage and compute are handled close to sensors and devices.

A few edge workloads can be considered highly demanding because they require sub-millisecond latency, have high criticality, and typically generate relatively large data volumes. They include industry 4.0 (the tight integration of information and communication technology into industrial production), telecom network function virtualization (NFV), and 5G network processing. Two other notable workloads that can have low-latency requirements are cloud gaming and cloudlets (instances of cloud computing at the edge). Like cloud gaming, augmented reality (AR) and virtual reality (VR) applications depend on low latency to deliver an attractive user experience, which makes them strong candidates for edge data centers.

Some edge use cases, such as video streaming, use edge data centers mainly to overcome network cost and/or bandwidth constraints; device-level data buffering masks any latency issues when these workloads are hosted in distant facilities. High-definition closed-circuit television (CCTV) security cameras generate large data volumes and commonly rely on analytics in a nearby edge data center, both to make local decisions and to reduce the volume of data sent from the edge to a core data center. Imaging, including output from analysis equipment used on-site at medical facilities, is another edge use case because it is highly critical and must handle large data volumes with a reliable latency of 1-2 seconds.

Workloads in other industry verticals will use edge data centers to improve existing features or add new ones, reduce network costs, and/or ease bandwidth constraints.

In the short term, edge data center market growth is likely to result from a combination of factors, including the demand profile of various edge applications and the extent of the speculative buildout of supporting networks and shared edge data centers. In the long run, however, continued demand for edge data centers will likely be defined and driven by workload needs.