Liquid cooling is a wave that never seems to quite break. It’s always going to be a big thing, sometime in the future. There’s not a huge take-up of the technology, but there’s always plenty of news to report.

Fundamentally, the technology is great because it removes heat passively, with very little energy input, and the waste heat emerges in a highly concentrated and therefore usable form: water at more than 45°C can be used to heat buildings or warm greenhouses to grow plants.

Plenty of options

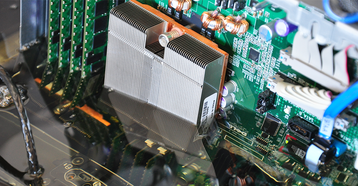

That’s a generalization, of course, because there are numerous kinds of liquid cooling: from low-impact systems that give hot racks extra cooling by running water through the rack doors, to more or less radical redesigns that immerse the electronics in coolant, to futuristic schemes that run a two-phase coolant through capillaries directly on the surface of hot chips.

The inventions keep coming, and there’s a market for them, but it’s mostly in high-performance computing (HPC): a market not to be ignored, but not one of the giant opportunities of enterprise or webscale computing.

Those giant markets obstinately keep cooling with air. They pack in more chips, but the chips get more efficient. So the power density (and therefore the heat to be removed) never gets to the point where it’s economically necessary to turn on the liquid cooling taps.


And in any case, not every data center can deliver enough power per square metre to make liquid cooling necessary.

Still, there are a couple of interesting things happening here. Green Revolution Cooling (GRC) has been bubbling along with a system that immerses racks in tanks of fluid. Now the company has a range of servers that could appeal to a more general market. The range is based on concepts similar to those of the Open Compute Project, and the servers are customizable.

And Lenovo (the part that looks after IBM’s old x86 server range) is supplying liquid cooling for an HPC system at the University of Birmingham. This one is interesting because it uses water, and because the University asked for it specifically, so that it could expand an iDataPlex installation that was pushing the limits of the power density the University could handle.

In other words, while GRC is starting to make kit that looks a lot more like a product people can order and buy, users like the University of Birmingham are starting to pull liquid cooling into use to meet specific power density needs.

UK universities have been getting serious about liquid cooling for some time, and a body of experience is growing there. Two earlier installations, at Leeds and Derby, used the Iceotope Petagen system, which runs coolant through the servers and rejects the heat to a secondary water circuit. The Derby installation was an actual sale rather than a test of a prototype.

Mellanox and supercomputing specialist OCF are the partners in the Birmingham project, and that’s worth noting. Mellanox makes switches, and it is already working with Iceotope on liquid-cooled models that remove heat from the networking side of the data center.

Every new product launch and every new user makes the idea of cooling with water or coolant more credible. If I’m not mixing my metaphors, I’d say we’re seeing solid progress on liquid cooling.

A version of this story appeared on Green Data Center News