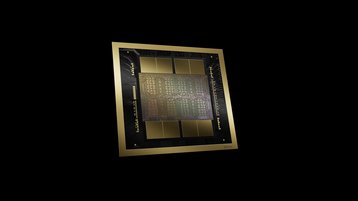

Nvidia has unveiled its next GPU architecture, Blackwell.

The company claimed that the new architecture will let customers build and run real-time generative AI on trillion-parameter large language models at 25x lower cost and energy consumption than its predecessor, Hopper.

Amazon, Google, Meta, Microsoft, Oracle Cloud, and OpenAI are among the companies that confirmed they will deploy Blackwell GPUs later this year.

Blackwell is named in honor of David Harold Blackwell, a mathematician who specialized in game theory and statistics, and the first Black scholar inducted into the National Academy of Sciences.

“For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI,” said Jensen Huang, founder and CEO of Nvidia.

“Generative AI is the defining technology of our time. Blackwell GPUs are the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

Blackwell GPUs are manufactured on a custom-built TSMC 4NP process. Each GPU consists of two reticle-limit dies connected by a 10TBps chip-to-chip link, so that the pair operates as a single, unified GPU.

The GPU has 208 billion transistors, up from 80 billion in Hopper, and is twice the size of a Hopper GPU.

Blackwell includes a second-generation Transformer Engine and new 4-bit floating point (FP4) AI inference capabilities.
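As a rough illustration of how little a 4-bit floating point number can hold, the Python sketch below assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias of 1) used by the Open Compute Project's microscaling (MX) FP4 type. That layout is an assumption here, not a detail from Nvidia's announcement, and the helpers `decode_e2m1` and `quantize_to_e2m1` are hypothetical names, not an Nvidia API. With only 16 codes, such a format represents just 15 distinct values, which is why low-precision formats are usually paired with per-block scaling factors in practice; the sketch leaves scaling out.

```python
# Minimal sketch of a 4-bit float, ASSUMING the E2M1 layout
# (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1).
# Function names are hypothetical, not an Nvidia API.

def decode_e2m1(code: int) -> float:
    """Decode a 4-bit code (0-15) into the value it represents."""
    if not 0 <= code <= 0xF:
        raise ValueError("expected a 4-bit code in the range 0-15")
    sign = -1.0 if code & 0b1000 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 0b1
    if exponent == 0:
        # Subnormal: 0.m x 2^(1 - bias), i.e. 0 or 0.5
        return sign * mantissa * 0.5
    # Normal: 1.m x 2^(exponent - bias)
    return sign * (1.0 + 0.5 * mantissa) * 2.0 ** (exponent - 1)


def quantize_to_e2m1(x: float) -> float:
    """Round x to the nearest representable value; out-of-range inputs
    saturate at +/-6. Ties resolve to the lower value (this is not
    IEEE round-to-nearest-even)."""
    representable = sorted({decode_e2m1(code) for code in range(16)})
    return min(representable, key=lambda v: abs(v - x))


if __name__ == "__main__":
    # Codes 0-7 have the sign bit clear, so these are the non-negative values
    positives = sorted({decode_e2m1(c) for c in range(8)})
    print(positives)                # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
    print(quantize_to_e2m1(2.7))    # 3.0
    print(quantize_to_e2m1(100.0))  # 6.0
```

Under these assumptions, every weight or activation stored in 4 bits must land on one of those few values, so halving the bits relative to FP8 trades precision for memory and throughput.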

It also features a new dedicated engine for reliability, availability, and serviceability, with AI-based preventative maintenance to run diagnostics and forecast reliability issues.

Blackwell has a dedicated decompression engine to accelerate database queries, as well as what Nvidia describes only as 'advanced confidential computing capabilities.'

As with Hopper, Blackwell will be available as a 'Superchip': the GB200, which connects two B200 GPUs to an Nvidia Grace CPU over a 900GBps chip-to-chip link.

Nvidia said the GB200 Superchip provides a performance increase of up to 30x over the Nvidia H100 GPU for LLM inference workloads, while reducing cost and energy consumption by up to 25x. The company did not provide a comparison with its GH200 Superchip.

“Blackwell offers massive performance leaps, and will accelerate our ability to deliver leading-edge models. We’re excited to continue working with Nvidia to enhance AI compute,” Sam Altman, CEO of OpenAI, said.

Elon Musk, CEO of Tesla and xAI, added: “There is currently nothing better than Nvidia hardware for AI.”

According to Nvidia, the B100, B200, and GB200 operate at between 700W and 1,200W, depending on the SKU and the type of cooling used.