Microsoft is deploying the equivalent of five 561-petaflops Eagle supercomputers every single month, Azure CTO Mark Russinovich has claimed.

Eagle was first revealed in the Top500 ranking of the world's most powerful supercomputers last November, coming in third. With a performance of 561.2 petaflops on the High-Performance Linpack (HPL) benchmark, the Microsoft system is also the world's most powerful cloud supercomputer.

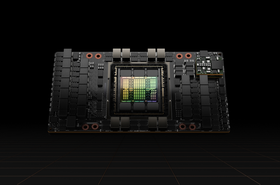

"We secured that place [in the Top500] with 14,400 networked Nvidia H100 GPUs and 561 petaflops of compute, which at the time represented just a fraction of the ultimate scale of that supercomputer," Russinovich said at Microsoft Build.

"Our AI system is now orders of magnitude bigger and changing every day and every hour," he added - although Eagle had the same performance in this month's Top500 ranking, suggesting Russinovich was talking about Azure's compute capacity more broadly.

"Today, just six months later, we're deploying the equivalent of five of those supercomputers every single month."

That would represent the equivalent of 72,000 H100 GPUs every month, spread out across the company's growing data center footprint, and roughly 2.8 exaflops of added compute per month.

"Our high speed and InfiniBand cabling that connects our GPUs would be long enough to wrap around the Earth at least five times," he added. The Earth's circumference is around 40,000km, which implies at least 200,000km of cabling.
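As a back-of-the-envelope check, the figures above can be reproduced with a few lines of Python. This is only a sketch: the constant and function names are illustrative, and it assumes that "five of those supercomputers" means five times Eagle's Top500 configuration and that performance scales linearly with system count, which Russinovich did not state.

```python
# Figures quoted in the article; names are illustrative, not Microsoft's.
EAGLE_PETAFLOPS = 561.2          # Eagle's HPL score in the Top500
EAGLE_GPUS = 14_400              # H100 GPUs cited by Russinovich
EAGLES_PER_MONTH = 5             # "five of those supercomputers every single month"
EARTH_CIRCUMFERENCE_KM = 40_000  # approximate
CABLE_WRAPS = 5                  # "at least five times"


def monthly_deployment():
    """Return (GPU count, exaflops) for one month, assuming linear scaling."""
    gpus = EAGLE_GPUS * EAGLES_PER_MONTH
    exaflops = EAGLE_PETAFLOPS * EAGLES_PER_MONTH / 1000  # 1 exaflop = 1,000 petaflops
    return gpus, exaflops


def min_cable_km():
    """Lower bound on cabling length implied by wrapping the Earth five times."""
    return EARTH_CIRCUMFERENCE_KM * CABLE_WRAPS


gpus, exaflops = monthly_deployment()
print(f"{gpus:,} GPUs and about {exaflops:.1f} exaflops per month")
print(f"at least {min_cable_km():,} km of cabling")
```

The unrounded compute figure is 2.806 exaflops, which the article rounds to 2.8.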

Elsewhere at Build, Microsoft CTO Kevin Scott gave more insight into the company's upcoming supercomputers - but was far less specific about numbers.

"I just wanted to - without mentioning numbers, which is hard to do - give you all an idea of this scaling of these systems," Scott said. "In 2020, we built our first AI supercomputer for OpenAI. It's the supercomputing environment that built GPT-3."

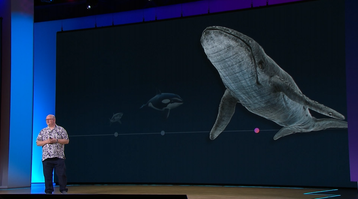

In lieu of numbers, Scott used marine wildlife as a representation of scale. That system, he said, could be seen as a shark, at least in size.

"The next system that we built, scale-wise is about as big as an orca. That is the system that we delivered in 2022 that trained GPT-4," he continued, likely referencing the Eagle system. While his metric is far from exact, orcas are twice as long and three times as heavy as great white sharks.

"The system that we just deployed is about as big, scale-wise, as a whale." Stretching the metric, a blue whale (the whale he pictured) is more than twice as long as an orca and some 25 times heavier.

Scott also said that model development showed there was still a clear link between the amount of compute used to develop new models and the capabilities those models gain. "And it turns out that you can build a whole lot of AI with a whale-sized supercomputer," he said.

"So one of the things that I want people to really, really be thinking clearly about is the next sample is coming, and this whale-sized supercomputer is hard at work right now building the next set of capabilities that we're going to put into your hands."

Earlier this year, it was reported that Microsoft and OpenAI were considering building a $100 billion 'Stargate' supercomputer, but Scott last month called supercomputing speculation "amusingly wrong."
