Tesla and SpaceX CEO Elon Musk said that Grok, the AI chatbot from his xAI startup, requires tens of thousands of GPUs to train.

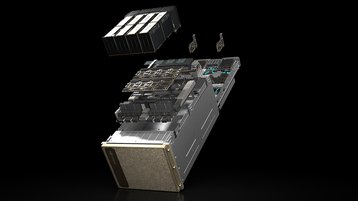

In an interview on X Spaces with Nicolai Tangen, CEO of Norway's sovereign wealth fund, Musk said that training the Grok 2 model takes about 20,000 Nvidia H100 GPUs. The livestream was beset by multiple crashes and glitches.

Musk added that training Grok 3 and later models will require 100,000 Nvidia H100s. The Wall Street Journal reports that xAI is currently looking to raise $3 billion at an $18 billion valuation.

xAI, which is separate from the social media platform X but has integrated Grok into it, relies on Oracle for a large share of its training compute. "We got enough Nvidia GPUs for Elon Musk's company xAI to bring up the first version, the first available version of their large language model called Grok," Oracle's Larry Ellison said last month.

"They got that up and running. But, boy, did they want a lot more. Boy, did they want a lot more GPUs than we gave them."

In the interview, Musk said that chip shortages had so far been the biggest constraint on AI development, but that electricity supply would become the limiting factor over the next couple of years.

In the same call, Musk claimed that artificial general intelligence would be achieved within two years. Grok was integrated into X this month to summarize news stories; it has since pushed erroneous reports about Iran attacking Israel, the Sun disappearing, and New York's mayor ordering police to shoot earthquakes.