New research suggests that AI in data centers could consume as much electricity as a small country such as the Netherlands or Sweden by 2027.

Annual AI-related electricity consumption around the world could increase by 85.4–134.0 TWh before 2027, according to peer-reviewed research by Alex de Vries, founder of Digiconomist, published in the journal Joule. This represents around half a percent of worldwide electricity consumption, and substantial growth in data center electricity use, which is currently estimated at between one and two percent of the world’s electricity usage.


The result echoes an estimate given by Jon Summers and Tor Bjorn Minde of the Swedish research institute RISE at DCD>Connect London last week, that AI could consume as much electricity as Sweden.

1.5 million Nvidia DGX servers

“In recent years, data center electricity consumption has accounted for a relatively stable one percent of global electricity use, excluding cryptocurrency mining,” says de Vries in the paper, The Growing Energy Footprint of Artificial Intelligence. “Between 2010 and 2018, global data center electricity consumption may have increased by only six percent. There is increasing apprehension that the computational resources necessary to develop and maintain AI models and applications could cause a surge in data centers' contribution to global electricity consumption.”

A Ph.D. candidate at Vrije Universiteit Amsterdam, de Vries derived the figures in the paper by looking at the annual production of Nvidia's DGX servers, which are used in 95 percent of big AI applications. Although there are currently bottlenecks in the supply of these systems, de Vries expects these to be resolved soon, unleashing a flood that could effectively add 50 percent to the world’s data center energy use.
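The 50 percent figure can be checked against the shares quoted in this article: AI adding around half a percent of world electricity use, on top of data centers that currently account for one to two percent. The sketch below is only an illustration of that arithmetic; the function name is hypothetical and not taken from the paper.

```python
def relative_increase(ai_share_pct: float, datacenter_share_pct: float) -> float:
    """Percent growth in data center electricity use if AI adds
    ai_share_pct percentage points of world electricity consumption."""
    return 100.0 * ai_share_pct / datacenter_share_pct

# Shares are the article's figures: AI ~0.5% of world electricity,
# data centers currently 1% to 2%.
for dc_share in (1.0, 2.0):
    growth = relative_increase(0.5, dc_share)
    print(f"baseline {dc_share:.0f}% -> +{growth:.0f}%")
```

On these numbers, the 50 percent increase corresponds to the one percent baseline; against a two percent baseline, the same AI load would be a 25 percent increase.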

“AI could be responsible for as much electricity consumption as Bitcoin is today in just a few years’ time,” says de Vries, who founded Digiconomist nine years ago to examine “the unintended consequences of digital trends,” focusing on the energy use of Bitcoin.

de Vries discussed Bitcoin energy use in a DCD Podcast in 2022, and Digiconomist currently estimates that Bitcoin consumes 126TWh of electricity per year, roughly equivalent to the consumption of the United Arab Emirates.

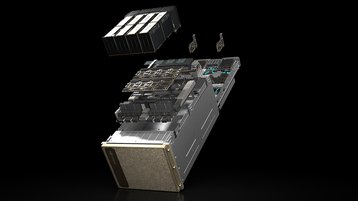

The paper starts from the energy consumption of a single Nvidia DGX A100 server, which can consume from 3kW to 6.5kW (as much as several US households combined), while a DGX H100 server, which houses multiple chips, can consume more than 10kW.

Nvidia is producing around 100,000 of these servers in 2023, but could be rolling out 1.5 million a year by 2027, says de Vries, thanks to an agreement with chipmaker TSMC. One year’s production of these systems would consume around 85 to 134TWh of electricity annually, he says.
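The range can be reproduced with simple arithmetic: units per year, times power per unit, times hours in a year. The sketch below assumes every unit runs at full power all year. The 6.5kW figure is the article's upper value for the DGX A100; the 10.2kW figure is an assumption, chosen as a value consistent with "more than 10kW" that matches the upper bound. The function and variable names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

def annual_twh(servers: int, kw_per_server: float, utilization: float = 1.0) -> float:
    """Annual electricity use in TWh for a fleet of servers."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR * utilization
    return kwh / 1e9  # 1 TWh = 1e9 kWh

servers_per_year = 1_500_000               # projected 2027 annual production
low = annual_twh(servers_per_year, 6.5)    # DGX A100 upper figure from the article
high = annual_twh(servers_per_year, 10.2)  # ASSUMED DGX H100 figure ("more than 10kW")

print(f"{low:.1f} to {high:.1f} TWh")  # 85.4 to 134.0 TWh
```

Passing a `utilization` below 1.0 shows how quickly the estimate falls if the units are not run at full capacity.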

The figure is approximate, since not all these units will be used at full capacity. However, it doesn’t include the installed base that will be operational at that time, or the other energy use and emissions associated with those systems, including cooling, transport, manufacture, and other Scope 3 emissions.

“While the supply chain of AI servers is facing some bottlenecks in the immediate future that will hold back AI-related electricity consumption, it may not take long before these bottlenecks are resolved,” says de Vries. “By 2027 worldwide AI-related electricity consumption could increase by 85.4 to 134.0TWh of annual electricity consumption from newly manufactured servers. This figure is comparable to the annual electricity consumption of countries such as the Netherlands, Argentina, and Sweden.”

While most attention has focused on the energy used in training AI systems, de Vries warns that the application or “inference” phase of their use could be equally energy-hungry. Speaking at DCD>Connect last week, Jon Summers and Tor Bjorn Minde of RISE made a similar observation, in a talk that examined how air cooling cannot deliver the heat removal needed by such dense power consumption.

Summers and Minde observed that, in an application like Google Search, an AI might be used so often that the energy used in the low-power inference phase adds up to as much as that used in training.

de Vries observes that applying AI to Google’s search could, in a worst-case scenario, boost its energy use to around that of Ireland, or 29TWh per year, but notes that the sheer cost of doing this could discourage Google from implementing it.
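To give a sense of scale, 29TWh per year can be converted into an average continuous power draw, and into a rough count of servers. This is an illustration only, not the paper's method: it assumes a constant load all year and uses the article's 6.5kW upper figure for a DGX A100 server. All names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

def average_power_gw(twh_per_year: float) -> float:
    """Average continuous power in GW for a given annual energy use in TWh."""
    return twh_per_year * 1000.0 / HOURS_PER_YEAR  # 1 TWh = 1,000 GWh

def equivalent_servers(twh_per_year: float, kw_per_server: float) -> float:
    """Number of servers that would use this much energy running flat out all year."""
    kw_total = average_power_gw(twh_per_year) * 1e6  # 1 GW = 1e6 kW
    return kw_total / kw_per_server

avg_gw = average_power_gw(29.0)
servers = equivalent_servers(29.0, 6.5)  # 6.5kW: article's DGX A100 upper figure
print(f"{avg_gw:.2f} GW, about {servers:,.0f} servers")
```

Under these assumptions, the worst-case scenario works out to a little over 3GW of continuous demand, or roughly half a million servers running at full power.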

He calls on the industry to be “mindful about the use of AI,” issuing the following warning: “Emerging technologies such as AI and previously blockchain are accompanied by a lot of hype and fear of missing out. This often leads to the creation of applications that yield little to no benefit to the end-users.

“However, with AI being an energy-intensive technology, this can also result in a significant amount of wasted resources. A big part of this waste can be mitigated by taking a step back and attempting to build solutions that provide the best fit with the needs of the end-users (and avoid forcing the use of a specific technology).”

He concludes: “AI will not be a miracle cure for everything as it ultimately has various limitations. These limitations include factors such as hallucinations, discriminatory effects, and privacy concerns. Environmental sustainability now represents another addition to this list of concerns.”

The paper is available free for 50 days, and on request from the author.