Talk to anyone about their data center right now, and the subject will inevitably shift to artificial intelligence (AI). Everyone has a take. Everyone has a product. But “AI” is a broad umbrella term, and it can sometimes feel as though brands are ‘AI-washing’ their offerings without a coherent understanding of what AI actually is, or whether the use cases they deploy are effective.

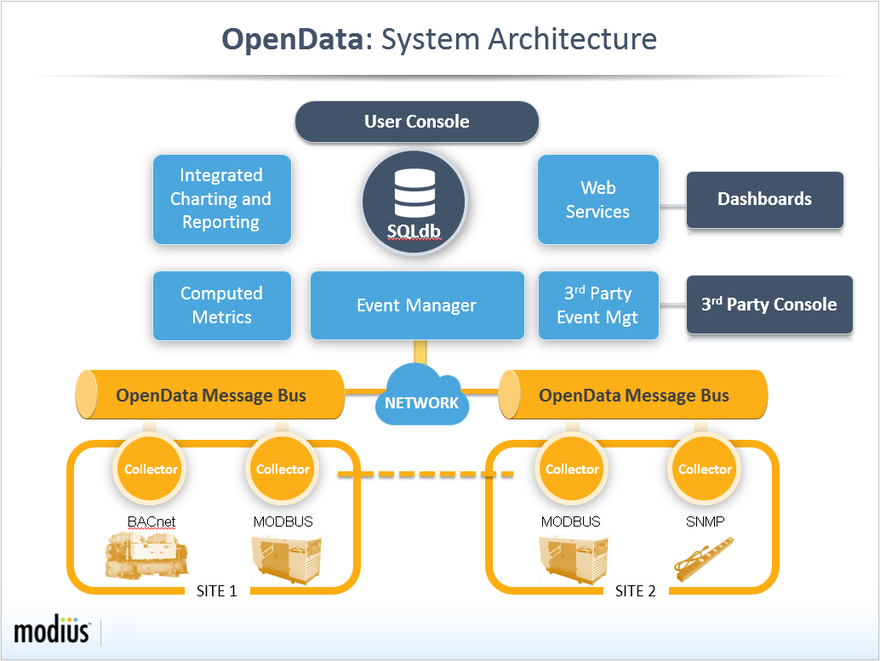

Modius, on the other hand, is a different kind of company. Its OpenData® platform is a full-featured DCIM (Data Center Infrastructure Management) system that monitors every component in the facility and suggests which parts require replacement or optimization. It does this through machine learning (ML), a distinct type of AI capable of self-improving optimization and of predicting future events based on past patterns, all from just 45 to 90 days of initial training data for the model.

DCD spoke to Craig Compiano, President, CEO, and founder of Modius, about the product and its wider place in an AI-powered future. He begins by explaining just what an ML-powered DCIM can do for your data center.

“Analysis of data at scale is very hard for humans – things like detecting anomalies in system performance or equipment performance. To see those correlations in near real-time, the best available technology today before AI and ML was thresholds, indicative of some variation in predefined behavior. However, the equipment being monitored could be performing incorrectly, but within the threshold range, and the legacy ‘threshold paradigm’ would not detect the problem.”

Machine learning can spot in seconds what a human would need hours of poring over and cross-referencing data points to find:

“That anomaly could be a precursor of equipment failure or sub-optimization. That behavior would not be detectable by a human. Machine learning can ‘learn’ what normal behavior looks like, and then as equipment deviates, the AI algorithms can detect that behavior change and notify the operator.”
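The difference between a fixed threshold and a learned picture of “normal” can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how OpenData works: it models a single sensor, learns the mean and standard deviation of a training window, and flags readings more than three standard deviations from that baseline (a simple z-score test standing in for the far richer models a production DCIM would use). The function and class names, the 15 to 27 °C threshold band, and the sample readings are all hypothetical.

```python
from statistics import mean, stdev


def static_threshold_alarm(reading: float, low: float, high: float) -> bool:
    """Legacy 'threshold paradigm': alarm only if the reading leaves a fixed band."""
    return not (low <= reading <= high)


class LearnedBaseline:
    """Hypothetical single-sensor model of 'normal' behavior.

    Learns the mean and sample standard deviation of a training window, then
    treats any reading more than `z_limit` standard deviations away as anomalous.
    """

    def __init__(self, history: list[float], z_limit: float = 3.0) -> None:
        if len(history) < 2:
            raise ValueError("need at least two training readings")
        self.mu = mean(history)
        self.sigma = stdev(history)
        self.z_limit = z_limit

    def z_score(self, reading: float) -> float:
        # A perfectly flat history makes any change infinitely unusual.
        if self.sigma == 0:
            return 0.0 if reading == self.mu else float("inf")
        return (reading - self.mu) / self.sigma

    def is_anomalous(self, reading: float) -> bool:
        return abs(self.z_score(reading)) > self.z_limit


if __name__ == "__main__":
    # Hypothetical supply-air temperatures (°C) from a training window.
    history = [18.0, 18.2, 17.9, 18.1, 18.0, 17.8, 18.2, 18.1]
    baseline = LearnedBaseline(history)

    reading = 21.0  # drifted, but still inside the fixed band
    print("threshold alarm:", static_threshold_alarm(reading, 15.0, 27.0))  # False
    print("learned-baseline alarm:", baseline.is_anomalous(reading))        # True
```

In this toy case a supply-air reading of 21.0 °C sits comfortably inside the fixed band, so the legacy check stays silent, while the learned baseline flags it because it falls far outside the narrow range the sensor normally occupies. That is exactly the “performing incorrectly, but within the threshold range” situation Compiano describes.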

As well as alerting to problems, ML can be used as a tool to optimize the data center:

“It can also be used as an optimization goal, like reducing cooling energy consumption and optimizing against target variables. Humans are less able to adapt to all the data that's coming from the equipment and the machine learning algorithms can indicate, for example, set point changes based on time-of-day usage that otherwise would not be obvious to the operator.”

Predictive maintenance is another area where ML can shine – not in place of a human but working alongside the skills and experience of human operators.

“A trained experienced human has their ways of analyzing the behavior of equipment and that comes with years and years of experience. In today's labor market shortfall, it becomes even harder to find a new worker who has all those years of experience and can use intuition to diagnose equipment performance issues. Instead, operators have to rely either on predefined rules that they can follow, or now, by leveraging the technology of AI to help them make those decisions.”

Retaining control

Don’t be fooled, however. OpenData isn’t going to run your data center for you. Control remains fully vested in the human workforce. Instead, ML-driven advice, suggestions, and warnings complement the professional knowledge of the team, as Compiano explains:

“The models we've developed and are bringing to market do not provide control. They make recommendations because we don't think the market, or the state of the technology, is ready for wide-scale adoption of control functions. Eighty percent of the value of ML is going to come from identifying and recommending how operators make the decisions. The last 20 percent of optimization may come from an automated deployment – but we feel the market will be much more reluctant to turn over general operations, and the use cases are much broader if we don't try to get into controls. We've remained true to our roots as an analytics platform. We want to analyze.”

So then, why now?

“Why now? Because it helps address the staffing issues. If you're going to build procedures, train models, and develop expertise, we want to capture the knowledge of the retiring workforce before they retire. That puts a sense of urgency in my mind to adopt these technologies as quickly as they are affordable for the operator.”

Compiano’s belief that the time for automated control of the data center has not yet come is a vital tenet of OpenData’s offering: “Depending on the type of data center, you could get ‘lights out’ or remote monitoring capability, but some data centers such as third-party colo spaces have SLAs and so require somebody to be there. Either security people who are cross-trained to do rounds and readings, or just your typical operator for safety reasons and fallback. I don't think we are ever going to eliminate the operator in all cases, but you could have a reduction in the skill sets of the people on-premise.”

Upskilling the workforce

But, we wonder, what are all these people going to do when so much of the monitoring becomes automatic?

“People will go upstream in their capabilities or skill sets. You might need fewer people, but the people that you have will tend to be closer to the complexities of the equipment, and then leverage that knowledge into the algorithms to help either set parameters or configuration. The approach we’ve taken is to leverage operators’ subject matter expertise to shape the model, and then let the algorithms do the heavy lifting.”

In other words, it’s not so much about taking jobs away, it’s about evolving them.

“Today, we look to someone who's been in the business for 25 years and cross-train them on how you shape the algorithm by applying their knowledge of the space in the future. Those people who have the expertise will be cross-trained to know more about machine learning and how the models work because you have to, like with any tool – you have to understand how it works to leverage the value.”

That doesn’t mean every engineer will now have to become a computer scientist. It does, however, raise the question of whether some of the industry’s more seasoned engineers will be willing to trust an opaque recommendation from a deus ex machina. Trust will need to be built, and that comes with time and proof:

“It's very difficult to understand how the model does its job. It's better to think about it as a black box – if I put data into the black box and I get out a result that makes sense, and I can then verify that through observation, I will gain confidence in the model. As long as a model is not making changes, I don't have to have a high degree of confidence. I can just observe that the model is generally correct, and I'll be comfortable putting more value and reliance on the model. So, it's a recursive process.”

Human instinct matters

Meanwhile, just as an airline pilot regularly lands a plane manually when the computers could do it all, so too, the data center custodian is on hand to use their human instinct, whilst simultaneously learning to trust the black box:

“If it's an anomaly-like algorithm, it's going to detect where there is an anomaly, and then point to the likely root cause. The operator would examine the piece of equipment identified and if they find a problem, they're going to fix it. The more the model succeeds, the more confidence there will be in the model, remembering, we're not dealing with control. That's a key point to remember because when you exercise control, the bridge between theory and practice is very long, especially when you're in a highly risk-averse environment.”

This is another reason Compiano believes in analysis and recommendation, but not control, which is in his view best left to the humans, aided by the DCIM.

“The big box data centers will always have people there. Somebody has to go in there and move servers around unless you go way off the edge and start having robots do that for you. But I can't stress too much that there's a lot of value to be achieved with models that make recommendations. So, why don't we just focus on what's easy, repeatable, and scalable and not get too hung up about the potential risk of doing control?”

Adopting ML is not just a consideration for new builds. Operators of existing data centers will also need to weigh the potential and the cost-benefit of AI solutions when it comes to upgrades and consolidation: “If you're buying DCIM today, make sure that it will support your needs tomorrow. The vendor must have something on its roadmap that gives the customer confidence that when they are ready to deploy machine learning – because everybody will at some point – there is some form of AI/ML that the DCIM tool can support. Otherwise, the customer is going to end up with a second product that they're trying to operate concurrently, which is not the answer.”

Responsible machine learning

We ask Compiano what he thinks the ML-enabled future looks like. He reminds us that this is not going to be an overnight transformation:

“The journey today to wide adoption of machine learning is a three to five-year journey. That could include the adoption of new and emerging technologies like liquid cooling, change in data center configuration and higher power densities, or maybe less redundant infrastructure because of the compute loads that don't need high redundancy.”

He then returns to the slow-and-steady mantra of introducing ML in a responsible, measured way, rather than going all-in too soon:

“The immediate future is the full-scale deployment of DCIM as a fully integrated platform. Many operators are struggling with legacy DCIM products, or multiple solutions that are not integrated. They're still struggling with the cost and effort to bring their DCIM into a fully integrated contemporary enterprise architecture. After you get to the moon, let's talk about going to the next planet.”

Compiano tells us that Modius is already shipping the OpenData AI/ML capability and continues to add features and capabilities to the technology. You can reach them at [email protected] or +1-(888) 323.0066.
