Like the superposed quantum bits, or qubits, they contain, quantum computers are in two states simultaneously. They are both ready, and not ready, for the data center.

On the one hand, most quantum computer manufacturers have at least one system that customers can access through a cloud portal from something that at least resembles IT white space. Some are even selling their first systems for customers to host on-premise, while at least one company is in the process of deploying systems into retail colocation facilities.

But these companies are still figuring out what putting such a machine into production space really means. Some companies claim to be able to put low-qubit systems into rack-ready form factors, but as we scale, quantum machines are likely to grow bigger, with multiple on-site systems networked together.

Different technologies require disparate supporting infrastructure and have their own foibles around sensitivity. As we tentatively approach the era of ‘quantum supremacy’ – where quantum computers can outperform traditional silicon-based computing at specific workloads – questions remain around what the physical footprint for this new age of compute will actually look like and how quantum computers will fit into data centers today and in the future.

The current quantum data center footprint

Quantum computers are here and available today. They vary in size and form factor depending on which type of quantum technology is used and how many qubits – the quantum version of binary bits – a system is able to operate with.

While today’s quantum systems cannot yet outperform “classical” silicon-based computing systems, many startups in the space predict that the day may soon come when quantum processing units (QPUs) prove useful for specific use cases.

As with traditional IT, there are multiple ways to access and use quantum computers. Most of the major quantum computing providers are pursuing a three-pronged strategy: on-premise systems for customers, cloud- or dedicated-access systems hosted at the quantum companies’ own facilities, and cloud access via a public cloud provider.

AWS offers access to a number of third-party quantum computers through its Braket service launched in 2019, and the likes of Microsoft and Google have similar offerings.

As far as DCD understands, all of the third-party quantum systems offered on public clouds are still hosted within the quantum computing company’s facilities, and served through the provider’s networks, via APIs. None that we know of is at a cloud provider’s data centers.

The cloud companies are also developing their own systems, with Amazon’s efforts based out of the AWS Center for Quantum Computing, opened in 2019 near the Caltech campus in Southern California.

IBM offers access to its fleet of quantum computers through a portal, with the majority of its systems housed at an IBM data center in New York. A second facility is being developed in Germany.

Big Blue has also delivered a number of on-premise quantum computers to customers, including US healthcare provider Cleveland Clinic’s HQ in Cleveland, Ohio, and the Fraunhofer Society’s facility outside Stuttgart, Germany. Several other dedicated systems are set to be hosted at IBM facilities in Japan and Canada for specific customers.

Many of the smaller quantum companies also operate data center white space in their labs as they look to build out their offerings.

NYSE-listed IonQ currently operates a manufacturing site and data center in College Park, Maryland, and is developing a second location outside Seattle in Washington. It offers access to its eight operational systems via its own cloud as well as via public cloud providers, and has signed deals to deliver on-premise systems.

Nasdaq-listed Rigetti operates facilities in Berkeley and Fremont, California. In Berkeley, the company operates around 4,000 sq ft of data center space, hosting 10 dilution refrigerators and racks of traditional compute; six more fridges are located at the QPU fab in Fremont for testing. The company currently has the 80-qubit Aspen M3 online, deployed in 2022 and available through public clouds. The 84-qubit Ankaa-1 was launched earlier this year but was taken temporarily offline, while Ankaa-2 is due online before the end of 2023. Rigetti fridge supplier Oxford Instruments is also hosting a system in its UK HQ in Oxfordshire.

Quantum startup QuEra operates a lab and data center near the Charles River in Boston, Massachusetts. The company offers access to its single 256-qubit Aquila system through AWS Braket as well as its own web portal. The company has two other machines in development.

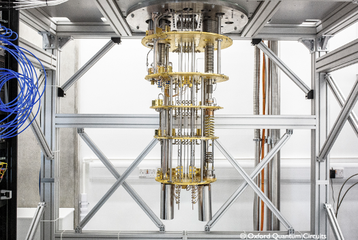

UK firm Oxford Quantum Circuits (OQC) operates the 4-qubit Sophia system, hosted at its lab in Thames Valley Science Park, Reading. It offers access to its systems through its own private cloud, and to its 8-qubit Lucy quantum computer via AWS. It has also been deploying systems to two colocation facilities, in the UK and Japan; both have gone live since this piece was written.

Based out of Berkeley, California, Atom Computing operates one 100-qubit prototype system, and has two additional systems in development at its facility in Boulder, Colorado. The company is set to offer access to its system via a cloud portal in the near term, followed by public cloud access and, finally, on-premise systems. NREL is an early customer.

Honeywell’s Quantinuum unit operates out of a facility in Denver, Colorado, where Business Insider reported the company has two systems in operation and a third in development. The 32-qubit H2 system reportedly occupies around 200 sq ft of space. The first 20-qubit H1 system was launched in 2020.

Quantum computers: Cloud vs on-prem

As with any cloud vs on-premise discussion, there’s no one-size-fits-all answer to which is the right approach.

Companies not wanting the cost and potential complexity of housing a quantum system may opt to go the cloud route. This offers immediate access to systems relatively cheaply, giving governments and enterprises a way to explore quantum’s capabilities and test some algorithms.

The trade-off is limited access based on timeslots and queues – a blocker for any real-world production uses – and potentially latency and ingress/egress complexities.

Cloud – either via public provider or direct from the quantum companies – is currently by far the most common and popular way to access quantum systems while everyone is still in the experimental phase.

There are multiple technologies and algorithms, and endless potential use cases. While end-users continue to explore their potential, the cost of buying a dedicated system is a difficult sell for most; prices to buy even single-digit qubit systems can reach more than a million dollars.

“It's still very early, but the demand is there. A lot of companies are really focused on building up their internal capabilities,” says Richard Moulds, general manager - Amazon Braket at AWS. “At the moment nobody wants to get locked into a technology. The devices are very different and it’s unclear which of these technologies will prevail in the end.”

“Mapping business problems and formatting them in a way that they can run a quantum computer is not trivial, and so a lot of research is going into algorithm formulation and benchmarking,” he adds. “Right now it's all about diversity, about hedging your bets and having easy access for experimentation.”

For now, however, the cloud isn’t ready for production-scale quantum workloads.

“There's some structural problems at the moment in the cloud; it is not set up yet for quantum in the same way we are classically,” says IonQ CEO Peter Chapman. “Today the cloud guys don't have enough quantum computers, so it's more like an old mainframe days; your job goes into a queue and when it gets executed depends on who's in front of you.

“They haven't bought 25 systems to be able to get their SLAs down,” he says.

On the other hand, they could offer dedicated time on a quantum computer, “which would be great for individual customers who want to run large projects, but to the detriment of everyone else because there’s still only one system.”

The balance of cloud versus on-premise will likely change in the future. If quantum computers reach ‘quantum supremacy,’ companies and governments will no doubt want dedicated systems to run their workloads constantly, rather than sharing access in batches.

Some of these dedicated systems will be hosted by a cloud provider or via the hardware provider as a managed service, and some will be hosted on-premise in enterprise data centers, national labs, and other owned facilities.

“Increasingly as these applications come online, corporations are likely going to need to buy multiple quantum computers to be able to put it into production,” suggests Chapman. “I'm sure the cloud will be the same; they might buy for example 50 quantum computers, so they can get a reasonable SLA so that people can use it for their production workloads.”
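Chapman’s point about fleet size and SLAs can be illustrated with a standard queueing model. The sketch below is not how any provider actually schedules jobs; it assumes jobs arrive at random (Poisson arrivals), run times are exponentially distributed, and every machine in the fleet is interchangeable (the textbook M/M/c model with the Erlang C formula). The arrival rate and job length are hypothetical numbers chosen only to show the shape of the curve.

```python
def erlang_c_wait_probability(arrival_rate, service_rate, machines):
    """Probability that a newly submitted job has to queue (Erlang C formula)."""
    offered_load = arrival_rate / service_rate   # average number of busy machines
    utilization = offered_load / machines
    if utilization >= 1:
        raise ValueError("Fleet is overloaded: the queue would grow without bound")
    term = 1.0          # offered_load**k / k!, starting at k = 0
    partial_sum = 0.0   # sum of those terms for k = 0 .. machines - 1
    for k in range(machines):
        partial_sum += term
        term *= offered_load / (k + 1)
    # After the loop, term equals offered_load**machines / machines!
    tail = term / (1 - utilization)
    return tail / (partial_sum + tail)


def mean_queue_wait(arrival_rate, service_rate, machines):
    """Average time a job spends waiting before it starts, in the same time unit as the rates."""
    p_wait = erlang_c_wait_probability(arrival_rate, service_rate, machines)
    return p_wait / (machines * service_rate - arrival_rate)


if __name__ == "__main__":
    # Hypothetical workload: 20 jobs per hour, each averaging 2.5 minutes (24 jobs per hour per machine).
    jobs_per_hour = 20.0
    jobs_served_per_hour = 24.0
    for fleet_size in (1, 2, 5, 25):
        wait_minutes = 60 * mean_queue_wait(jobs_per_hour, jobs_served_per_hour, fleet_size)
        print(f"{fleet_size:>2} machine(s): average queue wait of {wait_minutes:.4f} minutes")
```

Under these assumptions, the average wait falls steeply as machines are added, which is the intuition behind buying dozens of systems to meet an SLA rather than one.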

While hosting with a third party comes with its own benefits, some systems may be used on highly classified or commercially sensitive workloads, meaning entities may feel more comfortable hosting systems on-premise. Some computing centers may want on-premise systems as a way to lure more investment and talent to an area to create or evolve technology hubs.

In the near term, most of the people DCD spoke to expect on-premise quantum systems to be the realm of supercomputing centers – whether university or government – first as a way to further research into how quantum technology and algorithms work within hybrid architectures, and later to further develop the sciences.

“It might make sense for a large national university or a large supercomputing center to buy a quantum computer because they're used to managing infrastructure and have the budgets,” says Amazon’s Moulds.

Enterprises are likely to remain cloud-based users of quantum systems, even after labs and governments start bringing them in-house. But once specific and proven-out use cases emerge that might require constant access to a system – for example, any continuous logistics optimizations – the likelihood of having an on-premise system in an enterprise data center (or colocation facility) will increase.

While the cloud might always be an easy way to access quantum computers for all the same reasons companies choose it today for classical compute – including being closer to where companies store much of their data – it may also be a useful hedge against supply constraints.

“These machines are intriguing physical devices that are really hard to manufacture, and demand could certainly outstrip supply for a while,” notes Moulds. “When we get to the point of advantage, as a cloud provider, our job is to make highly scalable infrastructure available to the entire customer base, and to have to avoid the need to go buy your own equipment."

“We think of cloud as the best place for doing that for the same reason we think that it’s the best way to deliver every other HPC workload. If you've migrated to the cloud and you've selected AWS as your cloud provider, it's inconceivable that we would say go somewhere else to get your quantum compute resources.”

Fridges vs lasers means different quantum form factors

There are a number of different quantum technologies – trapped ion, superconducting, atom processors, spin qubits, photonics, quantum annealing – but they can largely be boiled down to two camps: ones that use lasers and generally operate at room temperature, and ones that need cooling with dilution refrigerators down to temperatures near absolute zero.

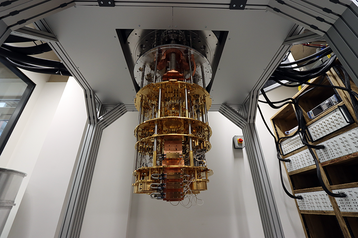

The most well-known images of quantum computers – featuring the ‘golden chandelier’ covered in wires – are supercooled. These systems – in development by the likes of Google, IBM, OQC, Silicon Quantum Computing, Quantum Motion, and Amazon – operate at temperatures in the low millikelvins.

With the chandelier design, the actual quantum chip is at the bottom. The chandeliers sit within dilution refrigerators: large cryogenic cooling units that use helium-3 in closed-loop systems to supercool the entire system.

While the cooling technology these systems rely on is relatively mature – dilution fridges date back to the 1960s – it has traditionally been used for research in fields such as materials science. The need to place these units in production data centers as part of an SLA-contracted commercial service is a new step for suppliers.

Rigetti offers its QPUs both as a standalone unit and as a whole system including dilution refrigerators etc.; the price difference can reach into the millions of dollars.

Other quantum designs – including Atom, QuEra, Pasqal, and Quantinuum – rely on optical tables, manipulating lasers and optics to control qubits. While these systems remove the need for supercooling, they often have a large footprint.

These lasers can be powerful – and will grow more so as qubit counts grow – and are cooled by air or water, depending on the manufacturer. Atom’s current system sits on a 5ft by 12ft optical table.

IonQ uses lasers to actually supercool its qubits down to millikelvin temperatures; the qubits sit in a small vacuum chamber inside the overall system, meaning most of the system can operate using traditional technology. The company’s existing systems are generally quite large, but it is aiming to have rack-mounted systems available to customers in the next few years.

Rack-based quantum computers?

While the IT and data center industries have standardized around measuring servers in racks and ‘U’s, quantum computers are a long way off from fitting in your standard pizza box unit. Most of today’s low-qubit systems would struggle to squeeze into a whole 42U rack, and future systems will likely be even larger.

First announced in 2019, IBM’s System One is enclosed in a nine-foot sealed cube, made of half-inch thick borosilicate glass. Though Cleveland Clinic operates a 3MW, 263,000 sq ft data center in Brecksville, to the south of Cleveland, the IBM quantum system has been deployed at the clinic’s main campus in the city.

Jerry Chow, IBM fellow and director of quantum infrastructure research, previously told DCD: “The main pieces of work in any installation are always setting up the dilution refrigerator and the room temperature electronics."

While rack-mounted systems are available now from some vendors, many are lower-qubit systems that are only useful as research and testing tools. Many providers hope that one day useful quantum systems will be miniaturized enough to be rack-sized, but for now any systems targeting quantum supremacy will likely remain large.

“Some companies are building these very small systems within a rack or within a cart of sorts,” says Atom’s Rob Hays. “But those are very small-scale experimental systems; I don't see that being a typical deployment model at large scale when we have millions of qubits. I don't think that's really the right deployment model.”

In the current phase of development, where QPU providers are looking to scale up qubit counts quickly, challenges around footprint remain ongoing.

With supercooled systems, the current trend is to ‘brute force’ cooling as qubit counts increase. More qubits require more cooling and more wires, which demands larger and larger fridges.

With incremental improvements, current cooling approaches could scale QPUs up to around 1,000 qubits. After that, networking multiple quantum systems will be required to continue scaling.

IBM is working with Bluefors to develop its Kide cryogenic platform: a smaller, modular system that it expects will enable it to connect multiple processors together. Bluefors describes Kide as a cryogenic measurement system designed for large-scale quantum computing, able to support the measurement infrastructure required to operate more than 1,000 qubits, with a capacity of over 4,000 RF lines and 500kg of payload.

The hexagonal-shaped unit is about six times larger than the previous model, the XLD1000sl system. The vacuum chamber measures just under three meters in height and 2.5 meters in diameter, and the floor beneath it needs to be able to take about 7,000 kilograms of weight.

However, IBM has also developed its own giant dilution refrigerator to house future large-scale quantum computers. Known as Project Goldeneye, the proof-of-concept ‘super fridge’ can cool 1.7 cubic meters of volume down to around 25 mK – up from 0.4-0.7 cubic meters on the fridges it was previously using. The Goldeneye fridge is designed for much larger quantum systems than IBM has currently developed. It can hold up to six individual dilution refrigerator units and weighs 6.7 metric tons.
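For facilities teams, the Kide figures quoted above translate into a floor-loading question. The rough sketch below assumes, purely for illustration, that the roughly 7,000kg load is spread evenly over a circular footprint matching the 2.5-meter vacuum chamber diameter; the real unit is hexagonal and its actual support footprint is not stated here. The floor rating passed to the check is a hypothetical placeholder, not a figure from any vendor or standard.

```python
import math


def distributed_load_kg_per_m2(mass_kg, footprint_diameter_m):
    """Average floor load if the mass is spread evenly over a circular footprint."""
    footprint_area_m2 = math.pi * (footprint_diameter_m / 2) ** 2
    return mass_kg / footprint_area_m2


def fits_floor_rating(mass_kg, footprint_diameter_m, floor_rating_kg_per_m2):
    """True if the average load stays within the given (hypothetical) floor rating."""
    return distributed_load_kg_per_m2(mass_kg, footprint_diameter_m) <= floor_rating_kg_per_m2


if __name__ == "__main__":
    # Figures quoted in the article: about 7,000 kg, 2.5 m chamber diameter.
    kide_load = distributed_load_kg_per_m2(7000, 2.5)
    print(f"Approximate distributed load: {kide_load:.0f} kg per square meter")
    # Hypothetical floor rating, used only to show how the check would be applied.
    print("Within a 1,200 kg/m2 rating:", fits_floor_rating(7000, 2.5, 1200))
```

A real assessment would also need point loads at the supports and the weight of ancillary equipment, neither of which is covered by this simple average.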

For laser and optical table-based designs, companies are also looking at how to make the form factors for their systems more rack-ready.

“Our original system was built on an optical table,” says Yuval Boger, CMO of QuEra. “As we move towards shippable machines, then instead of just having an optical table that has sort of infinite degrees of flexibility on how you could rotate stuff, we’re making modules that are sort of hardcoded. You can only put the lens a certain way, so it's much easier to maintain.”

IonQ promises to deliver two rack-mounted systems in the future. The company recently announced a rack-mounted version of its existing 32-qubit Forte system – to be released in 2024 – as well as a far more powerful Tempo system in 2025. Renderings suggest Forte comprises eight racks, while Tempo will span just three.

“Within probably five years, if you were to look at one of our quantum computers you couldn't really tell if it was any different than any other piece of electronics in your data center,” IonQ’s Chapman tells DCD.

AWS’s Moulds notes quantum systems shouldn’t be looked at as replacement devices for traditional classical supercomputers. QPUs, he says, are enablers to improve clusters in tandem.

“These systems really are coprocessors or accelerators. You'll see customers running large HPC workloads and some portions of the workload will run on CPUs, GPUs, and QPUs. It's a set of computational tools where different problems will draw on different resources.”

Rigetti’s CTO David Rivas agrees, predicting a future where QPU nodes will be just another part of large traditional HPC clusters – though perhaps not in the same row as the CPU/GPU node.

“I'm pretty confident that quantum processors attached to supercomputer nodes is the way we're going to go. QPUs will be presented in an architecture of many nodes, some of which will have these quantum computers attached and relatively close.”

Powering quantum now and in the future

Over the last 50 years, classical silicon-based supercomputers have managed to push system performance to ever-increasing heights. But this comes with a price, with more and more powerful systems drawing ever-increasing amounts of energy.

The latest exascale systems are thought to require tens of megawatts – the 1 exaflops Frontier is thought to use 21MW, while the 2 exaflops Aurora is thought to need a whopping 60MW.

Today’s quantum computers have not yet reached quantum supremacy. But assuming the technology does eventually reach the point where it is useful for even just a small number of specific workloads and use cases, it could offer a huge opportunity for energy saving.

Companies DCD spoke to suggest power densities on current quantum computers are well within tolerance compared to their classical HPC cousins – generally below 30kW – and that computing power could potentially grow non-linearly relative to power needs.

QuEra’s Boger says his company’s 256-qubit Aquila neutral-atom quantum computer – a machine reliant on lasers on an optical table and reportedly equivalent in size to around four racks – currently consumes less than 7kW, but predicts its technology could allow future systems to scale to 10,000 qubits and still require less than 10kW.

For comparison, he says rival systems from some of the cloud and publicly listed quantum companies currently require around 25kW.
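The scaling Boger describes is easier to see as power per qubit. The short sketch below uses only the figures quoted above and treats the “less than” values as upper bounds, so the results are ceilings rather than measurements; the function name is illustrative.

```python
def watts_per_qubit(system_power_kw, qubit_count):
    """Upper-bound power per qubit, given a system-level power ceiling in kilowatts."""
    return system_power_kw * 1000 / qubit_count


if __name__ == "__main__":
    current = watts_per_qubit(7, 256)         # Aquila today: under 7kW for 256 qubits
    projected = watts_per_qubit(10, 10_000)   # Boger's projection: under 10kW for 10,000 qubits
    print(f"Today: at most {current:.1f} W per qubit")
    print(f"Projected: at most {projected:.1f} W per qubit")
    print(f"Implied improvement: roughly {current / projected:.0f}x")
```

On those numbers, the per-qubit power ceiling would fall from roughly 27W to 1W, even though total system power rises by less than half.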

Other providers agree that laser-based systems will need more power as more qubits are introduced, but the power (and therefore cooling) requirements don’t scale significantly.

Atom CEO Hays says his company’s current systems require ‘a few tens of kilowatts’ for the whole system, including the accompanying classical compute infrastructure – equivalent to a few racks.

But as future generations of QPUs grow orders of magnitude more powerful, he says, power requirements may only double or triple.

While individual systems might not present an insurmountable challenge for data center engineers, it’s worth noting many experts believe quantum supremacy will likely require multiple systems networked locally.

“I think there's little question that some form of quantum networking technology is going to be relevant there for the many thousands or hundreds of thousands of qubits,” says Rigetti’s Rivas.

Quantum cloud providers of the future

In less than 12 months, generative AI has become a common topic across the technology industry. AI cloud providers such as CoreWeave and Lambda Labs have raised huge amounts of cash offering access to GPUs on alternative platforms to the traditional cloud providers.

This is driving new business to colo providers. 2023 has seen CoreWeave sign leases for data centers in Virginia with Chirisa and in Texas with Lincoln Rackhouse. CoreWeave currently offers three data center regions in Weehawken, New Jersey; Las Vegas, Nevada; and Chicago, Illinois. The company has said that it expects to operate 14 data centers by the end of 2023.

At the same time, Nvidia is rumored to be exploring signing its own data center leases to power its DGX Cloud service to better offer its AI/HPC cloud offering direct to customers as well as via the public cloud providers.

A similar opportunity for colocation/wholesale providers could well emerge once quantum computers become more readily available with higher qubit counts and production-ready use cases.

“The same cloud service providers that dominate classical compute today will exist in quantum,” says Atom’s Hays. “But we could see the emergence of new service providers with differentiated technology that gives them an edge and allows them to grow and compete."

“I could see a future where we are effectively a cloud service provider, or become a box supplier to the major cloud service providers,” he notes. “If we are a major cloud service provider on our own, then I think we would still partner with data center hosters. I don't think we would want to go build a bunch of facilities around the world; we'd rather just leverage someone else’s.”

While all of the quantum companies DCD spoke to currently operate their systems (and clouds) on-premise, most said they would likely look to partner with data center firms in the future once the demand for cloud-based systems was large enough.

“We will probably always have space and we will probably always run some production systems,” says Rigetti’s Rivas. “But when we get to quantum supremacy, it will not surprise me if we start getting requests from our enterprise customers – including the public clouds – to colocate our machines in an environment like a traditional data center.”

Some providers have suggested they are already in discussions with some colocation/wholesale providers and exploring what dedicated space in a colo facility may look like.

“About two and a half years ago, I did go down and have a conversation with them about it,” Rivas adds. “When I first told them what our requirements were, they looked at me like I was crazy. But then we said the materials and chemical piece we can work with, and I suspect you guys can handle the cooling if we work a little bit harder at this.”

“It's not a perfect fit at this point in time, but for the kinds of machines we build, I don't think it's that far off.”

Quantum data centers: In touching distance?

Hosting QPU systems will remain challenging for the near-to-medium term.

The larger dilution fridges are tall and heavy, and operators will need to get comfortable with cryogenic cooling systems sitting in their data halls. Likewise, optical table-based systems require large footprints now and this will continue in the future, along with requiring isolation to avoid vibration interference.

“You need a very clean and quiet environment in order to be able to reliably operate. I do see separate rooms or separate sections for quantum versus classical. It can be adjacent, just a simple wall between them,” says Atom’s Hays.

“The way we've built our facility, we have a kind of hot/cold aisle. We have what you can think of as like a hot bay where we put all the servers, control systems, electronics, and anything that generates heat in this central hall. And then on the other side of the wall, where the quantum system sits, are individual rooms around 8 feet by 15 feet. We have one quantum system per room, and that way we can control the temperature, humidity, and sound very carefully.”

He notes, however, that data center operators could “pretty easily” accommodate such designs.

“It is pretty straightforward and something that any general contractor can go build, but it is a different configuration than your typical data center.”

Amazon’s own quantum computers are currently being developed at its quantum computing center in California, and that facility will host the first AWS-made systems that will go live on its Braket service.

“The way we've organized the building, we're thinking ahead to a world where there are machines exclusively used for customers and there are machines that are used exclusively by researchers,” AWS’s Moulds says. “We are thinking of that as a first step into the world of a production data center.”

When asked about the future and how he envisions AWS rolling out quantum systems at its data centers beyond the quantum center, Moulds says some isolation is likely.

“I doubt you'll see a rack of servers, and the fourth rack is the quantum device. That seems a long way away. I imagine these will be annexed off the back of traditional data centers; subject to the same physical controls but isolated.”

Rigetti is also considering what a production-grade quantum data center might look like.

“We haven't yet reached the stage of building out significant production-grade data centers as compared to an Equinix,” says CTO Rivas. “But that is forthcoming and we have both the space and a sense of where that's going to go.

“If you came to our place, it's not quite what you would think of as a production data center. It's sort of halfway between a production data center and a proper lab space, but more and more it's used as a production data center. It has temperature and humidity and air control, generators, and UPS, as well as removed roofing for electrical and networking.

“It also requires fairly significant chilling systems for the purposes of powering the dilution refrigerators and it requires space for hazardous chemicals including helium-3.”

As a production and research site, the data center “has people in more often than you would in a classical data center environment,” he says.

IonQ’s Chapman says his company’s Maryland site is ‘mundane.’

“There's a room which has got battery backup for the systems; there's a backup generator sitting outside that can power the data center; there’s multiple redundant vendors for getting to the Internet,” he says.

“The data room itself is fairly standard; AC air conditioning, relatively standard power requirements. There's nothing special about it in terms of its construction; we have an anti-static floor down but that's about it.”

“Installing and using quantum hardware should not require that you build a special building. You should be able to reuse your existing data center and infrastructure to house one of our quantum computers.”

UK quantum firm OQC has signed deals to deploy two low-qubit quantum computers in colocation data centers: one in Cyxtera’s LHR3 facility in Reading in the UK, and another in Equinix’s TY11 facility in Tokyo in Japan. OQC’s systems rely on dilution refrigerators, and this will be the first time such systems are deployed in retail colocation environments.

The wider industry will no doubt be watching intently. Quantum computers are generally very sensitive – manipulating single atom-sized qubits is precise work. Production data centers are electromagnetically noisy and filled with the whirring of sonically noisy fans.

How quantum computers will behave in this space is still, like a superposed set of quantum states, yet to be determined.