Data center operators have long avoided liquid cooling, keeping it as a potential option for the future but never a mainstream operational approach.

Liquid cooling proponents warned that rack power densities could only get so high before liquid cooling was necessary, but their forecasts were always forestalled. The Green Grid suggested that air cooling can only work up to around 25kW per rack, but AI applications threaten to go above that.

In the past, when rack power densities approached levels where air cooling could not perform, silicon makers would improve their chips’ efficiency, or cooling systems would get better.

Liquid cooling - an exotic option?

Liquid cooling was considered a last resort, an exotic option for systems with very high energy use. It required tweaks to the hardware, and mainstream vendors did not make servers designed to be cooled by liquid.

But all the world’s fastest supercomputers are cooled by liquid to support the high power density, and a lot of Bitcoin mining rigs have direct-to-chip cooling or immersion cooling, so their chips can be run at high clock rates.

Most data center operators are too conservative for that sort of thing, so they’ve backed off.

This year, that could be changing. Major announcements at the OCP Summit - the get-together for standardized data center equipment - centered on liquid cooling. And within those announcements, it’s now clear that hardware makers are making servers that are specifically designed for liquid cooling.

The reason is clear. Hardware power densities are now reaching the tipping point: “Higher power chipsets are very commonplace now, we’re seeing 500W or 600W GPUs, and CPUs reaching 800W to 1,000W,” says Joe Capes, CEO of immersion cooling specialist LiquidStack. “Fundamentally, it just becomes extremely challenging to air cool above 270W per chip.”

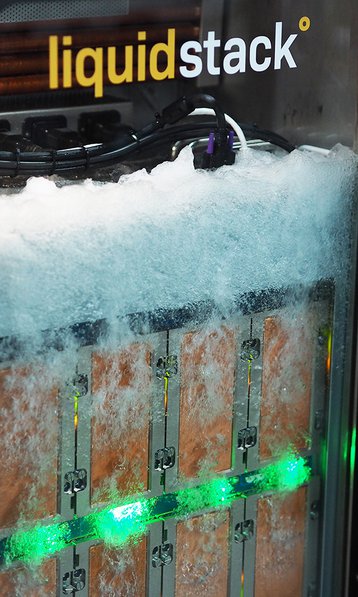

Alongside Wiwynn’s servers, for the third leg, LiquidStack has a partnership with 3M to use Novec 7000, a non-fluorocarbon dielectric fluid, which boils at 34°C (93°F) and recondenses in the company’s DataTank system, removing heat efficiently in the process.

Purpose-built servers are a big step because till now, all motherboards and servers have been designed to be cooled by air, with wide open spaces and fans. Liquid cooling these servers is a process of “just removing the fans and heat sinks, and tricking the BIOS - saying ‘You're not air cooled anymore.’”

That brings benefits, but the servers are bigger than they need to be, says Capes: “You have a 4U air cooled server, which should really be a 1U or a half-U size.”

LiquidStack is showing a 4U DataTank, which holds four rack units of equipment, and absorbs 3kW of heat per rack unit - equivalent to a density of 126kW per rack. The company also makes a 48U DataTank, holding the equivalent of a full rack.
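The density figure is simple multiplication, and a minimal sketch makes the working explicit. It assumes a "full rack" means 42 rack units, which is the height at which 3kW per rack unit gives the 126kW quoted; the function and constant names are illustrative only.

```python
# Assumption: "per rack" refers to a 42U rack, the height at which
# 3 kW per rack unit yields the 126 kW figure quoted in the article.
KW_PER_RACK_UNIT = 3.0
ASSUMED_RACK_UNITS = 42


def heat_load_kw(kw_per_unit: float, rack_units: int) -> float:
    """Return the total heat load in kW for a given number of rack units."""
    return kw_per_unit * rack_units


full_rack_kw = heat_load_kw(KW_PER_RACK_UNIT, ASSUMED_RACK_UNITS)
four_u_tank_kw = heat_load_kw(KW_PER_RACK_UNIT, 4)
forty_eight_u_tank_kw = heat_load_kw(KW_PER_RACK_UNIT, 48)

print(f"42U equivalent: {full_rack_kw:.0f} kW")
print(f"4U DataTank:    {four_u_tank_kw:.0f} kW")
print(f"48U DataTank:   {forty_eight_u_tank_kw:.0f} kW")
```

On the same basis, the 4U tank handles 12kW and a fully loaded 48U tank would handle 144kW, several times the roughly 25kW per rack that the Green Grid suggested as the limit for air.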

Standards

The servers in the tank are made by Wiwynn, to the OCP’s open accelerator interface (OAI) specification, using standardized definitions for liquid cooling. This has several benefits across all types of liquid cooling.

For one thing, it means that other vendors can get on board, and know their servers will fit into tanks from LiquidStack or other vendors, and users should be able to mix and match equipment in the long term.

“The power delivery scheme is another important area of standardization,” says Capes, “whether it be through AC bus bar, or DC bus bar, at 48V, 24V or 12V.”

For another thing, the simple existence of a standard should help convince conservative data center operators it’s safe to adopt - if only because the systems are checked with all the possible components that might be used, so customers know they should be able to get replacements and refills for a long time.

Take coolants: “Right now the marketplace is adopting 3M Novec 649, a dielectric with a low GWP (global warming potential),” says Capes. “This is replacing refrigerants like R410A and R407C that have very high global warming potential and are also hazardous.

“It's very important when you start looking at standards, particularly in the design of hardware, that you're not using materials that could be incompatible with these various dielectric fluids, whether they be Novec or fluorocarbon, or a mineral oil or synthetic oil. That's where OCP is really contributing a lot right now.”

An organization like OCP will kick all the tires, including things like the safety and compatibility of connectors, and the overall physical specifications.

“I've been talking recently with some colocation providers around floor load weighting,” says Capes. “It's a different design approach to deploy data tanks instead of conventional racks, you know, 600mm by 1200mm racks.” A specification tells those colo providers where it’s safe to put tanks, he says: “by standardizing and disseminating this information, it helps more rapidly enable the market to use different liquid cooling approaches.”

In the specific case of LiquidStack, the OCP standard did away with a lot of excess material, cutting the embodied footprint of the servers, says Capes: “There's no metal chassis around the kit. It's essentially just a motherboard. The sheer reduction in space and carbon footprint by eliminating all of this steel and aluminum and whatnot is a major benefit.”

Pushing the technology

Single-phase liquid cooling vendors emphasize the simplicity of their solutions. The immersion tanks may need some propellers to move the fluid around but largely use convection. There’s no vibration caused by bubbling, so vendors like GRC and Asperitas say equipment will last longer.

“People talk about immersion with a single stroke, and don’t differentiate between single-phase and two-phase,” GRC CEO Peter Poulin said in a DCD interview, arguing that single-phase is the immersion cooling technique that’s ready now.

But two-phase allows for higher density, and that can potentially go further than the existing units.

Although hardware makers are starting to tailor their servers to use liquid cooling, they’ve only taken the first steps of removing excess baggage and putting things slightly closer together. Beyond this, equipment could be made which simply would not work outside of a liquid environment.

“The hardware design has not caught up to two-phase immersion cooling,” says Capes. “This OAI server is very exciting, at 3kW per RU. But we’ve already demonstrated the ability to cool up to 5.25 kilowatts in this tank.”

Beyond measurement

The industry’s efficiency measurements are not well-prepared for the arrival of liquid cooling in quantity, according to Uptime research analyst Jacqueline Davis.

Data center efficiency has been measured by power usage effectiveness (PUE), the ratio of total facility power to IT power. But liquid cooling undermines how that measurement is made, because of the way it simplifies the hardware.

“Direct liquid cooling implementations achieve a partial PUE of 1.02 to 1.03, outperforming the most efficient air-cooling systems by low single-digit percentages,” says Davis. “But PUE does not capture most of DLC’s energy gains.”

Conventional servers include fans, which are powered from the rack, and therefore their power is included in the “IT power” part of PUE. They are considered part of the payload the data center is supporting.

When liquid cooling does away with those fans, this reduces energy, and increases efficiency - but harms PUE.

“Because server fans are powered by the server power supply, their consumption counts as IT power,” points out Davis. “Suppliers have modeled fan power consumption extensively, and it is a non-trivial amount. Estimates typically range between five percent and 10 percent of total IT power.”
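A rough worked example shows why removing fans can make PUE look worse even as total energy falls. This is a sketch under stated assumptions: the 1,000kW IT load and 100kW overhead are hypothetical, the fan share is the top of the range Davis cites, and the 1.03 partial PUE is treated as if it were the whole-facility figure, which is a simplification.

```python
def pue(it_kw: float, overhead_kw: float) -> float:
    """PUE: total facility power divided by IT power."""
    return (it_kw + overhead_kw) / it_kw


# Hypothetical air-cooled hall (illustrative numbers, not from the article).
AIR_IT_KW = 1000.0       # power metered at the servers, fans included
AIR_OVERHEAD_KW = 100.0  # cooling plant, distribution losses and so on
FAN_SHARE = 0.10         # top of the 5-10 percent range quoted by Davis

fan_kw = AIR_IT_KW * FAN_SHARE
fanless_it_kw = AIR_IT_KW - fan_kw

# Case 1: fans removed, facility overhead left unchanged.
air_pue = pue(AIR_IT_KW, AIR_OVERHEAD_KW)
fanless_pue = pue(fanless_it_kw, AIR_OVERHEAD_KW)
air_total_kw = AIR_IT_KW + AIR_OVERHEAD_KW
fanless_total_kw = fanless_it_kw + AIR_OVERHEAD_KW

# Case 2: overhead also shrinks until PUE reaches 1.03.
# Assumption: the quoted partial PUE is applied to the whole facility.
DLC_PUE = 1.03
dlc_total_kw = fanless_it_kw * DLC_PUE

pue_gain = 1 - DLC_PUE / air_pue
energy_gain = 1 - dlc_total_kw / air_total_kw

print(f"Air cooled:   PUE {air_pue:.3f}, total {air_total_kw:.0f} kW")
print(f"Fans removed: PUE {fanless_pue:.3f}, total {fanless_total_kw:.0f} kW")
print(f"DLC at 1.03:  total {dlc_total_kw:.0f} kW")
print(f"PUE improves {pue_gain:.1%}, energy falls {energy_gain:.1%}")
```

With these made-up inputs, stripping the fans alone nudges PUE up from 1.10 to about 1.11 while total power drops from 1,100kW to 1,000kW. In the second case PUE improves by around 6 percent but total energy falls by around 16 percent, which is the gap Davis describes.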

There’s another factor though. Silicon chips heat up and waste energy due to leakage currents - even when they are idling. This is one reason why data center servers use almost as much power when they are doing nothing as when they are busy, a shocking level of waste, which is not being addressed because the PUE calculation ignores it.

Liquid cooling can provide a more controlled environment, where leakage currents are lower, which is good. Potentially, with really reliable cooling tanks, the electronics could be designed differently to take advantage of this, allowing chips to resume their increases in power-efficiency.

That’s a good thing - but it raises the question of how these improvements will be measured, says Davis: “If the promise of widespread adoption of DLC materializes, PUE, in its current form, may be heading toward the end of its usefulness.”

Reducing water

“The big reason why people are going with two-phase immersion cooling is because of the low PUE. It has roughly double the amount of heat rejection capacity of cold plates or single-phase,” says Capes. But a stronger draw may turn out to be the fact that liquid cooling does not use water.

Data centers with conventional cooling systems often turn on some evaporative cooling when conditions require it, for instance if the outside air temperature is too high. This means running the data center chilled water through a wet heat exchanger, which is cooled by evaporation.

“Two-phase cooling can reject heat without using water,” says Capes. And this may be a factor for LiquidStack’s most high-profile customer: Microsoft.

There’s a LiquidStack cooling system installed at Microsoft’s Quincy data center, alongside an earlier one made by its partner Wiwynn. “We are the first cloud provider that is running two-phase immersion cooling in a production environment,” Husam Alissa, a principal hardware engineer on Microsoft’s team for data center advanced development, said of the installation.

Microsoft has taken a broader approach to its environmental footprint than some, with a promise to reduce its water use by 95 percent before 2024, and to become “water-positive” by 2030, producing more clean water than it consumes.

One way to do this is to run data centers hotter and use less water for evaporative cooling, but switching workloads to cooling by liquids with no water involved could also help. “The only way to get there is by using technologies that have high working fluid temperatures,” says Capes.

Industry interest

The first sign of the need for high-performance liquid cooling has been the boom in hot chips, says Capes: “The semiconductor activity really began about eight to nine months ago. And that's been quickly followed by a very dynamic level of interest and engagement with the primary hardware OEMs as well.”

Bitcoin mining continues to soak up a lot of it, and recent moves to damp down the Bitcoin frenzy in China have pushed some crypto facilities to places like Texas, which are simply too hot to allow air cooling of mining rigs.

But there are definite signs that customers beyond the expected markets of HPC and crypto-mining are taking this seriously.

“One thing that's surprising is the pickup in colocation,” says Capes. “We thought colocation was going to be a laggard market for immersion cooling, as traditional colos are not really driving the hardware specifications. But we've actually now seen a number of projects where colos are aiming to use immersion cooling technology for HPC applications.”

He adds: “We've been surprised to learn that some are deploying two-phase immersion cooling in self-built data centers and colocation sites - which tells me that hyperscalers are looking to move to the market, maybe even faster than what we anticipated.”

Edge cases

Another big potential boom is in the Edge, where micro-facilities are expected to serve data close to applications.

Liquid cooling scores here, because it allows compact systems which don’t need an air-conditioned space.

“By 2025, a lot of the data will be created at the Edge. And with a proliferation of micro data centers and Edge data centers, compaction becomes important,” says Capes. Single-phase cooling should play well here, but he obviously prefers two-phase.

“With single phase, you need to have a relatively bulky tank, because you're pumping the dielectric fluid around, whereas in a two-phase immersion system you can actually place the server boards to within two and a half millimeters of one another,” he said.

How far will this go?

It’s clear that we’ll see more liquid cooling, but how far will it take over the world? “The short answer is the technology and the chipsets will determine how fast the market moves away from air cooling to liquid cooling,” says Capes.

Another factor is whether the technology is going into new buildings or being retrofitted to existing data centers - because whether it’s single-phase or two-phase, a liquid cooled system will be heavier than its air cooled brethren.

Older data centers simply may not be designed to support large numbers of immersion tanks.

“If you have a three-floor data center, and you designed your second and third floors for 250 pounds per square foot of floor loading, it might be a challenge to deploy immersion cooling on all those floors,” says Capes.

“But the interesting dynamic is that because you can radically ramp up the amount of power per tank, you may not need those second and third floors. You may be able to accomplish on your ground floor slab, what you would have been doing on three or four floors with air cooling.”

Some data centers may evolve to have liquid cooling on the ground floor’s concrete slab base, and any continuing air cooled systems will be in the upper floors.

But new buildings may be constructed with liquid cooling in mind, says Capes: “I was talking to one prominent colocation company this week, and they said that they're going to design all of their buildings to 500 pounds per square foot to accommodate immersion cooling.”
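The floor-loading figures above can be turned into a quick check. This is a rough sketch only: the tank mass and the floor area it is spread over are hypothetical values chosen for illustration, and real structural assessments account for point loads, aisles and safety factors that this ignores. Only the 250 and 500 pounds per square foot ratings come from Capes.

```python
KG_PER_LB = 0.45359237  # exact definition of the pound in kilograms
M_PER_FT = 0.3048       # exact definition of the foot in meters

# Floor ratings quoted by Capes, in pounds per square foot.
OLDER_UPPER_FLOOR_PSF = 250.0
NEW_BUILD_PSF = 500.0


def floor_load_psf(mass_kg: float, area_m2: float) -> float:
    """Convert a mass spread over an area into pounds per square foot."""
    pounds = mass_kg / KG_PER_LB
    square_feet = area_m2 / (M_PER_FT ** 2)
    return pounds / square_feet


# Hypothetical filled immersion tank (assumed values, not vendor data).
TANK_MASS_KG = 2000.0
LOADED_AREA_M2 = 1.5  # tank footprint plus a share of surrounding floor

load = floor_load_psf(TANK_MASS_KG, LOADED_AREA_M2)
print(f"Distributed load: {load:.0f} psf")
print(f"Fits 250 psf floor: {load <= OLDER_UPPER_FLOOR_PSF}")
print(f"Fits 500 psf floor: {load <= NEW_BUILD_PSF}")
```

With these assumed inputs the load comes to about 273 pounds per square foot, too much for an upper floor rated at 250 but comfortably inside a building designed to 500.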

Increased awareness of the water consumption of data centers may push the adoption faster: “If other hyperscalers come out with aggressive targets for water reduction like Microsoft has, then that will accelerate the adoption of liquid cooling even faster.”

If liquid cooling hits a significant proportion of the market, say 20 percent, that will kick off “a transition, the likes of which we’ve never seen,” says Capes. “It's hard to say whether that horizon is, is on us in five years or 10 years, but certainly if water scarcity, and higher chip power continue to evolve as trends, I think we'll see more than half of the data centers liquid cooled.”