Carver Mead is in no rush. As a pioneer in microelectronics, helping develop and design semiconductors, digital chips and silicon compilers, Mead has spent his life trying to move computing forward.

“We did a lot of work on how to design a VLSI chip that would make the best computational use of the silicon,” Mead told DCD.

A whole new architecture

Mead was the first to predict the possibility of storing millions of transistors on a chip. Along with Lynn Conway, he enabled the Mead & Conway revolution in very-large-scale integration (VLSI) design, ushering in a profound shift in the development of integrated circuits in 1979.

Even then, he realized that “the fundamental architecture is vastly underutilizing the potential of the silicon by maybe a factor of a thousand. It got me thinking that brains are founded on just completely different principles than we knew anything about.”

Others had already begun to look to the brain for new ideas. In 1943, neurophysiologist Warren McCulloch and mathematician Walter Pitts wrote a paper on how neurons in the brain might work, modeling the first neural network using electrical circuits.

“The field as a whole has learned one thing from the brain,” Mead said. “And that is that the average neuron has several thousand inputs.”

This insight, slowly developed with increasingly advanced neural networks, led to what we now know as deep learning. “That’s big business today,” Mead said. “And people talk about it now as if we’ve learned what the brain does. That’s the furthest thing from the truth. We’ve learned only the first, the most obvious thing that the brain does.”

Working with John Hopfield and Richard Feynman in the 1980s, Mead realized that there was more that we could do with our knowledge of the brain, using it to unlock the true potential of silicon.

“Carver Mead is a pivotal figure, he looked at the biophysical firing process of the neurons and synapses, and - being an incredibly brilliant scientist - saw that it was analogous to the physics within the transistors,” Dr Dharmendra S. Modha, IBM Fellow and Chief Scientist for Brain-inspired Computing, told DCD. “He used the physics of the transistors and hence analog computing to model these processes.”

Mead called his creation neuromorphic computing, envisioning a completely new type of hardware that is different from the von Neumann architecture that runs the vast majority of computing hardware to this day.

The field has grown since, bringing in enthusiasts from governments, corporations and universities, all in search of the next evolutionary leap in computational science. Their approaches to neuromorphic computing differ; some stay quite close to Mead’s original vision, outlined in the groundbreaking 1989 book Analog VLSI and Neural Systems, while others have charted new territories. Each group believes that their work could lead to new forms of learning systems, radically more energy efficient processors, and unprecedented levels of fault-tolerance.

SpiNNaker's take

To understand one of these approaches, we must head to Manchester, England, where a small group of researchers led by Professor Steve Furber hopes to make machine learning algorithms “even more brain-like.”

“I’d been designing processors for 20 years and they got a thousand times faster,” the original architect of the Arm CPU told DCD. “But there were still things they couldn’t do that even human babies manage quite easily, such as computer vision and interpreting speech.”

Furber wanted to understand what was “fundamentally different about the way that conventional computers process information and the way brains process information. So that led me towards thinking about using computers to try and understand brains better in the hope that we’d be able to transfer some of that knowledge back into computers and build better machines.”

The predominant mode of communication between the neurons in the brain is through spikes, “which are like pure unit impulses where all the information is conveyed simply in the timing of the spikes and the relative timing of different spikes from different neurons,” Furber explained.

In an effort to mimic this, developers have created spiking neural networks, which use the frequency of spikes, or the timing between spikes, to encode information. Furber wanted to explore this, and built the SpiNNaker (Spiking Neural Network Architecture) system.
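To make spike coding concrete, here is a sketch that simulates a leaky integrate-and-fire neuron. This is a standard textbook model, not necessarily the one SpiNNaker runs. All parameter values (a 10 ms membrane time constant, a threshold of 1.0 and a 0.1 ms time step) are illustrative assumptions, as is the name `lif_spike_times`. A stronger input produces two effects: more spikes in a fixed window, which is a rate code, and an earlier first spike, which is a timing code.

```python
def lif_spike_times(input_current, duration_ms=100.0, dt_ms=0.1,
                    tau_ms=10.0, threshold=1.0):
    """Spike times (ms) of a leaky integrate-and-fire neuron driven by a
    constant input, integrated with forward Euler steps (illustrative values)."""
    v = 0.0  # membrane potential, resting at 0
    spike_times = []
    for step in range(int(round(duration_ms / dt_ms))):
        # dv/dt = (input - v) / tau: charge toward the input, leak toward rest
        v += dt_ms * (input_current - v) / tau_ms
        if v >= threshold:
            spike_times.append(round((step + 1) * dt_ms, 3))
            v = 0.0  # reset after firing
    return spike_times

for current in (0.5, 1.5, 3.0):
    spikes = lif_spike_times(current)
    rate_hz = len(spikes) / 0.1  # spikes per second over the 100 ms window
    first = spikes[0] if spikes else None
    print(f"input {current}: {rate_hz:.0f} Hz, first spike at {first} ms")
```

With these settings, a subthreshold input of 0.5 never fires. Raising the input from 1.5 to 3.0 increases the firing rate and also brings the first spike forward, so the same spike train can be read either as a rate code or as a timing code.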

SpiNNaker is based on the observation that modeling large numbers of neurons is an “embarrassingly parallel problem,” so Furber and his team used a large number of cores for one machine - hitting one million cores in October 2018.

The system still relies on the von Neumann architecture: true to his roots, Furber uses 18 ARM968 processor cores on a System-on-Chip that was designed by his team. To replicate the connectivity of the brain, the project maps each spike into a packet in a packet-switched fabric. “But it’s a very small packet and the fabric is intrinsically multicast, so when a neuron that is modeled in the processor spikes, it becomes a packet and then gets delivered to up to 10,000 different destinations.”
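The multicast scheme Furber describes can be sketched as a routing-table lookup. Each spike packet carries only a key identifying its source neuron, and the router copies the packet to every destination listed for that key. The key/mask matching below is loosely modeled on common descriptions of SpiNNaker-style routers. The key layout, the core numbers and the `RouteEntry`/`route` names are hypothetical, and a real router makes this decision in hardware.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RouteEntry:
    key: int                 # bit pattern the masked packet key must equal
    mask: int                # which bits of the packet key are significant
    destinations: frozenset  # hypothetical IDs of cores that receive a copy

def route(packet_key, table):
    """Return every destination for a spike packet, using the first entry
    whose masked key matches (a simplified key/mask lookup)."""
    for entry in table:
        if (packet_key & entry.mask) == entry.key:
            return entry.destinations
    return frozenset()  # no match: dropped here (a real router may default-route)

# Hypothetical key layout: upper 16 bits = source core, lower 16 bits = neuron index.
table = [
    RouteEntry(key=0x0001_0000, mask=0xFFFF_0000, destinations=frozenset({5, 9, 12})),
    RouteEntry(key=0x0002_0000, mask=0xFFFF_0000, destinations=frozenset(range(100, 110))),
]

spike_from_neuron_42_on_core_1 = (1 << 16) | 42
print(sorted(route(spike_from_neuron_42_on_core_1, table)))  # [5, 9, 12]
```

One table entry covers every neuron on a source core, so the table stays small even when each spike fans out to many destinations.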

The nodes communicate using simple messages that are inherently unreliable, a “break with determinism that offers new challenges, but also the potential to discover powerful new principles of massively parallel computation.”

Furber added: “The connectivity is huge, so conventional computer communication mechanisms don’t work well. If you take a standard data center arrangement with networking, it’s pretty much impossible to do real-time brain modeling on that kind of system as the packets are designed for carrying large amounts of data. The key idea in SpiNNaker is the way we carry very large numbers of tiny packets carrying on the order of one or two bits of information.”
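A rough calculation shows why packet size matters. It assumes a 40-bit spike packet, the size usually quoted for SpiNNaker packets without a payload. It compares that with sending each spike as a minimum-size Ethernet frame, counting the preamble and inter-frame gap. The model size and mean firing rate are hypothetical. The figures also cover only traffic leaving each neuron, before multicast fan-out multiplies it.

```python
# Assumed SpiNNaker multicast packet without payload: 8-bit header + 32-bit key.
SPIKE_PACKET_BITS = 40
# Minimum Ethernet frame (64 bytes) + preamble/SFD (8 bytes) + inter-frame gap (12 bytes).
MIN_ETHERNET_FRAME_BITS = (64 + 8 + 12) * 8  # 672 bits on the wire

def injected_bits_per_second(neurons, mean_rate_hz, bits_per_spike):
    """Traffic if every spike leaves its source as one packet (before fan-out)."""
    return neurons * mean_rate_hz * bits_per_spike

NEURONS = 1_000_000  # hypothetical model size
MEAN_RATE_HZ = 10    # hypothetical mean firing rate

tiny = injected_bits_per_second(NEURONS, MEAN_RATE_HZ, SPIKE_PACKET_BITS)
ethernet = injected_bits_per_second(NEURONS, MEAN_RATE_HZ, MIN_ETHERNET_FRAME_BITS)
print(f"40-bit spike packets: {tiny / 1e9:.2f} Gbit/s")
print(f"one minimum Ethernet frame per spike: {ethernet / 1e9:.2f} Gbit/s "
      f"({ethernet / tiny:.1f}x more)")
```

This best case for Ethernet ignores IP and UDP headers and still needs about 17 times the bandwidth. Batching many spikes into larger frames would reduce that overhead, but only by adding delay, which works against real-time modeling.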

The project's largest system, aptly named The Big Machine, runs in a disused metal workshop at the University of Manchester’s Kilburn Building. “They cleared out the junk that had accumulated there and converted it into a specific room for us with 12 SpiNNaker rack cabinets that in total require up to 50 kilowatts of power,” Furber said.

But the system has already spread: “I have a little map of the world with where the SpiNNaker systems are and we cover most continents, I think apart from South America.” In the second quarter of 2020, Furber hopes to tape out SpiNNaker 2, which “will go from 18 to 160 cores on the chip - Arm Cortex-M4Fs - and just by using a more up-to-date process technology and what we’ve learnt from the first machine, it’s fairly easy to see how we get to 10x the performance and energy efficiency improvements.”

The project has been in development for over a decade, originally with UK Research Council funding, but as the machine was being created, "this large European Union flagship project called the Human Brain Project came along and we were ideally positioned to get involved,” Furber said.

The Human Brain Project

One of the two largest scientific projects ever funded by the European Union, the €1bn ($1.15bn), decade-long initiative aims to develop the ICT-based scientific research infrastructure to advance neuroscience, computing and brain-related medicine.

Started in 2013, the HBP has brought together scientists from over 100 universities, helping expand our understanding of the brain and spawning somewhat similar initiatives across the globe, including BRAIN in the US and the China Brain Project.

“I think what’s pretty unique in the Human Brain Project is that we have that feedback loop - we work very, very closely with neuroscientists,” Professor Karlheinz Meier, one of the founders of the HBP, said.

“It’s neuroscientists working in the wetlabs, those doing theoretical neuroscience, developing principles for brain computation - we work with them every day, and that is really something which makes the HBP very special.”

Meier is head of the HBP’s Neuromorphic Computing Platform sub-project, which consists of two programs - SpiNNaker and BrainScaleS, Meier’s own take on neuromorphic computing.

“In our case we really have local analog computing, but it’s not, as a whole, an analog computer. The communication between neurons is taking place with the stereotypical action potentials in continuous time, like in our brain. Computationally, it’s a rather precise copy of the brain architecture.”

It differs from Mead’s original idea in some regards, but is one of the closest examples of the concept currently available. “That lineage has found its way to Meier,” IBM’s Modha said.

As an analog device, the system is capable of unprecedented speed at simulating learning. Conventional supercomputers can run 1,000 times slower than biology, while a system like SpiNNaker works in real-time - “if you want to simulate a day, it takes a day, or a year - it takes a year, which is great for robotics but it’s not good to study learning,” Meier said. “We can compress a day to 10 seconds and then really learn how learning works. You can only do this with an accelerated computing system, which is what the BrainScaleS system is.”
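The time scales Meier contrasts can be compared directly. This sketch converts the figures in his quote into speed-up factors:

- 1/1,000 for a conventional supercomputer
- 1 for a real-time system
- 86,400 s / 10 s = 8,640 for compressing a day into 10 seconds

The function name and the dictionary labels are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s

def wall_clock_seconds(biological_seconds, speedup):
    """Wall-clock time to emulate `biological_seconds` of neural activity on a
    system running `speedup` times faster than biology (speedup < 1 is slower)."""
    return biological_seconds / speedup

# Speed-up factors implied by the figures in Meier's quote.
SPEEDUPS = {
    "conventional supercomputer (1,000x slower)": 1 / 1000,
    "real-time system such as SpiNNaker": 1,
    "BrainScaleS (a day in 10 seconds)": SECONDS_PER_DAY / 10,  # 8,640x
}

for name, speedup in SPEEDUPS.items():
    seconds = wall_clock_seconds(SECONDS_PER_DAY, speedup)
    print(f"{name}: one biological day takes {seconds:,.0f} s "
          f"({seconds / SECONDS_PER_DAY:g} days)")
```

At the same 8,640-fold rate, a simulated year of learning would take 365 × 86,400 / 8,640 = 3,650 seconds, roughly an hour. On a supercomputer running 1,000 times slower than biology, that same year would take a thousand years.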

The project predates the HBP, with Meier planning to use the knowledge gained from the HBP for BrainScaleS 2: “One innovation is that we have an embedded processor now where we can emulate all kinds of plasticity, as well as slow chemical processes which are, so far, not taken into account in neuromorphic systems.”

For example, the effect of dopamine on learning is an area “we now know a lot more about - we can now emulate how reward-based learning works in incredible detail.”

Another innovation treats dendrites, the branched protoplasmic extensions of a nerve cell, as active elements that form part of the neuronal structure. “That, hopefully, will allow us to do learning without software. So far our learning mechanisms are mostly still implemented in software that controls neuromorphic systems, but now we are on the way to using the neuronal structure itself to do the learning.”

Meier’s work is now being undertaken at the European Institute for Neuromorphic Computing (EINC), a new facility at the University of Heidelberg, Germany. “It’s paradise: We have all the space we need, we have clean rooms, we have electronics workshops, we have an experimental hall where we build our systems.”

A full-size prototype of BrainScaleS 2 is expected by the end of 2018, and a full-size system at the EINC by the end of the HBP in 2023.

Cutting-edge research may yield additional advances. “I’m just coming from a meeting in York in the UK,” Meier told DCD as he was waiting for his return flight. “It was about the role of astrocytes, which are not the neural cells that we usually talk about, they are not neurons or chemical synapses; these are cells that actually form a big section of the brain, the so-called glial cells.

“Some people say they are just there to provide the brain with the necessary energy to make the neurons operational, but there are also some ideas that these glial cells contribute to information processing - for example, to repair processes,” he said. At the moment, the glial cells are largely ignored in mainstream neuromorphic computing approaches, but Meier believes there’s a possibility that these overlooked cells "play an important role and, with the embedded processors that we have on BrainScaleS 2, it could be another new input to neuromorphic computing. That’s really an ongoing research project.”

Indeed, our knowledge of the brain remains very limited. “Understanding more about the brain would definitely help,” Mike Davies, director of Intel’s Neuromorphic Computing Lab, told DCD with a laugh. “To be honest we don’t quite know enough.”

But the company does believe it knows enough to begin to “enable a broader space of computation compared to today’s deep learning models.”

Low-eee-hee

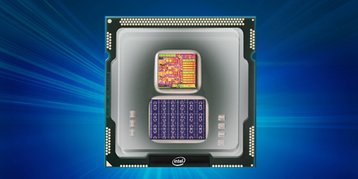

In early 2018, Intel unveiled Loihi, its first publicly announced neuromorphic computing architecture. “Loihi is the chip that we’ve recently completed and published - it’s actually the fourth chip that’s been done in this program, and it’s five chips now, one of which is in 10nm.”

Intel’s take on the subject lies somewhere between SpiNNaker and BrainScaleS. “We’ve implemented a programmable feature set which is faithful to neuromorphic architectural principles. It is all distributed through the mesh in a very fine-grained parallel way which means that we believe it is going to be very efficient for scaling and performance compared to a von Neumann type of an architecture approach.”

In late 2018, Loihi saw its first large deployment, with up to 768 chips integrated in a P2P mesh-based fabric system known as Pohoiki Springs. This system, run from the company’s lab in Oregon, will be available to researchers: “We can launch and explore the space of spiking neural networks with evolutionary search techniques. We will be spawning populations of networks, evaluating their fitness concurrently and then breeding them and going through an evolutionary optimization process - and for that, of course, you need a lot of hardware resource.”
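The evolutionary loop Davies outlines is a standard genetic algorithm:

1. Spawn a population.
2. Score every member concurrently.
3. Breed the best.
4. Repeat.

This sketch is a generic version, not Intel's software. The `fitness` function is a hypothetical stand-in that scores a short weight vector against a made-up target. A real search would instead run each candidate spiking network on the hardware and measure its behavior. The thread pool stands in for spreading evaluations across many chips.

```python
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical target for a toy task; a real search would score each candidate
# spiking network by running it on the hardware.
TARGET_WEIGHTS = [0.2, -0.5, 0.8, 0.1]

def fitness(genome):
    """Higher is better; 0 means the genome matches the target exactly."""
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET_WEIGHTS))

def breed(parent_a, parent_b, rng, mutation_scale=0.1):
    """Uniform crossover followed by Gaussian mutation."""
    child = [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]
    return [gene + rng.gauss(0.0, mutation_scale) for gene in child]

def evolve(generations=50, population_size=20, elite=5, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in TARGET_WEIGHTS]
                  for _ in range(population_size)]
    with ThreadPoolExecutor(max_workers=4) as pool:
        for _ in range(generations):
            # Evaluate the whole population concurrently (order is preserved).
            scores = list(pool.map(fitness, population))
            ranked = [genome for _, genome in
                      sorted(zip(scores, population), key=lambda p: p[0], reverse=True)]
            parents = ranked[:elite]  # elitism: the best survive unchanged
            children = [breed(rng.choice(parents), rng.choice(parents), rng)
                        for _ in range(population_size - elite)]
            population = parents + children
        scores = list(pool.map(fitness, population))
    best_score, best_genome = max(zip(scores, population), key=lambda p: p[0])
    return best_genome, best_score

best, score = evolve()
print("best genome:", [round(g, 2) for g in best], "fitness:", round(score, 4))
```

Keeping the elite unchanged means the best score can never get worse from one generation to the next. Larger populations, which are what make dedicated hardware attractive, let the search cover more of the network design space per generation.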

The concept of relying on a more digital approach can perhaps be traced to Modha, who spent the early 2000s working on “progressively larger brain simulations at the scale of the mouse, the rat, the cat, the monkey and eventually at the scale of the human,” he told DCD (for more on brain simulation, see the fact file below).

“This journey took us through three generations of IBM Blue Gene supercomputer - Blue Gene/L, P, and Q, and the largest computer simulation we did had 10¹⁴ synapses, the same scale as the human brain. It required 1.5 million processors, 1.5 petabytes of main memory and 6.3 million threads, and yet the simulation ran 1,500 times slower than real-time. To run the simulation in real-time would require 12 gigawatts of power, the entire power generation capability of the island nation of Singapore.”
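The figures in Modha’s account can be checked with a little arithmetic. This takes a petabyte as 10¹⁵ bytes and the brain’s power budget as the 20W Modha cites. The results:

- The main memory works out to about 15 bytes per synapse.
- A 1,500-fold slowdown means one second of brain activity took 25 minutes to simulate.
- 12 gigawatts is 600 million times the brain’s power budget.

```python
SYNAPSES = 10**14                              # human-brain scale
MAIN_MEMORY_BYTES = 1_500_000_000_000_000      # 1.5 PB, taking 1 PB = 10**15 bytes
SLOWDOWN = 1_500                               # times slower than real time
REAL_TIME_POWER_W = 12_000_000_000             # 12 GW estimate for real time
BRAIN_POWER_W = 20                             # the brain's power budget

bytes_per_synapse = MAIN_MEMORY_BYTES / SYNAPSES
minutes_per_biological_second = SLOWDOWN / 60
power_ratio = REAL_TIME_POWER_W / BRAIN_POWER_W

print(f"{bytes_per_synapse:.0f} bytes of memory per synapse")
print(f"{minutes_per_biological_second:.0f} minutes per simulated second")
print(f"{power_ratio:,.0f} times the brain's power")
```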

Faced with this realization, “the solution that we came up with was that it’s not possible to have the technologies the brain has, at least today - it may be possible in a century - but what we can achieve today is to look at the reflection of the brain.

“We broke apart from not just the von Neumann architecture, but the analog-neuron vision of Carver Mead himself,” Modha said. “While the brain - with its 20W of power, two-liter volume, and incredible capability - remains the ultimate goal, the path to achieve it must be grounded in the mathematics of what is architecturally possible. We don’t pledge strict allegiance to the brain as it is implemented in its own organic technology, which we don’t have access to.”

Instead, his team turned to a hypothesis within neuroscience that posits that the mammalian cerebral cortex “consists of canonical cortical microcircuits, tiny little clumps of 200-250 neurons, that are stylized templates that repeat throughout the brain, whether you are dealing with sight, smell, touch, hearing - it doesn’t matter.”

In 2011, the group at the IBM Almaden Research Center put together a tiny neurosynaptic core “that was meant to approximate or capture the essence of what a canonical cortical microcircuit looks like. This chip had 256 neurons, it was at the scale of a worm’s brain, C. elegans.

“Even though it was a small chip, it was the world’s first fully digital deterministic neuromorphic chip. It was the seed for what was to come later.”

After several iterations, they created TrueNorth, a chip with 4,096 neurosynaptic cores, 1 million neurons, and 256 million synapses at the scale of a bee brain.
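TrueNorth’s headline numbers follow from its core design. Each neurosynaptic core pairs 256 input axons with 256 neurons through a 256 × 256 binary crossbar of synapses. The toy core below captures that structure with deterministic integer arithmetic. Its neuron model (one shared weight, a constant leak, reset to zero) is a deliberate simplification, not IBM’s, and the class and function names are hypothetical. The scale function shows that the “256 million synapses” figure is 2²⁸, or about 268 million. It also shows that the 16-chip and 64-chip systems described below hold 16,777,216 and 67,108,864 neurons respectively.

```python
AXONS_PER_CORE = 256
NEURONS_PER_CORE = 256
CORES_PER_CHIP = 4096

class ToyNeurosynapticCore:
    """Deterministic digital core: a binary axon-by-neuron crossbar feeding
    integrate-and-fire neurons (simplified; not IBM's neuron model)."""

    def __init__(self, crossbar, weight=1, leak=1, threshold=4):
        self.crossbar = crossbar  # crossbar[axon][neuron] is True if connected
        self.weight = weight
        self.leak = leak
        self.threshold = threshold
        self.potential = [0] * NEURONS_PER_CORE

    def tick(self, active_axons):
        """Advance one time step; return the indices of neurons that fired."""
        fired = []
        for neuron in range(NEURONS_PER_CORE):
            drive = sum(self.weight for axon in active_axons
                        if self.crossbar[axon][neuron])
            v = max(0, self.potential[neuron] + drive - self.leak)
            if v >= self.threshold:
                fired.append(neuron)
                v = 0  # reset after a spike
            self.potential[neuron] = v
        return fired

def system_scale(chips=1):
    """(neurons, synapses) for a system of identical chips."""
    cores = chips * CORES_PER_CHIP
    return cores * NEURONS_PER_CORE, cores * AXONS_PER_CORE * NEURONS_PER_CORE

# Identity crossbar: axon i connects only to neuron i.
identity = [[axon == neuron for neuron in range(NEURONS_PER_CORE)]
            for axon in range(AXONS_PER_CORE)]
core = ToyNeurosynapticCore(identity, weight=2, leak=1, threshold=4)
print([core.tick({0}) for _ in range(4)])  # [[], [], [], [0]]
print(system_scale(1))                     # (1048576, 268435456)
```

Because every step uses integer arithmetic with no randomness, the same inputs always produce the same spikes. This is the property behind Modha’s description of a “fully digital deterministic” chip.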

IBM's journey was not one it undertook alone - the company received $70m in funding from the US military, with the Defense Advanced Research Projects Agency (DARPA) launching the Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) program to fund this and similar endeavors.

“Dr Todd Hilton, the founding program manager for the SyNAPSE program, saw that neurons within the brain have high synaptic fan-outs, and neurons within the brain can connect up to 10,000 other neurons, whereas prevailing neuromorphic engineering focused on neurons with very low synaptic fan-out,” Modha said. That idea helped with the development of TrueNorth, and advanced the field as a whole.

“DARPA can make a big difference, it’s shown a lot of interest in the general area because I think it smells that the time is right for something,” Mead told DCD, the day after giving a talk on neuromorphic computing at the agency's 60th anniversary event.

IBM is building larger systems, finding some success within the US government. Three years ago it tiled 16 TrueNorth chips in a four-by-four array, with 16 million neurons, and delivered the machine to the Department of Energy’s Lawrence Livermore National Laboratory to simulate the decay of nuclear weapons systems.

“In 2018 we took four such systems together and integrated them to create a 64 million neuron system, fitting within a 4U standard rack mounted unit,” Modha said.

Christened Blue Raven, the system is being used by the Air Force Research Laboratory “to enable new computing capabilities... to explore, prototype and demonstrate high-impact, game-changing technologies that enable the Air Force and the nation to maintain its superior technical advantage,” Daniel S. Goddard, director of AFRL’s Information Directorate, said last year.

But with TrueNorth, perhaps the most commercially successful neuromorphic chip on the market, still mainly being used for prototyping and demonstration, it is fair to say the field still has some way to go.

Those at Intel, IBM and BrainScaleS see their systems having an impact in as few as five years. “There is a case that can be made across all segments of computing,” Davies said.

“In fact it’s not exactly clear what the most promising domain is for nearest term commercialization of that technology. It’s easy to imagine use cases at the edge, and robotics is a very compelling space, but you can go all the way up to the data center, and the high end supercomputer.”

Others remain cautiously optimistic. “We don’t have any plans to incorporate neuromorphic chips into Cray’s product line,” the supercomputing company’s EMEA research lab director Adrian Tate told DCD. Arm Research’s director of future silicon technology, Greg Yeric, added: “I can see that it’s still in its infancy. But I think there’s a lot to gain - the people on the skeptic side are going to be wrong, I think it’s got some legs.”

Carver Mead, meanwhile, is not too worried. He is reminded of those early days of neural networks, of the excitement, the conferences and the articles. “This was the ’80s, it became very popular, but it didn’t solve any real problems, just some toy problems.”

The hype was followed by disillusionment, and “it went into a winter where there was no funding and no interest, nobody writing stories. It was a bubble that didn’t really go anywhere.”

That is, until it did. “It took 30 years - people working under the radar with no one paying the slightest attention except this small group of people just plugging away - and now you’ve got the whole world racing to do the next deep learning thing. It’s huge.”

When Mead thinks of the field he helped create, he remembers that winter, and how it thawed. “There will certainly come a time when neuromorphic chips are going to be so much more efficient, so much faster, so much more cost effective, so much more real time than anything you can do with the great big computers that we have now.”

--

Professor Karlheinz Meier (1955-2018)

On 24 October, after this article was originally published, Professor Karlheinz Meier tragically and unexpectedly passed away at the age of 63.

His colleagues' tribute to his extraordinary contribution to the Human Brain Project, and to the scientific field as a whole, can be read here.

This feature appeared in the October/November issue of DCD Magazine.