With an annualized run rate of $18 billion and millions of active customers, Amazon Web Services (AWS) is the largest cloud platform in the world, commanding a staggering 44.1 percent market share, according to Gartner.

That comfortable lead hasn't stopped the cloud giant from launching new features and enhancements at a steady clip, as the more than 40,000 developers and IT professionals gathered in Las Vegas for its annual re:Invent conference found out.

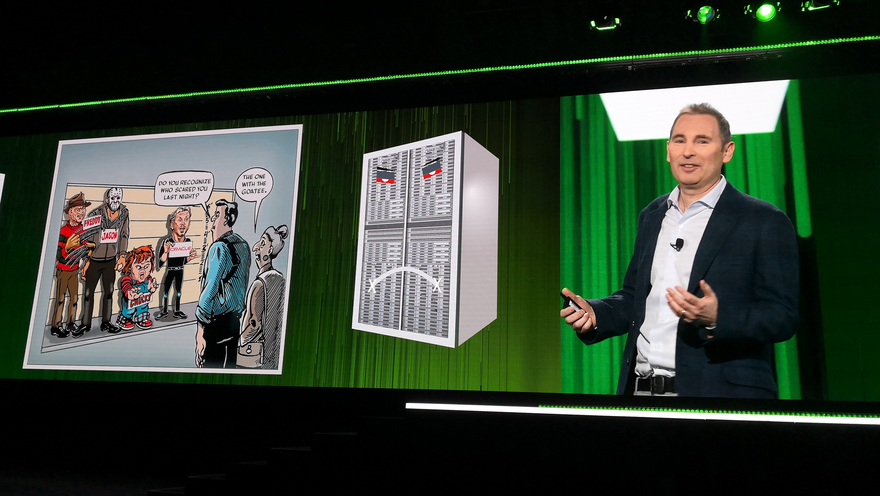

At this year's re:Invent, AWS introduced new services such as Amazon Neptune, added new types of server instances, shared details about the platform's inner workings, notably its load balancer, and poked fun at the competition.

Broader horizons

“AWS has incredibly robust and diverse features in the cloud with over a hundred services,” AWS CEO Andy Jassy said during his keynote.

“This is the most functional, the most capable technological infrastructure by far. The pace of innovation is continuing to expand. You won’t find others with more than half of what AWS has.”

Jassy took the wraps off a plethora of new services and announced improvements to existing ones. These included the preview of a managed graph database service called Amazon Neptune, and new capabilities for the company’s current database offerings that enhance their scalability and reliability.

For instance, multi-master and multi-region support for Amazon DynamoDB, delivered through its new Global Tables feature, makes it much simpler for developers to build high-performance, low-latency applications that are distributed around the globe. Elsewhere, the managed Amazon Aurora service gains multi-master support, with multiple write master nodes spread across multiple Availability Zones (AZs). Such a setup is extremely tricky to configure with most database engines, and it allows applications to transparently tolerate the failure of any master without additional tweaks or special logic.

The company also sought to offer more choice, as evidenced by the launch of the new Amazon EC2 I3 Bare Metal instance. This option allows customers to run their applications directly on the underlying Intel server, with access to as much as 512GB of memory and 36 hyperthreaded cores. Because it operates as part of the AWS cloud, developers can still use the features of a regular EC2 instance, such as security group settings and Amazon Elastic Block Store (Amazon EBS) volumes.

This new capability significantly extends AWS's reach and puts pressure on colocation providers, which have relied on enterprises opting for hybrid cloud deployments to house systems that cannot be virtualized. With the new I3 Bare Metal instance, enterprises have one less reason to choose a complex hybrid architecture over an all-cloud strategy.

Inventing a better wheel

Of course, not all the improvements are visible or available for public consumption. Speaking at the customary "Tuesday Night Live" session held the evening before the main keynote, Peter DeSantis, vice president of AWS Global Infrastructure, detailed some of the core engineering improvements the team had made under the hood.

Much of the information that DeSantis shared was already in the public domain, including material covered by AWS's lead data center architect, James Hamilton, at the same session last year. However, DeSantis also offered new insights into the company's custom-built load balancer.

Traditional load balancers built by external vendors took up a disproportionate amount of space and posed various operational challenges for AWS, he said. "The box usually has some internal resources that have some unknown limit. If you're not the one building [it], it's hard to know when things might break."

Then there was the issue of reliability. DeSantis pulled out network schematics to illustrate how a traditional 2N load balancer architecture can survive just one failure before it is left operating without redundancy: "As an operator, I can tell you that I don't sleep well at night when I am one failure away from customer impact."

These concerns led the company to build its own load balancer, which improves reliability by storing traffic state across three different hosts. The in-house design also raises utilization significantly: "[The new system] can run at 80-90 percent capacity depending on how you configure it. Very different from traditional load balancers [about 50 percent]."
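The reliability and utilization figures above follow from simple capacity arithmetic. The Python sketch below is a deliberately simplified model, not a description of how AWS's load balancer actually works: it assumes traffic is spread evenly across interchangeable hosts and that each flow's state is copied to a fixed number of hosts, and the function names and pool sizes are hypothetical. In a 2N pair, each box must stay at or below 50 percent so the survivor can absorb its partner's load, and after one failure only a single copy of the state remains. Spreading load over more hosts and keeping three copies raises both the safe utilization ceiling and the margin for a second failure.

```python
def max_safe_utilization(hosts: int, failures_tolerated: int = 1) -> float:
    """Highest even per-host load at which the surviving hosts can absorb
    the traffic of `failures_tolerated` failed hosts without exceeding 100%.

    Simplified model (assumption): load is spread evenly and any host can
    take over any other host's traffic.
    """
    if not 0 <= failures_tolerated < hosts:
        raise ValueError("at least one host must survive")
    return (hosts - failures_tolerated) / hosts


def copies_left(replicas: int, failures: int) -> int:
    """Copies of a flow's state that remain after `failures` replica hosts fail."""
    return max(replicas - failures, 0)


if __name__ == "__main__":
    # Hypothetical pool sizes; a classic 2N pair corresponds to hosts=2.
    for hosts in (2, 5, 10):
        print(f"{hosts} hosts: run each at up to {max_safe_utilization(hosts):.0%}")

    # After one failure, a 2-copy design has no spare copy; a 3-copy design still does.
    for replicas in (2, 3):
        left = copies_left(replicas, failures=1)
        status = "still redundant" if left >= 2 else "one failure from impact"
        print(f"{replicas} copies, 1 failure: {left} left ({status})")
```

Run as a script, it reports ceilings of 50, 80 and 90 percent for the three hypothetical pool sizes, and shows that only the three-copy design keeps a spare copy of the state after a single failure.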

The distributed load balancing technology that AWS created has since been improved further and now underpins many of its cloud services, including Amazon Elastic File System (EFS), the managed NAT Gateway, Network Load Balancer and AWS PrivateLink, DeSantis said.

Let builders build

Does AWS intend to add another hundred services to its cloud? It certainly seems that way, given the number of capabilities announced last week alone, spanning machine learning (ML), video workflows, virtual reality, networking and the Internet of Things, and even including an ML-powered security monitoring service.

Discussing the company's expanded support for containers, Jassy offered a clue: "This is the bar for compute. This is what Builders want. Because they don't have to pay for any of the features upfront. Builders don't want to settle for less than the functionality that others have. They realize that having everything, is everything."

The AWS team wants to deliver the most versatile array of tools for developers, he said. “Builders don’t want to settle for a fraction of the capabilities they are used to, when they move to the cloud.”

Jassy also offered some thoughts on freedom of choice. While some will point to the irony, given the very real threat of being locked into AWS's cloud ecosystem, the CEO clearly had traditional enterprise software vendors in his sights as he took a dig at Oracle: "Freedom is not just having all the capability to build anything that you want. But it is also the ability not to be locked in an abusive or one-size-fits-all characterization and tools."