Project Natick puts servers in a rack in a can at the bottom of the deep blue sea

Microsoft Research has deployed a small data center in a watertight container at the bottom of the ocean - and even delivered its Azure cloud from the sea bed.

Underwater data centers can be cooled by the surrounding water, and could also be powered by wave or tidal energy, says the Project Natick group at Microsoft Research, which deployed an experimental underwater data center one kilometer off the Pacific coast of the US between August and November of 2015.

Azure sea

The project aims to show that “edge” data centers can be deployed quickly in the sea, close to people who live near the coast. “Project Natick is focused on a cloud future that can help better serve customers in areas which are near large bodies of water,” says the project's website, pointing out that half the world’s population lives near a coastline.

“The vision of operating containerized datacenters offshore near major population centers anticipates a highly interactive future requiring data resources located close to users,” the project explains. “Deepwater deployment offers ready access to cooling, renewable power sources, and a controlled environment.”

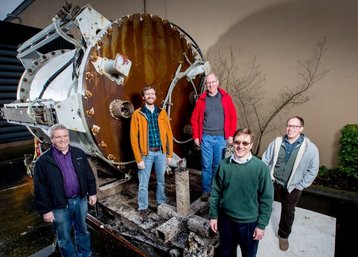

The group put one server rack inside a cylindrical vessel named Leona Philpot, after a character in the Halo series of Xbox games. The eight-foot (2.4m) diameter cylinder was filled with unreactive nitrogen gas and sat on the sea bed 30 ft (9m) down, connected to land by a fiber optic cable.

According to a report in the New York Times, the vessel was loaded with sensors to prove that the servers would keep working, that the vessel wouldn’t leak, and that it would not affect local marine life. These aims were achieved, and the group went on to run commercial Azure workloads on the system.

The sea provided passive cooling, but further tests this year might place a vessel near hydroelectric power sources off the coast of Florida or Europe.

From concept to seabed

The project began with a 2013 white paper, won backing in 2014 and, a year later, deployed Leona Philpot. This version of the concept uses current computer hardware, but if it makes it into wider deployment, the equipment would most likely be redesigned for the requirements of underwater operation. Most importantly, the equipment would have to run for years without any physical attention, but the team thinks this could become the norm in future.

“With the end of Moore’s Law, the cadence at which servers are refreshed with new and improved hardware in the datacenter is likely to slow significantly,” says the project site. “We see this as an opportunity to field long-lived, resilient datacenters that operate ‘lights out’ – nobody on site – with very high reliability for the entire life of the deployment, possibly as long as ten years.”

The first commercial version - if a commercial version is made - would have a 20-year lifespan and would be restocked with equipment every five years, as that’s the current useful lifespan for data center hardware.

Mass-produced underwater data centers could be deployed in 90 days, and would most likely have a redesigned rack system to make better use of an unattended cylindrical space. The group says widely deployed Natick units would be made from recycled material. It also argues that using the water for energy and cooling would effectively make each unit a “zero emission” site, as the energy taken from the environment would be returned to it.

But will it be commercialized? “Project Natick is currently at the research stage,” the group comments. “It’s still early days in evaluating whether this concept could be adopted by Microsoft and other cloud service providers.”

Update: Today, Microsoft has published a long blog post about Project Natick, along with a video.