Google wants to be known for good security. It wants users to trust it with their content and their business processes. To achieve this, its cloud-based services have to do a demonstrably better job than its rivals, and a better job than customers’ in-house security teams.

It’s a long game, but the company believes it is surfing the wave of change. “The cloud… is the only viable solution [for security-conscious companies],” Niels Provos, a distinguished Google engineer, said at last month’s Google Cloud Next conference, pointing to how a cloud platform like Google Cloud offers professionally managed infrastructure for business.

Provos said: “The homogeneity of our infrastructure, and our integrated and comprehensive stance on security, reduces complexity and allows us to deliver security [by] default and at scale.” And Google takes the job very seriously, going by the more than 700 software engineers and security engineers that it has on its payroll.

But how exactly does the cloud giant secure itself against attackers, including state-sponsored attempts to hack its private fiber networks? We put together the pieces.

Securing a Google data center

When it comes to security, Google is a firm believer in defense in depth. This is evident from the physical security features of its data centers, where multiple layers of checks ensure that only authorized personnel can enter the facility.

Only employees on a pre-authorized list, consisting of a very small fraction of all Google employees, are allowed through the gates of a Google data center campus. A second check is required before one can enter the building.

Finally, stepping into the secure corridor leading into the data hall requires clearing a biometric lock which could involve an iris scan, Joe Kava, vice president of Google’s data center operations, explained in a 2014 video.

Inside, the facility is segregated for security, with key areas protected by laser-based underfloor intrusion detection systems. Other tools in Google’s physical security arsenal include metal detectors, vehicle barriers, and the usual networked cameras, though these are monitored with video analysis software.

Within the data center, Google implements a rigorous end-to-end chain-of-custody for hard drives to ensure that every storage device is accounted for. Drives that fail or are scheduled for replacement are first checked out of the server, using a barcode scanner, and brought to a secure area for erasure.

All drives are wiped prior to disposal; drives that cannot be verified as having been securely erased are physically crushed, then shredded into pieces with an industrial wood chipper. Google has shown the process on video.

Engineered to trust nothing

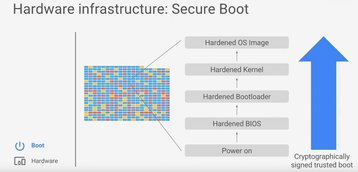

Physical features aside, Google has designed its entire infrastructure stack for security, Provos said, using cryptographic signatures to ensure that no unauthorized changes can be made without detection. This starts from low-level functionality such as the BIOS, and includes all key components of the boot process including the bootloader, kernel and the base operating system. All of these are controlled, built and hardened by Google.

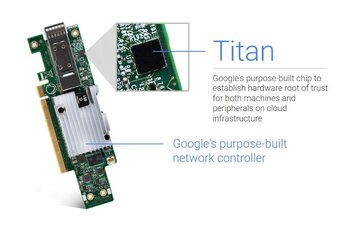

The architecture ensures that servers will only be assigned an identity if all components of the boot process can be validated. According to Provos, the root of trust for the boot chain could, depending on the servers, be contained either in a lockable firmware chip, a microcontroller running security code written by Google, or the Titan security chip which Google unveiled last month.
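
The idea of granting an identity only to a fully validated boot chain can be illustrated with a minimal sketch. It is not Google's implementation: it compares SHA-256 digests against a trusted list as a stand-in for real signature verification, and the stage names, the `trusted` table, and the identity format are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical boot stages, in the order the article lists them.
BOOT_STAGES = ["bios", "bootloader", "kernel", "base_os"]


def digest(image: bytes) -> str:
    """Return the SHA-256 hex digest of a boot image."""
    return hashlib.sha256(image).hexdigest()


def verify_boot_chain(images: dict, trusted: dict) -> bool:
    """Return True only if every stage matches its trusted digest."""
    for stage in BOOT_STAGES:
        image = images.get(stage)
        expected = trusted.get(stage)
        if image is None or expected is None:
            return False
        # compare_digest avoids leaking the mismatch position through timing.
        if not hmac.compare_digest(digest(image), expected):
            return False
    return True


def assign_identity(hostname: str, images: dict, trusted: dict):
    """Issue an identity string only for a fully validated boot chain."""
    if not verify_boot_chain(images, trusted):
        return None
    # Bind the identity to the software the machine booted with.
    measurement = hashlib.sha256(
        "".join(digest(images[s]) for s in BOOT_STAGES).encode()
    ).hexdigest()
    return f"{hostname}:{measurement[:16]}"
```

In this sketch, changing a single byte of any stage makes `assign_identity` return `None`, which mirrors the rule that a server with an unverifiable boot component gets no identity at all.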

Details about the Titan remain sparse: it is a custom security chip designed to protect against tampering at the BIOS level, and to help identify and authenticate the hardware and services running within Google. Provos said Titan is currently found on servers as well as peripherals.

“We can provision unique identities to each server in the data center that can be tied back to the hardware root, as well as the software with which the machine was booted. The machine identity is then used to authenticate API calls to and from low-level management services on the machine,” Provos explained.

Within the Google ecosystem, binaries are cryptographically signed, and all data is encrypted before it is written to disk. In addition, cryptographic keys are stored in RAM only for the moment they are needed. Remote procedure calls (RPCs) and other communications between data centers are automatically encrypted by default.

Provos noted that security is enforced at the application layer, and that all services are designed to mutually prove their identity to each other. He said: “We don’t rely on internal segmentation or firewalls as our main security mechanisms.”
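
What it means for services to mutually prove their identity can be sketched with a toy challenge-response exchange. This is an illustration under stated assumptions, not Google's protocol: it uses a shared secret and HMAC where a production system would use per-service cryptographic credentials, and the `Service` class and its method names are hypothetical.

```python
import hashlib
import hmac
import secrets


class Service:
    """A toy service holding a secret shared with its peers (an assumption)."""

    def __init__(self, name: str, shared_key: bytes):
        self.name = name
        self._key = shared_key

    def challenge(self) -> bytes:
        """Create a fresh random nonce for the peer to answer."""
        return secrets.token_bytes(16)

    def respond(self, nonce: bytes) -> bytes:
        """Prove knowledge of the key by MACing our name and the nonce."""
        return hmac.new(self._key, self.name.encode() + nonce, hashlib.sha256).digest()

    def verify(self, peer_name: str, nonce: bytes, response: bytes) -> bool:
        """Check the peer's answer to a nonce we issued."""
        expected = hmac.new(self._key, peer_name.encode() + nonce, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


def mutual_auth(a: Service, b: Service) -> bool:
    """Both sides must prove their identity before any call proceeds."""
    nonce_a, nonce_b = a.challenge(), b.challenge()
    return (a.verify(b.name, nonce_a, b.respond(nonce_a))
            and b.verify(a.name, nonce_b, a.respond(nonce_b)))
```

The point of the design is that a caller's position on the network earns it nothing: a service that cannot answer the challenge is refused even if it sits inside the same data center.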

Fighting off DDoS

Denial of service (DoS) and distributed denial of service (DDoS) attacks mean that security practices cannot be limited to simply keeping hackers out, but must also ensure that legitimate users can access particular cloud services or content. And Google is arguably at the forefront of the fight against DoS attacks.

The scale of the infrastructure at Google allows it to simply absorb less substantial DoS attempts. Google has also established multiple layers of defenses to further reduce the chance of these attacks succeeding, such as engineering the Google Front End (GFE) engine to absorb traditional strategies like SYN floods, IP fragment floods and port exhaustion attacks.

In addition, a central DoS service analyzes statistics about incoming traffic as it passes through several layers of hardware and software load balancers, Provos said, and will dynamically configure them to drop or throttle traffic associated with a DoS attack. This is repeated at the GFE level, which can offer more detailed application-level statistics about ongoing attacks.
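
A rough sketch of that feedback loop follows: load balancers report per-source traffic, a central service aggregates the reports, and it hands back drop or throttle rules. The thresholds, the report format, and the function names are assumptions made for illustration; the article does not describe how Google's service actually classifies traffic.

```python
from collections import Counter

# Hypothetical thresholds, in requests per second per source.
THROTTLE_RPS = 1_000
DROP_RPS = 10_000


def aggregate(reports):
    """Sum per-source request rates reported by each load balancer."""
    totals = Counter()
    for report in reports:
        totals.update(report)  # Counter.update adds counts per key
    return totals


def decide(totals):
    """Map each source to 'drop', 'throttle', or 'allow'."""
    rules = {}
    for source, rps in totals.items():
        if rps >= DROP_RPS:
            rules[source] = "drop"
        elif rps >= THROTTLE_RPS:
            rules[source] = "throttle"
        else:
            rules[source] = "allow"
    return rules


# Two load balancers each see part of the traffic from the same source.
reports = [
    {"198.51.100.7": 6_000, "203.0.113.9": 400},
    {"198.51.100.7": 5_000, "192.0.2.1": 1_200},
]
rules = decide(aggregate(reports))
```

Here no single load balancer sees enough traffic from `198.51.100.7` to cross the drop threshold, but the aggregated view does, which is the argument for analyzing statistics centrally.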

Of course, any DoS defenses must be backed by adequate network and server capacity. The former is why, nine years ago, Google became the first non-telecommunications company to lay a submarine fiber cable, Provos noted.

The company continues to work to increase the bandwidth within its data centers: “In our latest generation Jupiter network, we have increased the capacity of a single data center network more than 100 times. Our Jupiter fabric can deliver more than one Petabit per second of total bisection bandwidth. The capacity will be enough for 100,000 servers to talk to each other at 10Gbps each [and to] mitigate DoS attacks.”
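
The figures in the quote are consistent with each other, as a quick back-of-the-envelope check shows. It uses decimal units and only the numbers Provos gave.

```python
SERVERS = 100_000
PER_SERVER_GBPS = 10
GBPS_PER_PBPS = 1_000_000  # 1 petabit = 10^6 gigabits (decimal units)

total_gbps = SERVERS * PER_SERVER_GBPS
total_pbps = total_gbps / GBPS_PER_PBPS
print(f"{total_gbps:,} Gbps = {total_pbps:g} Pbps")
# prints "1,000,000 Gbps = 1 Pbps"
```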

A slice of the pie

Google is making a serious effort to convince cloud users to make the switch to its cloud, which it says is built on the same technologies that power various Google services. And there is no question that cloud juggernaut Amazon Web Services (AWS) is its main competitor. During the conference, Google executives took jabs at AWS by highlighting how users are forced to pay upfront to get better prices, have usage fees rounded up to the hour, and can choose from a comparatively limited range of compute instances.

Google Cloud touted its per-minute billing, a sustained-use discount that offers lower rates for instances that run longer, and custom instance types with the ability to customize just about every aspect of virtual machines.
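
The difference between per-minute billing and fees rounded up to the hour is easiest to see with a small calculation. The hourly rate below is a made-up figure, and the sketch ignores any minimum charge or discount either provider may apply.

```python
import math

HOURLY_RATE = 0.10  # hypothetical price in dollars per instance-hour


def cost_hourly_rounded(minutes: int) -> float:
    """Bill whole hours, rounding any partial hour up."""
    return math.ceil(minutes / 60) * HOURLY_RATE


def cost_per_minute(minutes: int) -> float:
    """Bill exactly the minutes used."""
    return minutes * HOURLY_RATE / 60


# A job that runs for 61 minutes.
rounded = cost_hourly_rounded(61)  # billed as two full hours
exact = cost_per_minute(61)        # billed as 61 minutes
```

Under these assumptions, the 61-minute job is billed for 120 minutes with hourly rounding, almost double the per-minute charge. The gap narrows as jobs run longer, so it matters most for short-lived workloads.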

Some people have suggested Google is not sensitive to the needs of enterprises, and has a penchant for jumping ahead too quickly. Diane Greene, vice president of Google Cloud, denied this: “We are a full-on enterprise company. And that means providing backwards compatibility,” she said.

For now, the battle in Southeast Asia is heating up. As we reported last year, Google hired long-time AWS executive Rick Harshman to helm Google Cloud in the Asia Pacific region. In Singapore, the second Google data center is going live, and is expected to anchor the launch of Google Cloud services here.