Nvidia has officially begun shipping DGX H100 systems to customers around the world.

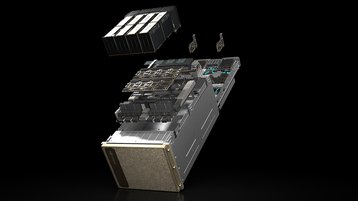

The DGX H100 features eight H100 Tensor Core GPUs connected over NVLink, along with dual Intel Xeon Platinum 8480C processors, 2TB of system memory, and 30TB of NVMe SSD storage.

Among the early customers detailed by Nvidia is the Boston Dynamics AI Institute, which will use a DGX H100 to simulate robots.

Startup Scissero will use a DGX H100 for a GPT-powered chatbot for legal services, while the Johns Hopkins University Applied Physics Laboratory will use it to train large language models. The KTH Royal Institute of Technology in Sweden will also use one for research.

Japanese Internet services company CyberAgent will develop smart digital ads and celebrity avatars, and Ecuadorian telco Telconet will build intelligent video analytics. DeepL plans to use 'several' DGX H100 systems for its translation platform.

We spoke to Nvidia's head of AI and data centers, as well as AMD, Intel, Google, Microsoft, AWS, and more in the latest issue of DCD Magazine about what generative AI means for compute. Read it for free today.