The global AI market is set to contribute as much as $15.7 trillion to the world economy by the end of the decade. Leaders across industries are betting on its potential to streamline operations, improve productivity, generate revenue, and support innovation.

However, AI is an energy-intensive technology. According to one estimate, the servers that power AI may use as much electricity over the course of a year as a country the size of Sweden or Argentina. The high-performance computing (HPC) environments required to run AI workloads use 300 to 1,000 times more power than traditional workloads, producing far more heat as a result. That means data centers must consume even more energy to properly cool servers so they operate reliably.

Unlocking AI’s full potential may require organizations to make significant concessions on their ESG goals unless the industry drastically reduces AI’s environmental footprint. This means all data center operators, including both in-house teams and third-party partners, must adopt innovative cooling capabilities that can simultaneously improve energy efficiency and reduce carbon emissions.

Three strategies to improve cooling efficiency while maintaining high performance

The need for HPC capabilities is not unique to AI. Grid computing, clustering, and large-scale data processing are among the technologies that depend on HPC to facilitate distributed workloads, coordinate complex tasks, and handle immense amounts of data across multiple systems.

However, with the rapid rise of AI, the demand for HPC resources has surged, intensifying the need for advanced infrastructure, energy efficiency, and sustainable solutions to manage the associated power and cooling requirements. In particular, the large graphics processing units (GPUs) required to support complex AI models and deep learning algorithms generate more heat than traditional CPUs, creating new challenges for data center design and operation. At the same time, deployments still need to accommodate storage and networking equipment that may not require liquid cooling.

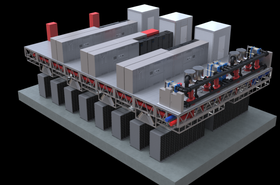

These challenges require cutting-edge cooling technologies and energy-efficient systems to ensure optimal performance without compromising sustainability goals. For best results, data centers will need to adopt a combination of air and liquid cooling solutions that can flex to meet the specific requirements of each deployment:

Modernized CRAC technology

Traditional computer room air conditioning (CRAC) systems rely on centralized air distribution, where cool air is blown through the entire room from a limited number of points. This method works well for lower-density setups where heat dissipation is relatively uniform across servers, but it struggles to meet the needs of high-density environments filled with heat-intensive GPU-based systems.

In contrast, modern CRAC systems use sophisticated cooling technologies like variable speed fans and economizers to dynamically control cooling throughout the data center. This method targets hot spots, adjusting airflow in real time to reflect actual environmental conditions. As a result, modern CRAC systems are more energy efficient than their predecessors, providing a scalable solution designed for fluctuating workloads.
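To illustrate why variable speed fans save energy, the sketch below applies the fan affinity laws, under which airflow scales roughly linearly with fan speed while fan power scales with the cube of speed. The function name and the 10 kW rated fan power are illustrative assumptions, not figures from any specific CRAC product, and real equipment will deviate from this idealized relationship.

```python
def fan_power_kw(rated_power_kw: float, speed_fraction: float) -> float:
    """Idealized fan affinity law: power scales with the cube of fan speed."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return rated_power_kw * speed_fraction ** 3


if __name__ == "__main__":
    rated_kw = 10.0  # hypothetical fan power at full speed (assumption)
    for fraction in (1.0, 0.8, 0.5):
        print(f"{fraction:.0%} speed: {fan_power_kw(rated_kw, fraction):.2f} kW")
```

Under this idealization, a fan running at 80% speed draws roughly half of its full-speed power, which is why matching airflow to actual conditions can reduce energy use so sharply.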

Fungible air delivery

Data centers that use fungible air delivery systems can customize cooling resources at the room, row, and rack level. These tailored solutions support both performance and sustainability requirements.

- At the room level: Modular cooling can be strategically placed to direct air where it's needed most. For example, airflow might be adjusted when the room layout changes or new equipment is installed. Techniques like hot and cold aisle containment (arranging server racks in alternating rows so that cold-air intakes face one aisle and hot exhaust faces the next, with the aisles physically separated) also help prevent hot and cold air from mixing, allowing for more efficient cooling.

- At the row level: Dedicated cooling units are integrated into each row of cabinets to efficiently deliver more air to high-density areas. These units can be repositioned or adjusted as row configurations change.

- At the rack level: Direct-to-rack cooling provides more targeted and efficient air delivery. While incorporating rack-level units can increase costs and complexity, it significantly reduces the temperature in high-heat areas.

Liquid cooling technology

The significant thermal output of advanced AI systems is difficult to cool with air circulation alone. Liquid cooling works in tandem with air cooling to remove heat from high-density racks, preventing power- and heat-related failures. The industry generally sees 30-35 kW per rack as the crossover point where liquid cooling makes more sense and can offer a lower total cost of ownership (TCO). However, many data center environments, like Flexential’s, can support higher-density air-cooled racks.

Now, with the advent of extreme-density, pre-integrated racks that support upwards of 300 kW (a number that keeps climbing), the need for advanced cooling technologies continues to grow.
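A rough sketch of why air cooling runs out of headroom as density rises: the airflow needed to carry away a rack's heat grows linearly with its power draw. The example uses the standard sensible-heat relation (airflow = power / (air density × specific heat × air temperature rise)) together with the 30-35 kW crossover mentioned above. The air properties, the 12 K temperature rise, the choice of 35 kW as the threshold, and all function names are illustrative assumptions rather than design guidance.

```python
AIR_DENSITY_KG_M3 = 1.2           # approximate air density at room conditions (assumption)
AIR_SPECIFIC_HEAT_J_KG_K = 1005   # approximate specific heat of air (assumption)
LIQUID_CROSSOVER_KW = 35.0        # assumed threshold: upper end of the 30-35 kW range


def required_airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow (m^3/s) needed to remove rack_kw of heat at a given air temperature rise."""
    if rack_kw < 0 or delta_t_k <= 0:
        raise ValueError("rack_kw must be >= 0 and delta_t_k must be > 0")
    watts = rack_kw * 1000.0
    return watts / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


def suggested_cooling(rack_kw: float) -> str:
    """Rule-of-thumb label only; actual limits depend on the facility."""
    return "liquid-assisted" if rack_kw > LIQUID_CROSSOVER_KW else "air"


if __name__ == "__main__":
    for kw in (10, 35, 300):  # hypothetical rack densities
        flow = required_airflow_m3s(kw)
        print(f"{kw:>3} kW rack: {flow:.2f} m^3/s of air, suggested: {suggested_cooling(kw)}")
```

The threshold here is only a rule of thumb. As noted above, some facilities support air-cooled racks at higher densities, so a real decision would also weigh containment, supply temperature, and cost.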

Liquid cooling involves circulating a cooling fluid, typically water or a water-based solution, through a closed-loop system to absorb and dissipate heat. Because the water is continuously recycled rather than consumed, the loop can deliver a water usage effectiveness (WUE) of zero. Data centers can also supplement this approach with other liquid cooling techniques to more efficiently support AI’s demanding processing requirements.
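For reference, WUE is commonly defined as annual site water consumption in liters divided by IT equipment energy in kilowatt-hours. The sketch below applies that ratio. The function name and the sample figures are hypothetical, chosen only to show how a loop that consumes no water yields a WUE of zero.

```python
def water_usage_effectiveness(annual_water_liters: float, it_energy_kwh: float) -> float:
    """WUE in liters per kWh: annual water consumed divided by IT equipment energy."""
    if annual_water_liters < 0:
        raise ValueError("annual_water_liters must be >= 0")
    if it_energy_kwh <= 0:
        raise ValueError("it_energy_kwh must be > 0")
    return annual_water_liters / it_energy_kwh


if __name__ == "__main__":
    it_energy_kwh = 8_760_000.0  # hypothetical: 1 MW of IT load running for one year
    # Closed loop that recirculates its water and consumes none (assumption).
    print(water_usage_effectiveness(0.0, it_energy_kwh))
    # Hypothetical evaporative system consuming 15.768 million liters per year.
    print(water_usage_effectiveness(15_768_000.0, it_energy_kwh))
```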

For example, direct-to-chip cooling puts cold plates directly in contact with GPUs and CPUs, while immersion cooling involves submerging entire server components in a non-conductive cooling fluid. Various technologies exist to dissipate heat, such as liquid-to-liquid (part of a closed-loop system) or liquid-to-air. By using a mix of these technologies, data centers can flex to maximize efficiency for any type of deployment.

Additionally, cooling distribution units (CDUs) support various hardware configurations. Some require an upstream liquid supply, while others are self-contained and suited to retrofits. As AI deployments expand to include edge inference, supporting these different deployment architectures becomes increasingly important.

Aligning AI deployments and ESG initiatives is possible

By moving beyond one-size-fits-all cooling solutions, data centers can more effectively balance the technical requirements of AI deployments with sustainability priorities. This approach offers flexibility to cool the hottest areas of the data center no matter the server configuration, achieving optimal temperatures throughout the facility while maximizing energy efficiency.

With innovative cooling techniques and a multifaceted approach, data centers can continue to deliver the reliability and performance needed for today's demanding AI workloads — and set the stage for widespread AI adoption grounded in ESG best practices.