Software-Defined Networking (SDN) excited the IT world four years ago with the promise that it would revolutionize how networks were built. The new utopia promised an end to stodgy, inflexible protocols and device-by-device configuration, replacing them with centralized control, automated provisioning, and dynamic policies dictated by applications. For most applications, standard Layer-2 and Layer-3 (L2/L3) protocols work great: they are mature, reliable, and, most importantly, interoperable. However, for some applications it makes sense to apply SDN to engineer network traffic, and beyond the added functionality, SDN can scale better than traditional methods.

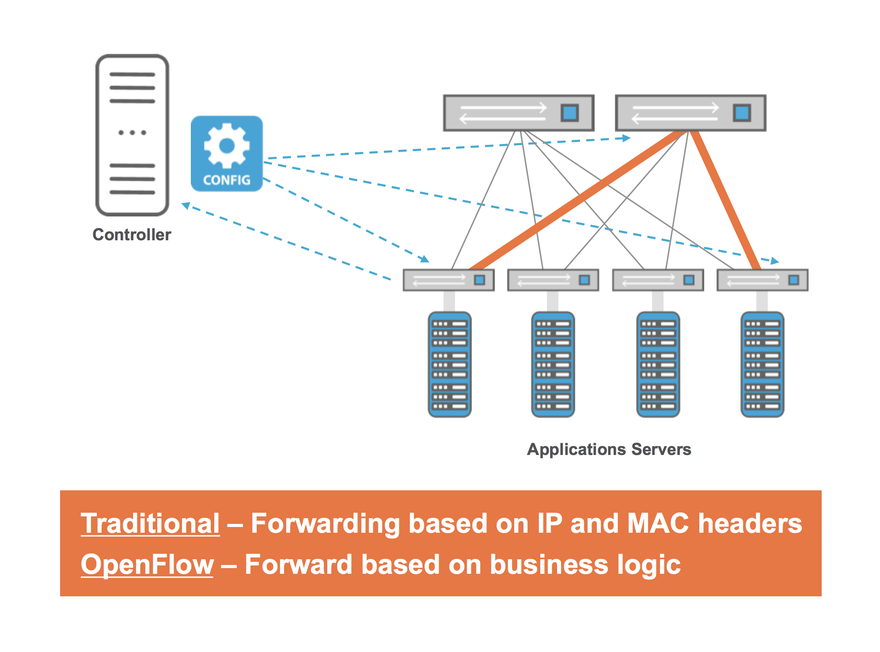

Ideally, you would implement SDN and L2/L3 networking in concert. CrossFlow integrates SDN into a traditional L2/L3 infrastructure. For an SDN framework to work seamlessly with traditional infrastructure, CrossFlow provides interfaces to traditional L2/L3 protocols (BGP, OSPF, etc.) while exposing the forwarding information base (FIB) to the SDN application. To a traditional network switch, CrossFlow-enabled hardware looks like a traditional endpoint: it terminates the routing sessions, learns routes, and stores them in its forwarding tables. To an SDN network, however, the same box looks like an OpenFlow switch whose FIB can be influenced by custom logic, for example logic derived from a business decision.
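The dual role described above can be pictured as one forwarding table fed from two sources. The Python sketch below is a hypothetical model, not CrossFlow's API. It merges routes learned from BGP or OSPF with entries installed by an SDN application. An assumed precedence lets the SDN entry win for the same prefix, and lookups use longest-prefix match. The `Route`, `HybridFib`, and `PRECEDENCE` names are illustrative.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: ipaddress.IPv4Network
    next_hop: str
    source: str  # "bgp", "ospf", or "sdn" (illustrative labels)


# Hypothetical precedence: lower wins, so an SDN entry overrides a
# protocol-learned route for the same prefix.
PRECEDENCE = {"sdn": 0, "ospf": 1, "bgp": 2}


class HybridFib:
    """Toy FIB merging protocol-learned routes with SDN overrides."""

    def __init__(self) -> None:
        self._entries: dict[ipaddress.IPv4Network, Route] = {}

    def install(self, route: Route) -> None:
        # Keep only the highest-precedence route per prefix; an
        # equal-precedence install replaces the old entry (a route update).
        current = self._entries.get(route.prefix)
        if current is None or PRECEDENCE[route.source] <= PRECEDENCE[current.source]:
            self._entries[route.prefix] = route

    def lookup(self, address: str) -> Route | None:
        # Longest-prefix match over the merged table.
        ip = ipaddress.IPv4Address(address)
        matches = [r for p, r in self._entries.items() if ip in p]
        return max(matches, key=lambda r: r.prefix.prefixlen, default=None)


fib = HybridFib()
fib.install(Route(ipaddress.ip_network("10.0.0.0/8"), "192.0.2.1", "bgp"))
fib.install(Route(ipaddress.ip_network("10.1.0.0/16"), "192.0.2.2", "ospf"))
fib.install(Route(ipaddress.ip_network("10.1.0.0/16"), "192.0.2.9", "sdn"))
print(fib.lookup("10.1.2.3").next_hop)  # 192.0.2.9 (SDN override)
print(fib.lookup("10.2.0.1").next_hop)  # 192.0.2.1 (BGP route)
```

Keeping only the winning route per prefix keeps the sketch short. A real router would retain every candidate, so that withdrawing the SDN override restores the protocol-learned route.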

Let’s look at three use cases where CrossFlow allows users to layer SDN on top of L2/L3 networking.

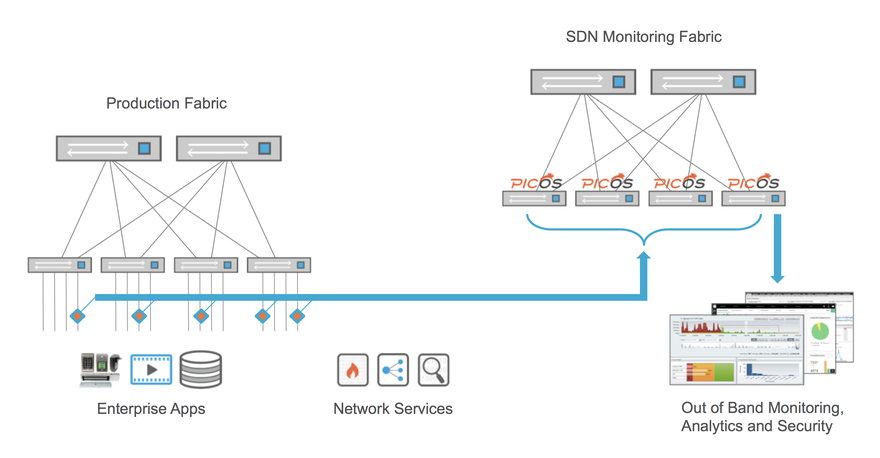

Network packet brokers

A network packet broker lets you tap traffic and redirect a filtered set of packets to monitoring tools such as packet analysis or security applications. Network packet broker specialists like Gigamon offer boxes specialized for deep packet inspection that feed traffic to a central location hosting the analytic tools. These boxes are expensive: their cost is comparable to that of the forwarding box itself. One way to scale the number of tapping points without a linear increase in cost is to use white boxes to offload functions such as filtering, sampling, popping unwanted headers, counting packets, or mirroring. SDN and OpenFlow reduce both the cost of scaling and the complexity of deploying network packet brokers.

SDN gives you a centralized control plane, which lets you manage scale and grow the network layer through a network policy.

You now have the flexibility to choose between hardware vendors or ASIC architectures to accelerate deployments without sacrificing functionality. You can also tune your filter application to the use case you want to enable. For example, if you need a box that scales to a large number of flows, one of the newer-generation chips with bigger tables gives you the same functionality. A single controller can then manage all of these edge traffic-monitoring devices, so you don't have to control them individually. With OpenFlow, you no longer need to manage the configuration on each device.
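The following minimal Python sketch makes the "one policy, many boxes" idea concrete. It does not use a real OpenFlow library. `FilterRule`, `WhiteBox`, and `Controller` are hypothetical stand-ins, and match-field names such as `ip_proto` and `tcp_dst` are illustrative. A table miss is assumed to drop the packet. The controller sorts one filter policy by priority and pushes the same table to every tap box, which then steers matching packets to a tool port.

```python
from dataclasses import dataclass, field


@dataclass
class FilterRule:
    priority: int
    match: dict     # illustrative field names, e.g. {"ip_proto": 6, "tcp_dst": 443}
    actions: tuple  # e.g. ("output:tool1",) or ("drop",)


@dataclass
class WhiteBox:
    name: str
    table: list = field(default_factory=list)

    def apply(self, packet: dict) -> tuple:
        # Table is kept in descending priority order; the first match wins.
        for rule in self.table:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.actions
        return ("drop",)  # assumed table-miss behavior


class Controller:
    """Pushes one filter policy to every tap box instead of configuring each."""

    def __init__(self, boxes):
        self.boxes = list(boxes)

    def push_policy(self, rules):
        ordered = sorted(rules, key=lambda r: r.priority, reverse=True)
        for box in self.boxes:
            box.table = list(ordered)


policy = [
    FilterRule(100, {"ip_proto": 6, "tcp_dst": 443}, ("output:tool1",)),
    FilterRule(50, {"ip_proto": 17}, ("output:tool2",)),
]
boxes = [WhiteBox(f"tap{i}") for i in range(3)]
Controller(boxes).push_policy(policy)
print(boxes[2].apply({"ip_proto": 6, "tcp_dst": 443}))  # ('output:tool1',)
print(boxes[0].apply({"ip_proto": 1}))                   # ('drop',)
```

Changing the policy means one `push_policy` call rather than a login to each box. This is the operational saving the paragraph above describes.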

Elephant and mice flows

One application that can use the analytics generated in the example above is traffic engineering. Elephant and mice flows are a good example of traffic that benefits from it. You have latency-sensitive traffic such as audio and video (mice flows) and store-and-forward or buffered traffic such as storage (elephant flows). When you put these different kinds of traffic on the same switch, the switch has to take on different characteristics, or personalities, depending on the traffic it is processing.
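Telling elephants from mice usually starts from flow statistics the controller already collects. The sketch below assumes a per-interval byte count for each flow and a hypothetical 10 MiB threshold. The threshold and the `FlowStats` and `classify` names are illustrative and not part of any product.

```python
from dataclasses import dataclass

# Hypothetical threshold: a flow moving more than 10 MiB in one polling
# interval is treated as an elephant. Real deployments would tune this.
ELEPHANT_BYTES = 10 * 1024 * 1024


@dataclass
class FlowStats:
    flow_id: str
    byte_count: int  # bytes observed during the last polling interval


def classify(flows):
    """Return (elephants, mice) as lists of flow IDs."""
    elephants, mice = [], []
    for f in flows:
        if f.byte_count > ELEPHANT_BYTES:
            elephants.append(f.flow_id)
        else:
            mice.append(f.flow_id)
    return elephants, mice


flows = [
    FlowStats("storage-replication", 512 * 1024 * 1024),
    FlowStats("voip-call", 64 * 1024),
    FlowStats("video-conference", 2 * 1024 * 1024),
]
print(classify(flows))
# (['storage-replication'], ['voip-call', 'video-conference'])
```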

For example, video traffic performs well when latency and jitter through the box are very low. Storage traffic, on the other hand, performs best with the largest MTU the box can transport. These requirements call for contrasting buffer characteristics in real time. The only way to engineer this traffic is to have logic that predetermines the paths for latency-sensitive traffic and makes sure they don't share any links with the storage traffic. Segregating the topology this way requires an SDN controller with a complete view of the network and its links. The controller can use a path computation element (PCE) to determine the best path with the required characteristics.
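A constrained shortest-path computation can approximate the PCE's job in this scenario. This Python sketch is a toy stand-in for a real PCE and assumes a topology dictionary of link latencies in milliseconds. It places the latency-sensitive flow on the lowest-latency path with Dijkstra's algorithm. It then computes the storage path with those links excluded, so the two classes never share a link. Node names and latencies are made up for illustration.

```python
import heapq


def shortest_path(graph, src, dst, excluded=frozenset()):
    """Dijkstra over link latency, skipping links listed in `excluded`.

    graph maps node -> {neighbor: latency_ms}. Links are undirected, so each
    excluded link is a frozenset of its two endpoints.
    """
    dist = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for nbr, latency in graph[node].items():
            if frozenset((node, nbr)) in excluded:
                continue
            nd = d + latency
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in visited:
        return None  # no path satisfies the constraint
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def links_of(path):
    """Undirected links used by a path."""
    return {frozenset(hop) for hop in zip(path, path[1:])}


topology = {
    "A": {"B": 1, "C": 5},
    "B": {"A": 1, "D": 1},
    "C": {"A": 5, "D": 5},
    "D": {"B": 1, "C": 5},
}
video = shortest_path(topology, "A", "D")  # latency-sensitive flow placed first
storage = shortest_path(topology, "A", "D", excluded=links_of(video))
print(video, storage)  # ['A', 'B', 'D'] ['A', 'C', 'D']
```

Placing the latency-sensitive flow first gives it the best path. The storage flow then takes whatever disjoint path remains, or `None` if the topology has no such path.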

Network convergence

Another interesting application of analytics is network convergence. Network convergence is typically assumed to be about managing the forwarding tables in your infrastructure to recover from link failures. However, many more factors must be considered when dealing with traffic that requires specific characteristics. The voice, video, and storage example above is a good starting point. Each traffic type may require rerouting, buffer modification, workload consolidation, and so on, and each of these can cause network congestion in a different way.

Link failures are one of the simplest reasons to drive network convergence. Buffer congestion or workload movement can likewise make the network unusable if no proper policy handles the traffic requirements. In a traditional network, the answer is to develop yet another protocol that monitors the network topology and its characteristics in real time and provides a network-centric way to resolve convergence. This leads to a proprietary solution for a simple problem, and such a solution cannot be standardized across all hardware.

OpenFlow takes the route of simplifying and standardizing the data plane so that even a custom application can run on the network without interoperability issues. The key is to understand the components of the convergence vector, such as links, bandwidth utilization, buffer availability, workload discovery, and more if needed. Once this vector is understood, a simple approach such as Application-Layer Traffic Optimization (ALTO), or more complex custom logic, can meet the convergence SLAs in a predictable manner.
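One way to read "convergence vector" is as a per-link record of the state the controller watches. The Python sketch below is an illustration built on assumptions. It is not ALTO and not a product algorithm, and it omits workload discovery. Each link carries an up/down flag, bandwidth utilization, and a free-buffer fraction. A path is unusable if any link is down or above a hypothetical utilization cap. Otherwise it is scored with hypothetical weights, and the cheapest candidate wins.

```python
import math
from dataclasses import dataclass


@dataclass
class LinkState:
    up: bool
    utilization: float  # fraction of link bandwidth in use, 0.0-1.0
    buffer_free: float  # fraction of egress buffer available, 0.0-1.0


# Hypothetical weights and SLA cap; a real policy would derive these from
# the traffic class being converged.
W_UTIL = 1.0
W_BUFFER = 2.0
MAX_UTILIZATION = 0.8


def path_cost(path, links):
    """Score a path from its per-link state; math.inf marks it unusable."""
    cost = 0.0
    for hop in zip(path, path[1:]):
        state = links[hop]
        if not state.up or state.utilization > MAX_UTILIZATION:
            return math.inf
        cost += W_UTIL * state.utilization + W_BUFFER * (1.0 - state.buffer_free)
    return cost


def select_path(candidates, links):
    """Return the cheapest usable candidate path, or None if none qualifies."""
    scored = [(path_cost(p, links), p) for p in candidates]
    usable = [(c, p) for c, p in scored if c < math.inf]
    return min(usable, key=lambda cp: cp[0])[1] if usable else None


links = {
    ("A", "B"): LinkState(up=True, utilization=0.3, buffer_free=0.9),
    ("B", "D"): LinkState(up=False, utilization=0.0, buffer_free=1.0),  # failed
    ("A", "C"): LinkState(up=True, utilization=0.5, buffer_free=0.6),
    ("C", "D"): LinkState(up=True, utilization=0.2, buffer_free=0.8),
}
candidates = [["A", "B", "D"], ["A", "C", "D"]]
print(select_path(candidates, links))  # ['A', 'C', 'D']
```

A link failure is just one input here. The same function reroutes when a link breaches the utilization cap or its buffers fill up, which is the broader notion of convergence the text argues for.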

Proprietary hardware is known for these capabilities, but an OpenFlow controller can directly influence the relevant components, whereas proprietary devices don't expose an interface for influencing a forwarding entry. Proprietary network devices might offer this feature through a BGP or OSPF knob. However, if you want to drive convergence from logic other than a network protocol, you have no way of doing that. SDN allows you to do it.

CrossFlow is not about forklifting out the traditional network in favor of SDN; it's about using what already exists and having the choice of policy-based networking when it's useful. For example, every network stack includes an ARP responder, so you don't need to build that function on top of an OpenFlow controller to enable your application. You can use that part of the L2/L3 stack on the switch and handle ARP locally rather than adopting a completely centralized model. This can pay off because, in a scale-out model, centralizing everything creates a congestion point in the control channel toward the controller, which might not be optimal. Using the local capabilities of the box means you don't have to build everything on top of the SDN controller.
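The trade-off between local handling and centralization can be sketched as a dispatch decision on the switch. In this hypothetical Python model, the on-box L2/L3 stack answers ARP. Only the first packet of a flow in a policy-controlled traffic class goes to the controller, on the assumption that the controller then installs a rule. Everything else is forwarded in hardware. The class sets, the packet fields, and the rule-installation behavior are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical split of responsibilities on a hybrid switch; the packet
# fields ("eth_type", "traffic_class", "flow_id") are illustrative.
LOCAL_PROTOCOLS = {"arp"}              # answered by the on-box L2/L3 stack
POLICY_CLASSES = {"storage", "video"}  # steered by the SDN controller


def dispatch(packet, installed_flows, stats):
    """Decide where a packet is handled and count control-channel load."""
    if packet.get("eth_type") in LOCAL_PROTOCOLS:
        stats["local"] += 1
        return "local-stack"
    flow = packet.get("flow_id")
    if packet.get("traffic_class") in POLICY_CLASSES and flow not in installed_flows:
        stats["controller"] += 1
        installed_flows.add(flow)  # assume the controller installs a rule
        return "controller"
    stats["hardware"] += 1
    return "hardware"  # matched an installed rule or the local FIB


packets = [{"eth_type": "arp"}] * 100 + [
    {"eth_type": "ipv4", "traffic_class": "storage", "flow_id": "s1"}
] * 50
stats, installed = Counter(), set()
for pkt in packets:
    dispatch(pkt, installed, stats)
print(stats["local"], stats["controller"], stats["hardware"])  # 100 1 49
```

In a fully centralized model, the 100 ARP packets would also cross the control channel. Here only one packet does.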

While SDN is not a be-all, end-all solution for every networking problem, it is the better choice in certain cases. CrossFlow allows users to integrate SDN and L2/L3 networking on the same switches so that SDN capabilities are available when needed.

Sudhir Modali leads the SDN strategy at Pica8.