Servers have been installed in 19in racks since before data centers existed. The technology to cool the air in buildings has been developed to a high standard, and electrical power distribution is a very mature technology, which has made only incremental changes in the last few years.

Given all that, you might be forgiven for thinking that the design of data center hardware is approaching a standard, and future changes will be mere tweaks. However, you would be wrong. There are plenty of radical approaches to racks, cooling and power distribution around. Some have been promoted for years, others have come out of the blue.

Not all of them will gain traction.

This feature appeared in our special colo design supplement.

Rack revolution

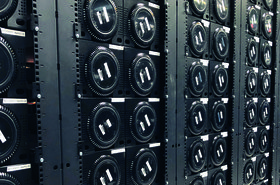

For someone used to racks in rows, a visit to one of French provider OVH’s cloud data centers is a disorienting experience. OVH wants to be the major European cloud provider - it combines VMware- and OpenStack-based platform-as-a-service (PaaS) public clouds and hosts corporate private clouds for customers - but it departs from the industry’s standard fare. Instead of standing vertically, OVH’s racks lie horizontally.

Close to its French facilities in Roubaix, it has a small factory, which makes its own rack frames. These “Hori-Racks” are the same size as normal 48U racks, but configured completely differently. Inside them, three small 16U racks sit side-by-side. The factory pre-populates these “Hori-Racks” with servers before they are shipped to OVH facilities, mostly in France, but some in farther corners of the world.

The reason for the horizontal approach seems to be speed of manufacturing and ease of distribution: “It’s quicker to deploy them in the data centers with a forklift, and stack them,” said OVH chief industrial officer François Stérin.

Racks are built and tested speedily, with a just-in-time approach that minimizes inventory. Three staff can work side by side to load and test the hardware, and then a forklift, truck or trailer (or ship) can move the rack to its destination, in Gravelines, Strasbourg or Singapore. In the building, up to three racks are stacked on top of each other, giving the same density of servers as conventional racking.

OVH can indulge its passion for exotic hardware because it sells services at the PaaS level - it doesn’t colocate customers’ hardware. Also, it takes a novel approach to cooling (see later) which allows it flexibility with the building itself.

You don’t have to go as far as OVH in changing racks: there are plenty of other people suggesting new ways to build them. The most obvious example is the open source hardware groups like Open Compute Project (OCP), started by Facebook, and Open19, started by LinkedIn.

Both operate as “buyers’ clubs,” sharing custom designs for hardware so that multiple customers can get the benefits of a big order for those tweaks - generally aimed at simplifying the kit, and reducing the amount of wasted material and energy in the end product. Traditional racks, and IT hardware, it turns out, include a lot of wasted material, from unnecessary power equipment down to manufacturers’ brand labels.

OCP was launched by Facebook in 2011 to develop and share standardized OEM designs for racks and other hardware. The group’s raison d’être was that webscale companies could demand their own customized hardware designs from suppliers, because of their large scale. By sharing these designs more widely it would be possible to spread the benefits to smaller players - while gathering suggestions for improved designs from them.

While the OCP’s founders all addressed giant webscale players, there are signs that the ideas have spread further, into colocation spaces. Here, the provider doesn’t have ultimate control of the hardware in the space, so it can’t deliver the monolithic data center architectures envisaged by OCP, but some customers are picking up on the idea, and the OCP has issued facility guidelines on making a place “OCP ready,” meaning that OCP racks and OCP hardware are welcome and supported there.

OCP put forward a new design of racks, which packs more hardware into the same space as a conventional rack. By using more of the space within the rack, it allows equipment that is 21in wide, instead of the usual 19in. It also allows taller kit, with an OpenU measuring 48mm in height, compared to a regular rack unit of 44.5mm.

The design also uses DC power, distributed via a bus bar on the back of the rack. This approach appeals to monolithic webscale users such as Facebook, as it allows the data center to do away with the multiple power supplies within the IT kit. Instead of distributing AC power, and rectifying it to DC in each individual box, it’s done in one place.

Open Rack version 1 used 12V power. Version 2 also allowed 48V power, and added the option of lithium-ion batteries within the racks, as a kind of distributed UPS system.

That was too radical for some, and in 2016 LinkedIn launched the Open19 group, which proposed mass market simplifications without breaking the 19in paradigm. Open19 racks are divided into cages with a simplified power distribution system, similar to the proprietary blade servers offered by hardware vendors. The foundation also shared specifications for network switches developed by LinkedIn.

“We looked at the 21 inch Open Rack, and we said no, this needs to fit any 19 inch rack,” said Open19 founder Yuval Bachar, launching the group at DCD Webscale in 2016. “We wanted to create a cost reduction of 50 percent in common elements like the PDU, power and the rack itself. We actually achieved 65 percent.”

Just as it launched Open19, LinkedIn was bought by Microsoft, which was a major backer of OCP, and a big user of OCP-standard equipment in data centers for its Azure cloud. Microsoft offered webscale technology to OCP, such as in-rack lithium-ion batteries to provide local power continuity for the IT kit, potentially replacing the facility UPS.

Although the LinkedIn purchase went through, OCP and Open19 have continued in parallel, with OCP catering for giant data centers, and Open19 aiming at smaller facilities used by smaller companies that nevertheless, like LinkedIn, are running their own data centers. Latterly, Open19 has been focusing on Edge deployments.

In July 2019, however, LinkedIn announced it no longer planned to run its own data centers, and would move all its workloads over to the public cloud - obviously enough using its parent Microsoft’s Azure cloud.

Also this year, LinkedIn announced that its Open19 technology specifications will be contributed to OCP. It’s possible that OCP and Open19 specifications may merge in future, but it is too early to say. Even if LinkedIn no longer needs it, the group has more than 25 other members.

For webscale facilities, OCP is pushing ahead with a third version of the OCP Rack, backed by Microsoft and Facebook, and it seems to be driven by increasing power density demanded by AI and machine learning.

“At the component level, we are seeing power densities of a variety of processors and networking chips that will be beyond the ability of air to cool in the near future,” said the Facebook blog announcing OCP Rack v3. “At the system level, AI hardware solutions will continue to drive higher power densities.”

The new version aims to standardize manifolds for circulating liquid coolant within the racks, and also heat exchangers for cabinet doors, and includes the option of fully immersed systems. It’s not clear what the detailed specifications are but they will emerge from OCP’s Rack and Power project, and its Advanced Cooling Solutions sub-project.

Liquid cooling

For the last couple of decades, liquid cooling has shown huge promise. Liquid has a much higher ability to capture and remove heat than air, but moving liquid through the hardware in a rack is a big change to existing practices. So liquid has remained resolutely on the list of exotic technologies which aren’t actually worth the extra cost and headache.

Below 20kW per rack, air does the job cost effectively, and there is no need to bring liquid into the rack. Power densities are still generally well below that figure, so most data centers can easily be built without liquid cooling. However, there are two possibilities pushing liquid cooling to the fore.

Firstly, GPUs and other specialized hardware for AI and the like could drive the power density upwards.

Secondly, for those who implement liquid cooling there are other benefits. Once it’s implemented, liquid cooling opens up a lot of flexibility for the facility. Air-cooled racks are part of a system which necessarily includes air conditioning, air handling and containment - up to and including the walls and floors of the whole building.

Liquid cooled racks just need an umbilical connection, and can be stood separately, on a cement floor, in a carpeted space, or in a small cabinet.

This can be difficult to present in a retail colocation space, because it affects the IT equipment. So unless the end customer specifically needs liquid cooling, it’s not going to happen there. But it does play well to the increasing flexibility of data centers, where the provider has control of the hardware, and there isn’t a building-level containment system.

Small Edge facilities are often micro data centers without the resources of a full data center behind them. Other data centers are being built inside repurposed buildings, often in small increments. A liquid cooling system can fit these requirements well.

Early mainframes were water-cooled, but in the modern era, liquid cooling has emerged from various sources.

Some firms, including Asperitas, Submer and GRC, completely immerse the racks in a tub of inert liquid. No energy is required for cooling, but maintenance is complicated because the rack design is completely changed, and servers and switches must be lifted from the tub and drained before any hardware modifications. Iceotope, which now has backing from Schneider, has a system which immerses components in trays within the racks.

Others offer direct circulation, piping liquid through the heatsinks of energy-hungry components. This was pioneered by gamers who wanted to overclock their computers, and developed rigs to remove the extra heat produced. Firms like CoolIT developed circulation systems for business equipment in racks, but they have been niche products aimed particularly at supercomputers. They require changes to the racks, and introduce a circulatory system, which flows cool water into the racks and warm water out.

In Northern France, OVH has its own take on liquid cooling, as well as the racks we saw earlier. It builds data centers in repurposed factories which formerly made tapestry, soft drinks and medical supplies, and liquid cooling allows it to treat these facilities as shells. Instead of building out in one go, with a raised floor and building-level air conditioning, OVH stacks in racks as needed, connecting them to a liquid cooling system.

“Our model is that we buy existing buildings and retrofit them to use our technology,” OVH chief industrial officer François Stérin explained. “We can do that because we make our own racks with a water cooling system that is quite autonomous - and we also use a heat exchanger door on the back of the racks. This makes our racks pretty agnostic to the rest of the building.”

The flexibility helps with changing markets, said Stérin: “To test the market we don't need to build a giant 100MW mega data center, we can start with like a 1MW data center and see how the market works for us.”

With a lot of identical racks, and a factory at its disposal, OVH has pushed the technology forward, showing DCD multiple versions of the concept. OVH technicians demonstrated a maintenance procedure on the current version of the cooling technology.

It seemed a little like surgery. First the tubes carrying the cooling fluid were sealed with surgical clamps, then the board was disconnected from the pipes and removed. Then a hard drive was replaced with an SSD.

Already this design is being superseded by another one, which uses bayonet joints, so the board can be unplugged without the need to clamp the tubes.

Less radical liquid cooling systems are available, including heat exchangers in the cabinet doors, which can be effective at levels where air cooling would still be a viable option.

OVH combines this with its circulation system. The direct liquid cooling removes 70 percent of the heat from its IT equipment, but the other 30 percent still has to go somewhere - and is removed by rear-door heat exchangers. This is a closed loop system, with the heat rejected externally.
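To make the 70/30 split concrete, here is a rough sketch of the arithmetic in Python. The 20kW rack load is an illustrative assumption, not an OVH figure, and the function name is hypothetical.

```python
def split_heat_load(it_load_kw, liquid_fraction=0.70):
    """Split a rack's heat load between direct liquid cooling and the rear door.

    liquid_fraction defaults to the 70 percent quoted for OVH's direct
    liquid cooling; the remainder is assumed to reach the rear-door
    heat exchanger.
    """
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    liquid_kw = it_load_kw * liquid_fraction
    rear_door_kw = it_load_kw - liquid_kw
    return liquid_kw, rear_door_kw


# Illustrative rack load (an assumption for this sketch, not a measured value).
liquid_kw, rear_door_kw = split_heat_load(20.0)
print(f"Direct liquid cooling: {liquid_kw:.1f} kW")
print(f"Rear-door heat exchanger: {rear_door_kw:.1f} kW")
```

On those assumptions, the rear door only has to handle a load that is well within the range where air cooling is comfortable.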

Liquid cooling isn’t necessary for a system designed to be installed in a shell. It’s now quite common to see data centers where a contained row has been constructed on its own, on a cement floor, connected to a conventional cooling system. Mainstream vendors such as Vertiv offer modular builds that can be placed on cement floors, while others offer their own take.

One interesting vendor is Giga Data Centers, which claims its WindChill enclosure can achieve a PUE of 1.15, even when it is being implemented in small increments within a shell, such as the Mooresville, North Carolina building where it recently opened a facility.

The approach here is to build an air cooling system alongside the rack, so a large volume of air can be drawn in and circulated.
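For readers who want to put the PUE claim in context: power usage effectiveness is total facility power divided by IT power, so a PUE of 1.15 implies 15 percent overhead on top of the IT load. A minimal Python sketch, using an assumed 1MW IT load purely for illustration (the function names are hypothetical):

```python
def pue(total_facility_kw, it_kw):
    """Power usage effectiveness: total facility power over IT power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw, pue_value):
    """Non-IT power (cooling, power distribution losses, etc.) implied by a PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_kw * (pue_value - 1.0)


# Assumed 1MW IT load, with the PUE of 1.15 claimed for the enclosure.
it_load_kw = 1000.0
extra_kw = overhead_kw(it_load_kw, 1.15)
print(f"Overhead at PUE 1.15: {extra_kw:.0f} kW")
print(f"Check: PUE = {pue(it_load_kw + extra_kw, it_load_kw):.2f}")
```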

Don’t be lulled into a false sense of security. The design of hardware is changing just as fast as ever, and those building colocation data centers need to keep track of developments.