The Tokyo Institute of Technology (Tokyo Tech or TiTech) is building a new energy-efficient supercomputer which will combine machine learning with number-crunching, according to an announcement (Japanese).

The Tsubame3.0 machine should be in operation this summer, providing at least 47.2 petaflops of 16-bit half-precision performance, said the announcement. Working in conjunction with the existing Tsubame2.5 system, it will offer 64.3 petaflops, keeping Tokyo Tech the largest supercomputing center in Japan, a title it has held since 2010 with the earlier Tsubame generation.
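The combined figure implies how much the older machine contributes. Below is a quick back-of-the-envelope check. It assumes the 64.3-petaflop total is a simple sum of the two systems' peak figures, which the announcement as reported here does not state explicitly.

```python
# Peak figures quoted in the announcement, in petaflops.
TSUBAME3_HALF_PRECISION_PF = 47.2
COMBINED_PF = 64.3

# Assumption: the combined figure is the plain sum of both systems' peaks.
# round() hides the tiny binary floating-point error in the subtraction.
tsubame25_share_pf = round(COMBINED_PF - TSUBAME3_HALF_PRECISION_PF, 1)
print(f"Implied Tsubame2.5 contribution: {tsubame25_share_pf} PF")
```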

AI demands

Many supercomputers are built for 64-bit calculations, but in the AI and big data fields 16-bit half-precision processing can be more useful, says Tokyo Tech.
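To make the trade-off concrete, a 16-bit value takes a quarter of the storage of a 64-bit one but keeps only about three significant decimal digits. The sketch below uses Python's standard `struct` module, whose `e` format code is IEEE 754 half precision, to round-trip 0.1 through both formats. It illustrates the number formats only and says nothing about how Tsubame3.0's hardware executes them.

```python
import struct

def round_trip(value: float, fmt: str) -> float:
    """Pack a Python float into the given IEEE 754 format and unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

x = 0.1
half = round_trip(x, "<e")    # 16-bit half precision
double = round_trip(x, "<d")  # 64-bit double precision

# Storage size in bytes, then the value that survives the round trip.
print(struct.calcsize("<e"), half)    # 2 0.0999755859375
print(struct.calcsize("<d"), double)  # 8 0.1
```

Half precision loses accuracy in the fourth significant digit, which many machine learning workloads tolerate in exchange for moving and processing four times as many values per byte.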

Tsubame3.0, the latest in the government-funded Tsubame supercomputer series, will use 2,160 Nvidia Pascal GPUs, continuing a connection with Nvidia that saw Tesla chips in Tsubame1.2, Fermi in v2.0 and Kepler in v2.5.

The system will combine machine learning directly with simulation, so that it can refine models such as weather forecasts by comparing simulation results with real-world observations.

“In the area of supercomputing, AI is rapidly becoming an important application,” said Ian Buck, vice president of Nvidia’s accelerated computing business. “Tsubame3.0 is expected to change the lives of people in various fields such as medical, energy and transportation.”

In double-precision calculations, the machine’s theoretical performance is 12.15 petaflops.

The system has 1.08PB of in-node NVMe SSD storage, along with a 15PB Lustre parallel file system from DDN.

The system uses outdoor cooling towers to supply cooling water at the temperature of the outside air, reducing energy consumption and giving a promised PUE (power usage effectiveness) of 1.033.
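PUE is the ratio of total facility power to the power drawn by the IT equipment itself, so 1.0 is the ideal and 1.033 means roughly 3.3 percent extra for cooling and other overhead. The minimal sketch below shows that arithmetic. It uses a hypothetical 1,000 kW IT load rather than any figure from Tokyo Tech, and the helper names are illustrative only.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    return total_facility_kw / it_equipment_kw

def overhead_kw(it_equipment_kw: float, target_pue: float) -> float:
    """Power spent on cooling, power conversion, etc. at a given PUE."""
    return it_equipment_kw * (target_pue - 1.0)

# Hypothetical IT load of 1,000 kW (not a figure from the announcement).
it_load_kw = 1000.0
print(round(overhead_kw(it_load_kw, 1.033), 1))  # 33.0
print(round(pue(it_load_kw + 33.0, it_load_kw), 3))  # 1.033
```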

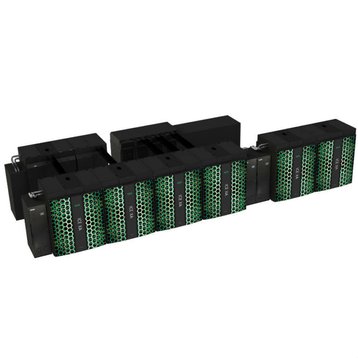

The system has 540 SGI ICE compute nodes, each with two Intel Xeon E5-2680 v4 processors and four Nvidia Tesla P100 GPUs.
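The per-node configuration lets the system-wide totals be cross-checked. The short calculation below uses only figures from this article. It assumes decimal units (1 PB = 1,000 TB) and that the in-node SSD capacity is split evenly across all compute nodes.

```python
NODES = 540
GPUS_PER_NODE = 4
CPUS_PER_NODE = 2
IN_NODE_SSD_PB = 1.08

total_gpus = NODES * GPUS_PER_NODE  # Tesla P100 GPUs across the system
total_cpus = NODES * CPUS_PER_NODE  # Xeon E5-2680 v4 sockets across the system

# Assumption: decimal units and an even split of SSD capacity per node.
ssd_per_node_tb = round(IN_NODE_SSD_PB * 1000 / NODES, 2)

print(total_gpus, total_cpus, ssd_per_node_tb)  # 2160 1080 2.0
```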