On Monday, Intel announced it is commencing production of the Xeon D-1500 processor series, its third-generation 64-bit server SoC. Think of the D-1500 as the Broadwell microarchitecture at the edge of the network: the 14nm, DDR4-capable version of its hyperscale server processor, with the payoff hopefully being higher throughput for service providers investing in SDN.

It looks like Intel’s effort to render its own Atom processors obsolete.

“We’ve been able to keep the density targets between 20 and 45 watts,” announced Raejeanne Skillern, Intel’s general manager for cloud service provider business platforms, during an Intel press conference, “that enables this SoC [System-on-a-Chip] — despite having Xeon-class performance — to still operate a very low power level, to fit into the dense form factors you find at either the data center edge or the network edge.”

It’s here at the edge where network traffic is heaviest. You’ll find some Intel chips there now, but they’re Atom C units. While Atoms are inexpensive and draw little power, they’re less sophisticated and less competitive against ARM (the rising force in the data center), and they deliver thin profit margins.

Atom also provided the one key virtue data centers need, particularly those belonging to service providers: the ability to rapidly scale up. Need more compute power? Add more Atoms.

To successfully kick Atom to the side and make way for Xeon D, Intel needs to face its biggest data center challenge yet — a challenge that literally came from out of the blue.

Facebook moves the goalpost

Facebook is arguably the largest single customer for any hyperscale server maker. In the summer of 2012, in what history will record as a brilliant move, Facebook not only dictated its specifications for the servers it would purchase in a public forum, but encouraged other service providers to claim those specs for themselves.

The Open Compute specs dictate the power envelope, network capacity, and tolerance levels for the servers Facebook prefers. Now server makers are forced to follow their customers’ lead, and Intel has to build processors that stay within the envelope.

Intel could still accomplish this with cheap processors. But “cheap” doesn’t put money in the coffers and, in turn, fails to keep shareholders appeased. Its alternative is to innovate: to compress more compute power, rather than just more hot transistors, into less space. It wants to push its SoCs where they’ve never gone before.

It won’t be an easy pitch to make. Open Compute defines “system on a chip” differently than Intel does: as a microserver that fits dynamic memory and local storage on a card roughly the size of a PCIe card, one of several that plug into a row of slots on a baseboard. It’s a definition Facebook devised along with AppliedMicro Circuits Corp., a leading ARM licensee.

You think Intel keeping up with the demands of Moore’s Law was tough?

Raejeanne Skillern puts her best face forward. “We see this as an amazing opportunity to drive the SoC form factor density to the edge, as well as the network edge, without sacrificing any of the density that you would get from the current infrastructure footprint that’s already out there,” she told reporters. Xeon D-1500, she said, will be positioned “for those applications at the data center’s edge that need optimized infrastructure, performance per watt, and for dynamic Web or more storage or networking [-oriented] applications.”

The upside potential

Until very recently, x86 processors were described as being adaptable to almost any situation. Their distinctions could be plotted on a linear scale. On one side was high performance; on the other, low power consumption. In the middle was a “workhorse” processor that was often said to be the best value for the money.

Competition can awaken you to new realities quite quickly.

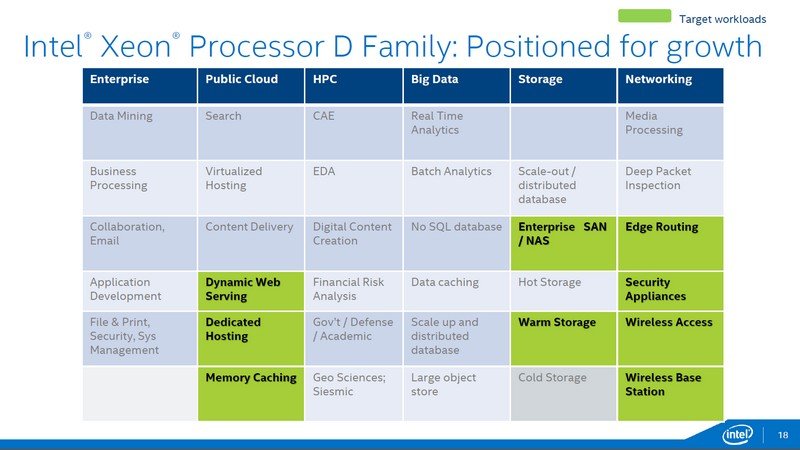

Nidhi Chappell, Intel’s Xeon D product line manager, has developed a new, non-linear chart depicting a limited number of target workload classes for which she feels Xeon D would be best suited. Enterprise applications, HPC, and big data are not targeted at all; Intel feels those classes are best served by Xeon E5 v3, which it introduced last September. Hosting, memory caching (memcached) for public cloud services, warm storage for shared SANs, and edge routing all fall within Xeon D’s target zone.

“We see a lot of lightweight, hyperscale workloads,” said Chappell. “These are the workloads that actually care about density, about a lot of users… in a rack. These are the workloads that will probably gravitate towards the Xeon D product family. The rest of the workloads in the enterprise — the public cloud space, HPC, and big data — are probably going to look for higher performance, and we expect they will stick with our E5 or E7 product line.”

Chappell conceded that Intel has low market share in the storage and networking segments. Warm storage, as Facebook defines it, consists of stored objects (usually photos and videos) that are not accessed every moment but still require rapid availability, much more so than anything moved to a cold storage archive.

But the biggest potential upside for Intel with Xeon D, she says, is in the networking space, where the move to SDN is fully under way.

“As network providers go into network transformation, the edge routers, the security appliances, the wireless access points, the base stations — they will all need to get more intelligent. This is where they would really prefer to have a really smart, low TDP [thermal design power] device with Xeon-class rack capabilities [and] 10-gig Ethernet.”

Yet there’s one loftier goal not on Chappell’s list that Intel perceives as achievable for Xeon D. If it seemed like a stretch at first that Atom, a processor first intended for netbooks, could be extended to the hyperscale data center, imagine a processor geared for SDN-endowed servers being extended to embedded systems.

Raejeanne Skillern revealed that she still perceives Xeon D in a linear sense: as an end-to-end product line that links two ends some folks might never have thought needed linking. IoT appliances that would normally require low-power embedded processors are one example. And at one point she said, “Use cases like manufacturing floor robotics… are going to need the performance of Xeon brought into an IoT edge device.”

Tale of the tape

This release brings two Xeon D models: the D-1520, with 4 cores clocked at 2.2 GHz, and the D-1540, with 8 cores clocked at 2.0 GHz. Both operate within a 45 W power envelope, and both have a maximum all-core turbo frequency of 2.5 GHz, with a single core able to reach 2.6 GHz.

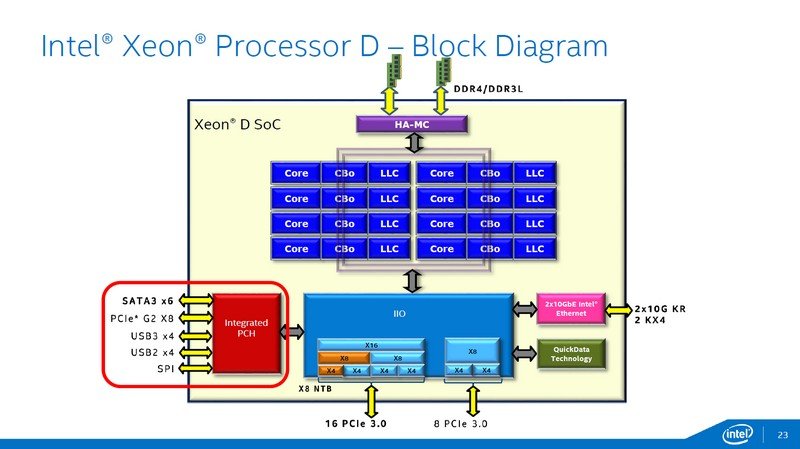

One of the highlights of Intel’s Broadwell architecture is its twin memory controllers. In the Xeon D configuration, they manage twin ring interconnects among up to eight cores. The ring system replaces the crossbar structure that was prevalent in Atom C and previous Xeon models. As with other Broadwell SoCs, Xeon D supports DDR4 at up to 2133 mega-transfers per second (MT/sec) and DDR3L at up to 1600 MT/sec. The twin controllers provide two channels with up to two DIMMs per channel.

If you do the math, two channels with two DIMMs each gives four slots, for a maximum of 128 GB of DRAM using RDIMMs, or up to 64 GB using the smaller UDIMMs or SODIMMs.
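The arithmetic behind those ceilings can be sketched as below. The per-module maximums (32 GB per RDIMM, 16 GB per UDIMM or SODIMM) are assumptions back-calculated from the quoted totals, not figures from Intel, and the bandwidth line assumes standard 64-bit (8-byte) DDR channels; treat it as a theoretical peak rather than a measured number.

```python
# Back-of-the-envelope memory limits for the Xeon D-1500, using the article's
# figures: two channels, up to two DIMMs per channel.
# ASSUMPTIONS (not from Intel): 32 GB max per RDIMM, 16 GB max per UDIMM or
# SODIMM, and a standard 64-bit (8-byte) data path per DDR channel.

CHANNELS = 2
DIMMS_PER_CHANNEL = 2
BYTES_PER_TRANSFER = 8  # 64-bit channel

# Hypothetical per-module maximums, chosen to reproduce the quoted totals.
MAX_DIMM_GB = {"RDIMM": 32, "UDIMM": 16, "SODIMM": 16}


def max_capacity_gb(module_type: str) -> int:
    """Total DRAM with every slot holding the largest (assumed) module."""
    slots = CHANNELS * DIMMS_PER_CHANNEL
    return slots * MAX_DIMM_GB[module_type]


def peak_bandwidth_gbs(mega_transfers_per_sec: int) -> float:
    """Theoretical peak bandwidth across all channels, in decimal GB/s."""
    bytes_per_sec = CHANNELS * mega_transfers_per_sec * 1_000_000 * BYTES_PER_TRANSFER
    return bytes_per_sec / 1e9


for kind in MAX_DIMM_GB:
    print(f"{kind}: up to {max_capacity_gb(kind)} GB")
print(f"DDR4-2133:  {peak_bandwidth_gbs(2133):.1f} GB/s theoretical peak")
print(f"DDR3L-1600: {peak_bandwidth_gbs(1600):.1f} GB/s theoretical peak")
```

Sustained bandwidth in practice will land below that peak once refresh cycles and controller overhead are accounted for.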

The last-level cache (LLC, or L3) totals up to 12 MB, divided into eight slices of 1.5 MB each but shared among all cores. Partitioning the LLC enables Intel’s cache monitoring technology, introduced with Xeon E5 v3, which can identify threads whose behavior conflicts with other threads and slows down the entire system. In addition, Xeon D includes cache allocation capability, enabling those “noisy neighbors” to be separated into isolated blocks, reducing slowdowns. Each core has 32 KB of L1 cache for data and 32 KB for instructions, plus its own 256 KB unified L2 cache, connected through the ring interconnect to the shared LLC.
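One way to picture cache allocation is through capacity bitmasks (CBMs): each group of threads is given a contiguous run of bits, and each bit stands for a share of the LLC. The sketch below formats masks in the style of the Linux resctrl `schemata` file. The 12-bit mask width, the single cache domain with ID 0, and the equal share per bit are assumptions for illustration; real hardware reports its mask width in `/sys/fs/resctrl/info/L3/cbm_mask`.

```python
# A minimal sketch of cache allocation masks in the style of Linux's resctrl
# "schemata" file (e.g. a line such as "L3:0=ffc").
# ASSUMPTIONS: a 12-bit capacity bitmask (CBM), a single L3 domain with ID 0,
# and an equal slice of cache per mask bit.

LLC_MB = 12.0  # D-1540 last-level cache size, per the article
CBM_BITS = 12  # hypothetical mask width for this illustration


def is_contiguous(mask: int) -> bool:
    """Intel CAT requires a capacity bitmask's set bits to be contiguous."""
    if mask <= 0:
        return False
    lowest_set = (mask & -mask).bit_length() - 1
    shifted = mask >> lowest_set            # strip trailing zero bits
    return (shifted & (shifted + 1)) == 0   # true only for runs like 0b111


def schemata_line(mask: int, cache_id: int = 0) -> str:
    """Format a mask as one resctrl schemata entry, rejecting invalid masks."""
    if mask >= (1 << CBM_BITS) or not is_contiguous(mask):
        raise ValueError(f"invalid capacity bitmask {mask:#x}")
    return f"L3:{cache_id}={mask:x}"


def share_mb(mask: int) -> float:
    """Approximate LLC capacity a mask covers, assuming equal-sized bits."""
    return LLC_MB * bin(mask).count("1") / CBM_BITS


# Fence a "noisy neighbor" into the two lowest bits and give every other
# workload the remaining ten, so the two groups never share cache capacity.
NOISY = 0b0000_0000_0011
OTHERS = 0b1111_1111_1100
assert (NOISY & OTHERS) == 0  # non-overlapping masks -> isolated allocations

for name, mask in (("noisy", NOISY), ("others", OTHERS)):
    print(f"{name:>6}: {schemata_line(mask)}  (~{share_mb(mask):.1f} MB)")
```

On a Linux kernel with resctrl support, a line like these would be written to the `schemata` file of a group directory under `/sys/fs/resctrl`, with the group's process IDs written to its `tasks` file; the sketch only builds and checks the strings.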

The PCIe I/O subsystem finally moves to Gen3, providing 24 lanes that may be divided among PCIe x16, x8, or x4 ports as OEMs require. Two 10-gigabit Ethernet ports provide high network bandwidth. Rounding out the I/O is an integrated Platform Controller Hub (PCH) on a separate die within the package, managing up to six SATA3 ports, up to eight PCIe Gen2 lanes, up to four USB3 and four USB2 ports, and one standard Serial Peripheral Interface (SPI) port.
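To put the 24 Gen3 lanes in perspective, the sketch below computes per-lane bandwidth from the Gen3 signaling rate (8 GT/s with 128b/130b encoding) and enumerates every way x16, x8, and x4 ports could add up to 24 lanes. The enumeration is arithmetic only: which splits a given board actually supports is up to the platform and its firmware, and is not something this sketch claims to know.

```python
from itertools import combinations_with_replacement

# PCIe Gen3 signals at 8 GT/s per lane with 128b/130b encoding.
# The 24-lane total and x16/x8/x4 widths come from the article; the list of
# splits is pure arithmetic, not a list of validated board configurations.
GEN3_GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130
TOTAL_LANES = 24
PORT_WIDTHS = (16, 8, 4)


def lane_gbytes_per_s() -> float:
    """Per-lane, per-direction bandwidth in GB/s, before packet overhead."""
    return GEN3_GT_PER_S * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def port_splits(total: int = TOTAL_LANES, widths: tuple = PORT_WIDTHS) -> list:
    """Every multiset of port widths whose lanes add up exactly to `total`."""
    splits = []
    for n_ports in range(1, total // min(widths) + 1):
        for combo in combinations_with_replacement(widths, n_ports):
            if sum(combo) == total:
                splits.append(combo)
    return splits


print(f"per lane: {lane_gbytes_per_s():.3f} GB/s each direction")
print(f"all {TOTAL_LANES} lanes: {TOTAL_LANES * lane_gbytes_per_s():.1f} GB/s each direction")
for split in port_splits():
    print(" + ".join(f"x{w}" for w in split))
```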

In tests conducted in February, a pre-production 8-core Xeon D-1540 clocked at 1.9 GHz delivered about 30 percent better performance per watt in Web server benchmarks than an 8-core Atom C2750 clocked at 2.4 GHz. Technicians expect the production D-1540 at 2.0 GHz to achieve 74 percent better performance per watt.

Intel claims that in the SPECint_rate_base2006 integer performance benchmark, at the low end of the CPUs’ respective performance ranges, servers built on each of the pre-production Xeon D models delivered 510 percent of the performance of a two-socket server equipped with Intel’s recently released Xeon E5-2603 v3. That is to say, when both classes performed as slowly as they could, the E5 was over five times slower. When both classes performed at peak levels, both Xeon Ds were still about 35 percent faster.

This also shows the wildly varying performance range of the E5 in sustained integer math operations, which is not to its benefit. But it does highlight the performance consistency of Xeon D, and SDN applications do require consistency.
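Intel’s comparisons above mix two phrasings that are easy to conflate: “X percent of” a baseline and “X percent better than” one. Delivering 510 percent of a baseline is a 5.1x ratio (410 percent faster), while 35 percent faster is 1.35x. The helper below, whose function names are made up for this illustration, converts the figures quoted in the article into plain multipliers.

```python
# Converting the two ways the benchmark claims are phrased into multipliers.
# Function names are hypothetical, chosen for this illustration only.

def multiplier_from_percent_of(percent_of_baseline: float) -> float:
    """'Delivers X percent the performance of' the baseline -> X / 100."""
    return percent_of_baseline / 100


def multiplier_from_percent_better(percent_better: float) -> float:
    """'X percent better (or faster) than' the baseline -> 1 + X / 100."""
    return 1 + percent_better / 100


# Figures as quoted in the article.
claims = {
    "SPECint_rate_base2006, low end (510% of E5-2603 v3)": multiplier_from_percent_of(510),
    "SPECint_rate_base2006, peak (35% faster)": multiplier_from_percent_better(35),
    "perf/W, pre-production D-1540 vs C2750 (30% better)": multiplier_from_percent_better(30),
    "perf/W, production D-1540 vs C2750 (74% better, expected)": multiplier_from_percent_better(74),
}
for label, ratio in claims.items():
    # 1 / ratio expresses the baseline's result as a fraction of Xeon D's.
    print(f"{label}: Xeon D at {ratio:.2f}x, baseline at {1 / ratio:.0%}")
```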

Last September, when Xeon E5 v3 was released, Intel spoke about the prospects for E5 in network data centers, including at the network edge where all the routing takes place. Now that Xeon D has arrived on the Broadwell architecture, it is Xeon D that’s being touted as the CPU for the edge. It all demonstrates the rapidly shifting strategies of CPU makers in a newly competitive landscape, where the adversary is no longer an x86 maker but a firm helping other manufacturers kick x86 out of the data center altogether.

Intel’s game plan is to kick back: to boot ARM out of its home territory. It’s that IoT long shot that Intel’s Raejeanne Skillern believes “is really going to open up the door for Xeon D and the Xeon family spanning edge-to-edge from the data center to the end device.”