Traditional data centers have a relatively static computing infrastructure: “X” servers, each with a set number of CPUs and a fixed amount of memory. Workloads, however, vary, especially in commercial data centers. The sweet spot of data-center operation is having enough resources to cover peak demand without under-utilizing those same resources during non-peak periods.

Currently, data-center operators err on the safe side, spending the money to keep under- or non-utilized servers in the active pool. It is the only way to ensure their customers get adequate service during periods of high demand, and that kind of insurance can get expensive.

The disaggregated data center

Disaggregation by definition means “separation into components.” Separating data-center equipment, in particular servers, into resource components gives operators flexibility: a better chance of covering periods of high demand while keeping utilization high.

One method of data-center disaggregation, divorcing software from computing and networking hardware, is already well underway. It moves control of resource utilization in the right direction, but not far enough.

Doing it right, according to experts, requires replacing the traditional server-centric architecture with a resource-centric one: modular, connected pools of microprocessors, memory, and storage. Among other advantages, the modular arrangement allows statistical multiplexing, which markedly improves both resource provisioning and resource utilization.
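To make statistical multiplexing concrete, here is a minimal sketch using made-up demand numbers (the workloads, hours, and core counts are hypothetical and do not come from any source cited here). Server-centric provisioning sizes each workload's hardware for that workload's own peak; a shared pool only has to cover the peak of the combined demand.

```python
# Hypothetical hourly CPU-core demand for three workloads (illustrative numbers only).
DEMAND = {
    "web":     [4, 6, 10, 7, 3],
    "batch":   [9, 2, 1, 2, 8],
    "reports": [1, 3, 2, 9, 2],
}


def hourly_totals(demand):
    """Combined demand across all workloads for each hour."""
    return [sum(hour) for hour in zip(*demand.values())]


def server_centric_capacity(demand):
    """Each workload gets dedicated hardware sized for its own peak."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand):
    """A shared pool only has to cover the peak of the combined demand."""
    return max(hourly_totals(demand))


def average_utilization(demand, capacity):
    """Average fraction of provisioned capacity that is actually in use."""
    totals = hourly_totals(demand)
    return sum(totals) / (len(totals) * capacity)


if __name__ == "__main__":
    dedicated = server_centric_capacity(DEMAND)  # 10 + 9 + 9 = 28 cores
    pooled = pooled_capacity(DEMAND)             # busiest combined hour = 18 cores
    print(f"dedicated: {dedicated} cores, {average_utilization(DEMAND, dedicated):.0%} utilized")
    print(f"pooled:    {pooled} cores, {average_utilization(DEMAND, pooled):.0%} utilized")
```

With these numbers the pool needs 18 cores instead of 28, and average utilization rises from about 49% to about 77%. Pooling can never need more capacity than dedicated provisioning, because the peak of a sum cannot exceed the sum of the peaks; how much it saves depends on how rarely the workloads peak at the same time.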

As good as disaggregation sounds, we must not forget that using discrete resource pools means building interconnections to replace those previously contained on the server motherboard. Is that even possible? We know that electrical interconnections on printed circuit boards are already close to their physical and electrical limits.

Replace electrons with photons

The science of photonics may have the answer. Wikipedia describes photonics as “a research field whose goal is to use light to perform functions that traditionally fell within the typical domain of electronics such as telecommunications and information processing.”

Photons already carry traffic from data-center racks to the rest of the digital world, and researchers are finding ways to replace electrical signals all the way to the processor silicon. So why not use fiber-optic runs to interconnect the resource pools?

Ask the experts

Answering that question was on the minds of members of OSA (The Optical Society) Industry Development Associates (OIDA) and the Center for Integrated Access Networks when the organizations hosted a workshop focused on how photonics may facilitate data-center disaggregation. The workshop and the ensuing paper, “Roadmap Report on Photonics for Disaggregated Data Centers Workshop,” were sponsored by the US National Science Foundation.

The workshop planners were also hoping to answer the following:

- How can optics enable a migration to disaggregation?

- What does a photonics-enabled disaggregated data center look like?

- What performance metrics/requirements are important for photonics in disaggregated data centers?

To learn more about the workshop, I contacted Dr. Tom Hausken, senior adviser at The Optical Society and lead author of the paper. Hausken said that disaggregation offers improved efficiency and increased capacity, but its benefits are limited by the cost and performance of the photonic interconnections. “Latency requirements, in particular, impose hard limits on distances over which certain resources can be disaggregated,” said Hausken. “The question then becomes how far away can the resources be located?”
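Hausken's question can be framed as simple arithmetic. Light in silica fiber travels at roughly c/1.47, or about 4.9 ns per meter, so any latency budget translates directly into a maximum fiber length. This is a minimal sketch assuming a round-trip budget (request plus response) and a fixed overhead for transceivers and switching; the group index is an approximation, and the budgets and overhead in the usage lines are hypothetical placeholders, not figures from the report.

```python
# Speed of light in vacuum, m/s.
C_VACUUM_M_PER_S = 299_792_458
# Assumed group index for standard single-mode silica fiber (roughly 1.46 to 1.47).
FIBER_GROUP_INDEX = 1.47


def fiber_delay_ns_per_m(group_index=FIBER_GROUP_INDEX):
    """One-way propagation delay per meter of fiber, in nanoseconds."""
    return group_index / C_VACUUM_M_PER_S * 1e9


def max_fiber_distance_m(round_trip_budget_ns, overhead_ns=0.0,
                         group_index=FIBER_GROUP_INDEX):
    """Longest one-way fiber run whose round trip still fits the latency budget.

    round_trip_budget_ns: total request-plus-response latency the workload tolerates
    overhead_ns: time consumed by transceivers, switching, serialization, etc.
    """
    propagation_budget_ns = round_trip_budget_ns - overhead_ns
    if propagation_budget_ns <= 0:
        return 0.0  # the electronics alone already use up the budget
    # The signal crosses the fiber twice: once for the request, once for the response.
    return propagation_budget_ns / (2 * fiber_delay_ns_per_m(group_index))


if __name__ == "__main__":
    # Hypothetical budgets chosen only to show the scaling, not taken from the report.
    print(f"{fiber_delay_ns_per_m():.2f} ns/m")                     # about 4.90 ns/m
    print(f"{max_fiber_distance_m(100, overhead_ns=20):.1f} m")     # about 8.2 m
    print(f"{max_fiber_distance_m(10_000, overhead_ns=20):.0f} m")  # about 1018 m
```

The takeaway matches Hausken's point: a budget of around a hundred nanoseconds confines resources to roughly a rack's worth of fiber, while microsecond-scale budgets open up distances across a data-center floor.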

Hausken then talked about one of the key takeaways from the workshop. “The workshop attendees were optimistic about fiber-optic interconnects replacing electrical cables everywhere except on the rack shelf,” he said. “Optics will outcompete electrical interconnects for links that enable disaggregation if optics can hit the metrics of cost, performance, and size.”

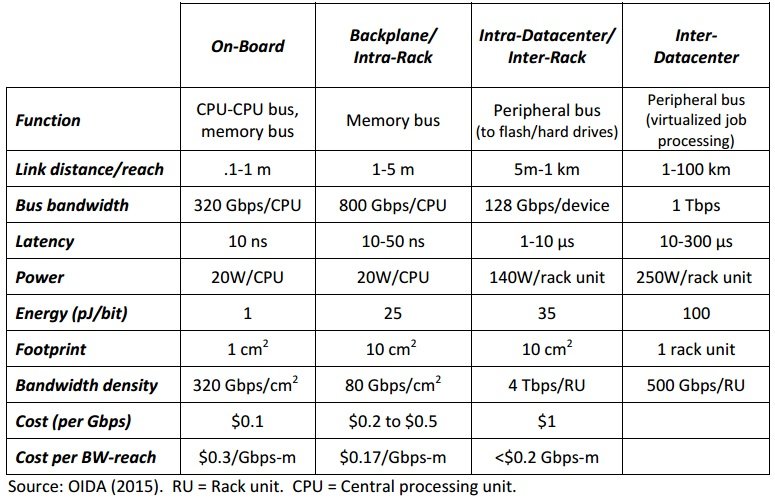

The graph above lists those metrics.

The report went on to discuss how the four links (on-board, backplane/intra-rack, intra-data center/inter-rack, and inter-data center) fit into data-center disaggregation:

On-Board (CPU to CPU and memory bus): The board contains the server or servers and connects to the backplane. On-board bus disaggregation means clustering pools of CPUs and/or memory separately on the board or on some type of module.

Backplane/Intra-Rack (memory bus): The backplane connects everything in the rack to the top-of-rack switch. “Disaggregating across the backplane would involve pooling one or more boards around a technology such as processors, memory, or storage,” the paper explains. “An example would be alternating boards of CPU-only and memory-only hardware.”

Intra-Data Center/Inter-Rack (peripheral bus): This bus involves links between racks throughout the data center. The bus could include Storage Area Networks that are standalone or share rack space. Intel’s Rack Scale Architecture exemplifies this type of disaggregation, where computing hardware is isolated from storage and networking hardware.

Inter-Data Center (peripheral bus): The paper emphasized that this is not the normal inter-data center link, but a link purposed specifically to share a resource among data centers, eliminating the need for each data center to perform the same operation.

Depending on the latency requirements, these interconnections could be considered custom, and thus affordable only to larger data-center operations. The report mentioned that “disaggregation of resources between data centers might target $10/Gbps, to the extent that it can meet latency requirements.”
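Because $10/Gbps is a normalized figure, turning it into a per-link budget is a matter of multiplication. A minimal sketch, assuming “link cost” means the total cost of whatever makes up the link (optics, fiber, and so on); the function names and the link prices in the usage lines are hypothetical, and only the $10/Gbps figure comes from the report.

```python
# Inter-data-center cost target mentioned in the OIDA report, in US dollars per Gbps.
TARGET_USD_PER_GBPS = 10.0


def link_cost_budget_usd(link_rate_gbps, usd_per_gbps=TARGET_USD_PER_GBPS):
    """Most a link of the given rate can cost while still hitting the $/Gbps target."""
    return link_rate_gbps * usd_per_gbps


def cost_per_gbps(link_cost_usd, link_rate_gbps):
    """Normalize a link's total cost to dollars per Gbps of capacity."""
    return link_cost_usd / link_rate_gbps


def meets_target(link_cost_usd, link_rate_gbps, usd_per_gbps=TARGET_USD_PER_GBPS):
    """True if the link's cost per Gbps is at or below the target."""
    return cost_per_gbps(link_cost_usd, link_rate_gbps) <= usd_per_gbps


if __name__ == "__main__":
    print(link_cost_budget_usd(100))  # 1000.0: a 100 Gbps link may cost up to $1,000
    # Hypothetical link prices, for illustration only.
    print(meets_target(1_500, 100))   # False: $15/Gbps
    print(meets_target(3_000, 400))   # True: $7.50/Gbps
```

Normalizing by capacity is what lets faster links meet the same target at a higher absolute price, which is one reason cost targets are usually quoted per Gbps rather than per link.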

The OIDA paper stated that the graph entries are agnostic about interconnection technology, adding: “If optical interconnects meet the specifications at a similar cost as electrical interconnects, and offer equivalent or better performance; the optical solution will prevail.”

Is disaggregation ready for prime time?

Disaggregation at the server-component level comes across as the next logical evolution in data-center technology. However, disaggregation that granular appears feasible only if photonic interconnects are used.

I recall Dr. Hausken mentioning during our conversation that silicon photonics, the technology required to bring optics to the board level, is not ready, and that marketing types have done their job a little too well.

I then realized I was fast approaching the same conclusion I described in “Silicon photonics: still waiting,” namely, “Running light signals into your server hardware will disrupt your network — but not just yet.”