Intel Corp. has announced its own distribution for Apache Hadoop, the collection of open-source software for performing data analytics on clustered hardware.

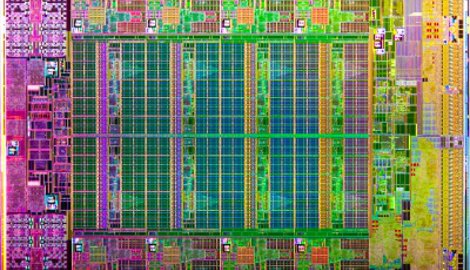

The primary solution is called “Intel Manager for Apache Hadoop,” which the company says it has built “from the silicon up” for high performance and security. The distribution provides complete encryption, with support for the Intel AES New Instructions (AES-NI) in Intel Xeon processors.

Boyd Davis, VP and general manager of Intel's Datacenter Software division, said people and machines were producing large volumes of information that analytics could use to enrich people's lives in many ways. "Intel is committed to contributing its enhancements made to use all of the computing horsepower available to the open-source community to provide the industry with a better foundation from which it can push the limits of innovation and realize the transformational opportunity of big data."

While there are multiple solutions for using clustered commodity hardware to perform analytics on large data sets, the Hadoop framework has emerged as the most popular one. Companies that provide popular distributions of the open-source software include Cloudera, MapR and Hortonworks.

Intel's silicon-based encryption support for the Hadoop Distributed File System (HDFS) means companies can analyze data sets securely without compromising performance, according to Intel. Analytics performance is further improved by optimizations the chipmaker made for the networking and I/O technologies in the Xeon.

Intel has also put some thought into simplifying the deployment, configuration and monitoring of analytics clusters, to help system administrators deploy new applications. This is done through the Intel Active Tuner for Apache Hadoop, which automatically configures the infrastructure for optimal performance, Intel says.

The chipmaker has partnered with a number of vendors to integrate the software into future infrastructure solutions and to work on deploying it in public and private cloud environments. The partners include Cisco, Cray, Dell, Hadapt, Infosys, SAP, SAS, Savvis and Red Hat, among others.

Intel's announcement came on the same day that data storage giant EMC unveiled its own Hadoop distribution, called “Pivotal HD.” It natively integrates the vendor's Greenplum database technology with the Hadoop framework.