Fifteen years ago, Nvidia made a decision that would eventually place it at the forefront of the artificial intelligence revolution, its chips highly sought after by developers and cloud companies alike.

It decided to change the nature of its graphics processing unit, then primarily used by the video games industry.

“We decided that a GPU, which was a graphics accelerator at the beginning, would become more and more general-purpose,” Nvidia CEO and co-founder Jensen Huang said at the company’s annual GPU Technology Conference in San Jose.

“We did this because we felt that in order to create virtual reality, we had to simulate reality. We had to simulate light, we had to simulate physics, and that simulation has so many different algorithms - from particle physics and fluid dynamics, and ray tracing, the simulation of the physical world requires a general-purpose supercomputing architecture.”

Seeing the light

The impetus to create a general-purpose graphics processing unit (GPGPU) came when Nvidia “saw that there was a pool of academics that were doing something with these gaming GPUs that was truly extraordinary,” Ian Buck, the head of Nvidia’s data center division, told DCD.

“We realized that where graphics was going, even back then, was more and more programmable. In gaming they were basically doing massive simulations of light that had parallels in other verticals - big markets like high performance computing (HPC), where people need to use computing to solve their problems.”

Huang added that, as a result, “it became a common sense computer architecture that is available everywhere. And it couldn’t have come at a better time - when all of these new applications are starting to show up, where AI is writing software and needs a supercomputer to do it, where supercomputing is now the fundamental pillar of science, where people are starting to think about how to create AI and autonomous machines that collaborate with and augment us.

“The timing couldn’t be more perfect.”

Analysts and competitors sometimes question whether Nvidia could have predicted its meteoric rise, or whether it just had the right products at the right time, as the world shifted to embrace workloads like deep learning that are perfect for accelerators. But, whether by chance or foresight, the company has found itself in demand in the data center, in workstations and, of course, in video games.

“We can still operate as one company, build one architecture, build one GPU and have it 85 percent leveraged across all those markets,” Buck said. “And as I’m learning new things and giving [them] to the architecture team, as the gaming guys are learning new things, etc, we’re putting that into one product, one GPU architecture that can solve all those markets.”

With its core GPU architecture finding success in these myriad fields, Nvidia is ready for its next major step - to push further and deeper into simulation, and help create a world it believes will be built out of what we find in those simulations.

The heart of a data center

One of the initiatives the company is working on to illustrate the potential of simulation is Project Clara, an attempt to retroactively turn the 3-5 million medical instruments installed in hospitals around the world into smart systems.

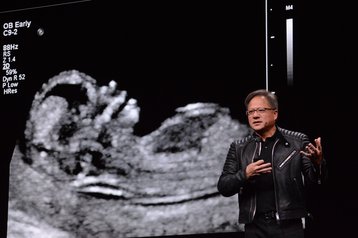

“You take this 15-year old ultrasound machine that’s sitting in a hospital, you stream the ultrasound information into your data center. And your data center, running this stack on top of a GPU server, creates this miracle,” Huang said, as he demonstrated an AI network taking a 2D gray scan of a heart, segmenting out the left ventricle, and presenting it in motion, in 3D and in color.

Nvidia bills Clara as a platform, and has turned to numerous partners, including hospitals and sensor manufacturers, to make it a success. When it comes to software, the company often relies on others, looking to build an ecosystem in which its hardware is key.

Sometimes, Nvidia is happy to take a backseat with software, but there is one area in which the company is clear it hopes to lead - autonomous vehicles. “We’re dedicating ourselves to this. This is the ultimate HPC problem, the ultimate deep learning problem, the ultimate AI problem, and we think we can solve this,” Huang said.

The company operates a fleet of self-driving cars (temporarily grounded after Uber’s fatal crash in March), with each vehicle producing petabytes of data every week, which is then categorized and labeled by more than a thousand ‘trained labelers.’

These vehicles cannot cover enough ground, however, to match even a fraction of the miles driven by humans in a year. “Simulation is the only path,” Carlos Garcia-Sierra, Nvidia’s business development manager of autonomous vehicles, told DCD.

Digital reality

This approach has been named Drive Constellation, a system which simulates the world in one server, then outputs it to another that hosts the autonomous driver.

Martijn Tideman, product director of TASS International, which operates its own self-driving simulation systems, told DCD: “You need to model the vehicle, create a digital twin. But that’s not enough, you need a digital twin of the world.”

“This is where Nvidia’s skill can really shine,” Huang said. “We know how to build virtual reality worlds. In the future there will be thousands of these virtual reality worlds, with thousands of different scenarios running at the same time, and our AI car is navigating itself and testing itself in those worlds, and if any scenarios fail, we can jump in and figure out what is going on.”

Following self-driving vehicles, a similar approach is planned for the robotics industry. “I think logically it makes sense to do Drive Constellation for robotics,” Deepu Talla, VP and GM of Autonomous Machines at Nvidia, told DCD.

It is still early days, but Nvidia’s efforts in this area are known as ‘The Isaac Lab,’ which aims to develop “the perception, the localization, the mapping and the planning capability that is necessary for robots and autonomous machines to navigate complex worlds,” Huang added.

As Nvidia builds what it calls its ‘Perception Infrastructure’ to allow physical systems to be trained in virtual worlds, there are those trying to push the boundaries of simulation - for science.

“There is a definite objective to simulate the world’s climate, in high fidelity, in order to simulate all the cloud layers. Imagine the Earth, gridded up in 2km grids and 3D too,” Buck told DCD. “It’s one of the things that they’re looking for, for exascale.”
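To give a sense of the scale Buck is describing, here is a rough back-of-the-envelope sketch of how many cells such a grid would contain. The 2km horizontal spacing comes from his quote; the Earth's mean radius is a standard figure, and the number of vertical levels (`VERTICAL_LEVELS`) is purely an illustrative assumption, as the article gives no value for it.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean radius of the Earth
CELL_EDGE_KM = 2.0         # horizontal grid spacing, from Buck's quote
VERTICAL_LEVELS = 100      # ASSUMPTION: illustrative only, not from the article


def surface_area_km2(radius_km: float) -> float:
    """Surface area of a sphere with the given radius."""
    return 4.0 * math.pi * radius_km ** 2


def grid_columns(cell_edge_km: float, radius_km: float = EARTH_RADIUS_KM) -> float:
    """Approximate number of square surface cells of the given edge length."""
    return surface_area_km2(radius_km) / cell_edge_km ** 2


columns = grid_columns(CELL_EDGE_KM)
cells = columns * VERTICAL_LEVELS

print(f"Surface columns: {columns:.3e}")
print(f"3D cells with {VERTICAL_LEVELS} assumed levels: {cells:.3e}")
```

On these assumptions the surface alone needs well over a hundred million columns, before any vertical layers or time steps are counted.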

Huang said: “We’re going to go build an exascale computer, and all of these simulation times will be compressed from months down to one day. But what’s going to happen at the same time is that we’re going to increase the [complexity of the] simulation model by a factor of 100, and we’re back to three months, and we’re going to find a way to build a 10 exaflops computer. And then the size of science is going to grow again.”
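Huang's arithmetic holds up under a simple model. The sketch below assumes, for illustration only, that run time grows in direct proportion to model complexity and shrinks in direct proportion to machine speed; the function name and the average month length are our own choices, not anything Nvidia has stated.

```python
DAYS_PER_MONTH = 30.44  # average length of a calendar month


def runtime_days(baseline_days: float, complexity_factor: float,
                 speedup_factor: float) -> float:
    """Run time under an ASSUMED linear scaling model:
    proportional to complexity, inversely proportional to machine speed."""
    return baseline_days * complexity_factor / speedup_factor


# Huang's starting point: a simulation that takes one day at exascale.
exascale_days = 1.0

# Make the model 100 times more complex on the same machine.
bigger_model_days = runtime_days(exascale_days, complexity_factor=100,
                                 speedup_factor=1)
bigger_model_months = bigger_model_days / DAYS_PER_MONTH

# Move the bigger model to a machine 10 times faster (10 exaflops).
ten_exaflops_days = runtime_days(exascale_days, complexity_factor=100,
                                 speedup_factor=10)

print(f"100x model at exascale: {bigger_model_days:.0f} days "
      f"(about {bigger_model_months:.1f} months)")
print(f"100x model at 10 exaflops: {ten_exaflops_days:.0f} days")
```

A one-day run made 100 times more complex takes 100 days, or a little over three months, which matches Huang's figure. Under the same assumption, a 10 exaflops machine would bring it back down to about 10 days.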

It is this train of thought, of ever more complex simulations on ever more powerful hardware, that has led some to propose the ‘simulation hypothesis’ - that we are all living in a Matrix-like artificial simulation, perhaps within a data center.

We asked Huang about his views on the hypothesis: “The logic is not silly in the sense that how do we really know, how do we really know? Equally not silly is that we’re really machines, we’re just molecular, biological machines, right?

“How do we know that we’re not really a simulation ourselves? At some level you can’t prove it. And so, it’s not any deeper than that. But I think the deeper point is: Who gives a crap?”

This article appeared in the April/May issue of DCD Magazine.