When data center power consumption came under public scrutiny, operators realized that they needed new ways to save energy. Vendors started re-engineering their hardware to make it more energy efficient and to minimize overall power consumption.

One of the more high-profile efforts was to make the low-energy processor designs from ARM a contender for the role of data center CPU (see fact file). A number of vendors took the approach of building a massively scalable system using hundreds, if not thousands, of ARM cores.

HP’s Project Moonshot was the best known of these efforts, and AMD got into the game by purchasing an existing ARM microserver vendor, SeaMicro, a business it closed down in 2015.

While this was going on, AMD was working to build its own low-energy chips based on ARM cores, but the effort suffered delays, and when the Opteron A1100 series was released in January 2016, it was almost a year late.

A new approach

While products suffered delays, the underlying market dynamic also changed. The low-power ARM-based server concept depended on deploying large numbers of moderate-capability CPUs to support specific applications, or classes of applications, that didn’t require the power needed by a major database application.

But this fell by the wayside as a different idea emerged: as virtual machines became a data center standard, previously wasted CPU cycles could be used to support additional virtual machines.

ARM CPUs still have their reputation for handling limited applications and moderate requirements in exchange for lower cost (both capex and opex), but they seem to have lost their place in the data center when considered for general IT computing loads.

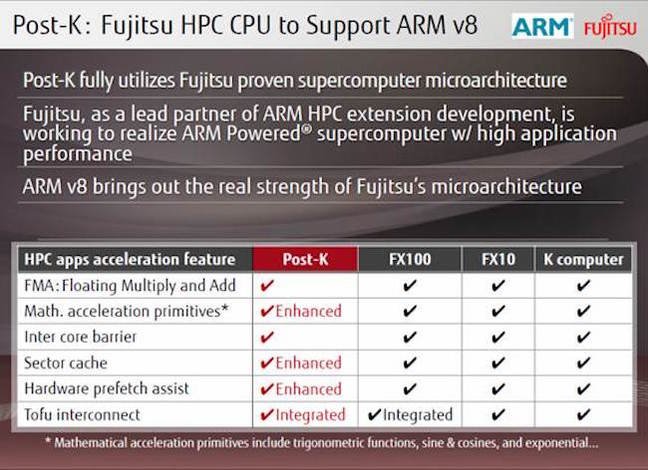

The perception of the ARM processor as suitable only for mobile devices and other implementations where power consumption was paramount was shaken in 2016 when Fujitsu, which is contracted by Riken to build Japan’s next-generation supercomputer, announced its Post-K Flagship 2020 project: a 1,000 petaflop computing monster that would be powered by ARM CPUs. This would replace Japan’s K Computer, currently the world’s 5th fastest supercomputer. It would also be 8-10 times faster than today’s top-ranked supercomputer, China’s Sunway TaihuLight, representing a significant move towards exascale (a billion billion calculations per second) computing.

The current K Computer is built around SPARC64 VIIIfx CPUs, which Fujitsu developed on the SPARC architecture originated by Sun Microsystems and now owned by Oracle. Fujitsu has said it believes it can have more control over custom chip manufacturing by selecting ARMv8 designs than would have been possible by either staying with SPARC or moving to Intel. ARM also has a much larger software ecosystem than Linux on SPARC, so the combination of more support in software and more control over the hardware (apparently at the urging of Riken) made ARMv8 the best alternative for the Flagship project, Fujitsu says.

In August 2016, ARM added the Scalable Vector Extension (SVE) to the ARMv8 architecture. This SIMD (single instruction, multiple data) technology will enable supercomputers to process large data arrays with vectors of up to 2048 bits (compared to 128 bits in the current ARM Neon SIMD architecture). Implementing it is necessary to make the CPU suitable for this next-generation Fujitsu supercomputer and other potential exascale projects. Fujitsu is the lead partner with ARM in HPC extension development.
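To give a rough sense of what wider vectors mean in practice, the sketch below counts how many 64-bit values fit in one vector register and how many vector operations a single pass over an array would need. It is only an illustration: the function names, the array length, and the choice of widths (128, 512, and 2048 bits) are assumptions made here, and real performance depends on far more than lane count.

```python
# Illustrative arithmetic only: how vector width translates into elements
# processed per instruction. The function names and the array length are
# hypothetical; 2048 bits is the maximum width quoted in the article.

def lanes(vector_bits: int, element_bits: int = 64) -> int:
    """Number of elements of the given size that fit in one vector register."""
    if vector_bits % element_bits != 0:
        raise ValueError("element size must divide the vector width")
    return vector_bits // element_bits


def vector_ops(n_elements: int, vector_bits: int, element_bits: int = 64) -> int:
    """Vector operations needed for one pass over n_elements (rounded up)."""
    per_op = lanes(vector_bits, element_bits)
    return -(-n_elements // per_op)  # ceiling division


if __name__ == "__main__":
    n = 1_000_000  # hypothetical array of double-precision values
    for bits in (128, 512, 2048):  # illustrative widths, 2048 being the maximum
        print(f"{bits:>4}-bit vectors: {lanes(bits)} doubles per register, "
              f"{vector_ops(n, bits)} operations for {n} elements")
```

At the 2048-bit maximum, one register holds 32 double-precision values, against 2 in a 128-bit register, so a single pass over the same array needs one-sixteenth as many vector operations.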

Ideally, Fujitsu hopes to allow Riken to simply recompile scientific applications and enable auto-vectorization to take advantage of SVE. If recompiling alone is not enough, significant pieces of code would need to be reworked, delaying the start of operations for the Flagship 2020 supercomputer.

The types of data manipulation being considered for supercomputer operations, such as predicting global climate change or folding protein chains, are typically not found in the business data center, but routine business operations are manipulating ever larger data sets. Big data continues to accumulate information on everything from customer behavior to product feature sets, and that information is used to continuously model business potential.

Information is sliced, diced, and manipulated in ever-changing ways as businesses look for a competitive edge, and the companies at the forefront of data acquisition, such as Google, Facebook, Amazon, and Microsoft, continue to investigate new ways to make use of that data.

Working its way back in

This could be where ARM works its way back into the data center on a significant scale. Large data center operators face increasing power demands from their business users and applications. If ARM-based high performance computing can simply match the output offered by x86 hardware and still deliver significant power savings, it will draw the attention of hyperscale players. For companies that run thousands of servers, a few percentage points of power use represent millions of dollars in operational expenses.

Keep in mind that the existing K supercomputer features over 700,000 cores (and uses 12 MW of power), while the leading Chinese supercomputer links together more than 10,000,000 cores to achieve its dominant position. At that scale, even a small power saving per core adds up to a significant overall saving. The target set for the Post-K computer is to increase performance 100-fold while limiting the increase in power demand to four- or five-fold over its predecessor.
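The arithmetic behind that target is worth spelling out. The short sketch below uses only the figures quoted above (12 MW, a 100-fold performance increase, a four- or five-fold power increase); the function names are made up for illustration, and the results are a back-of-the-envelope reading of the target rather than published specifications.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in the article.
# Function names are hypothetical and chosen for illustration.

K_POWER_MW = 12.0         # K computer power draw
PERF_MULTIPLE = 100.0     # targeted performance increase
POWER_MULTIPLES = (4.0, 5.0)  # targeted range for the power increase


def efficiency_gain(perf_multiple: float, power_multiple: float) -> float:
    """Improvement in performance per watt implied by the two multiples."""
    return perf_multiple / power_multiple


def projected_power_mw(base_mw: float, power_multiple: float) -> float:
    """Power draw implied by scaling the predecessor's consumption."""
    return base_mw * power_multiple


if __name__ == "__main__":
    for m in POWER_MULTIPLES:
        print(f"{m:.0f}x power: {projected_power_mw(K_POWER_MW, m):.0f} MW, "
              f"{efficiency_gain(PERF_MULTIPLE, m):.0f}x performance per watt")
```

On those numbers, the Post-K system would draw roughly 48 to 60 MW and would need to deliver 20 to 25 times the performance per watt of its predecessor.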

Curiously enough, this would bring ARM into the data center in almost the exact opposite position that was originally envisioned for it. Rather than providing a cheaper operational alternative for low priority workloads, ARM-based systems could emerge as the superheroes of data center performance, managing the demanding workloads that justify the very existence of the data center.

But making these changes to the underlying ARM architecture and designing world-class supercomputers is no easy task. During 2016, Fujitsu announced that the Flagship 2020 Post-K computer could be delayed by as much as two years. The delay is being attributed to issues with the development of the semiconductors.

Dr. Yutaka Ishikawa, project leader for the development of the Post K supercomputer, has been quoted as saying: “We face a number of technical challenges in creating the new system. In its development, we aim for a system that is highly productive and reliable, and which maintains a balance between performance and power consumption. To achieve this, we must develop both hardware and software that enable high application performance with low power consumption.”

No one has ever said that the cutting edge would be easy, but with SoftBank’s $32 billion purchase of ARM, it would seem that Fujitsu is not the only one betting on it for the future of a broad range of computing products.

A version of this article appeared in the December/January issue of DatacenterDynamics magazine.