Designing and building highly resilient data centers is an expensive business, but then so is downtime. Figures from data and IT security research organization Ponemon Institute show that the cost of the average outage increased by 38 percent from around $500,000 in 2010 to more than $700,000 in 2016.

However, the researcher’s ‘Cost of Data Center Outages’ report revealed that the maximum cost of a single outage could be as much as $2.4 million. Some recent outages - at a facility in the airline industry, for example - may have cost even more, with estimates running into the tens of millions.

Money matters

Given the direct financial impact, as well as aftereffects such as reputational damage, there is usually a thorough investigation when an outage does occur. While some companies may opt to keep the matter behind closed doors, others prefer to call in specialist outsiders to manage the process. The benefit of bringing in a third party is that the internal facilities or IT team may not have the resources or skills to conduct an in-depth investigation while also working to restore service after an outage.

There is also the risk in some cases that internal staff may try to deflect blame or obfuscate the causes - especially if human error is a factor. Without rigorous investigation and reporting procedures, the likelihood of a repeat event inevitably increases.

When there is a good indication that an outage was primarily facility-related rather than IT-related, one option is to call in a forensic engineering services team that specializes in mechanical and electrical (M&E) systems. For example, Schneider Electric, like some other large data center technology suppliers, can dispatch a team to a site if the failure is believed to involve its equipment.

“If there is a catastrophic failure then we have a process dedicated to deal with that,” says Steve Carlini, senior director of data center offer management, Schneider Electric. “A bit like car makers that go and look at accidents right after they happen, we have teams that are dispatched to the site in the event of an incident and will start digging into what is going on.”

UK data center engineering services specialist Future-tech provides a similar service but its investigation usually has a wider scope. “We are called into sites where there has been an outage to establish the root-cause and, in many cases, produce a resolution to harden the site, or affected infrastructure, against a similar event,” says James Wilman, Future-tech’s CEO.

Both organizations have seen increasing demand for forensic engineering investigations in the recent past. “Over the last 12 months we have completed five or six of those [investigations] in sites ranging between 1MW and 5MW, although one has been considerably larger than that; a couple have also been very high-profile,” says Wilman.

According to Schneider’s Carlini, as data centers have gotten bigger so has the demand for forensic investigations. In particular, there has been a rise in the number of arc-flash (dangerous electrical discharge) incidents, the investigation of which requires specially trained staff and equipment.

“Data centers are getting much, much larger than in the past,” Carlini says. “In a small building with normal size breakers, the facility manager can simply reset a tripped breaker, for example. When you get very large facilities, you have to bring in the certified people with ‘special suits’.”

Although the ultimate source of an outage may be M&E related, the process of determining the root-cause often starts with the IT equipment.

“The team might start with the actual components in the server or element of IT equipment that have failed,” says Wilman. “They will identify what those components are, the reasons why those components have been affected in the way they have, and then go back up the power chain until they find something that could have caused that event to happen.”
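The upstream walk Wilman describes can be pictured as a simple traversal of the power chain. The sketch below is purely illustrative and is not Future-tech's method: the topology, the component names and the anomaly log are all hypothetical, and a real investigation would weigh physical evidence rather than a lookup table.

```python
# Hypothetical power chain: each component maps to the component that feeds it.
UPSTREAM = {
    "server-42": "rack-pdu-7",
    "rack-pdu-7": "floor-pdu-2",
    "floor-pdu-2": "ups-a",
    "ups-a": "switchgear-1",
    "switchgear-1": "utility-feed",
}

# Hypothetical anomalies recorded around the time of the outage.
ANOMALIES = {
    "ups-a": "battery string fault",
}


def trace_upstream(failed, upstream, anomalies):
    """Walk from the failed IT component toward the utility feed.

    Returns the list of components inspected and the first one that has a
    recorded anomaly (or None if nothing upstream looks suspicious).
    """
    path = []
    seen = set()  # guards against a loop in a badly drawn topology
    current = failed
    while current is not None and current not in seen:
        seen.add(current)
        path.append(current)
        if current in anomalies:
            return path, current
        current = upstream.get(current)
    return path, None


path, suspect = trace_upstream("server-42", UPSTREAM, ANOMALIES)
print(" -> ".join(path))
print("first suspect:", suspect)
```

The first component flagged is only a candidate. Where several faults are involved, investigators would keep walking the chain rather than stop at the first hit.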

There is a wide range of factors that can contribute to, or directly cause, an outage. Issues with the power chain, specifically UPS failures, were the leading cause of data center downtime in 2016, according to the Ponemon report. Human error was the next highest cause, followed by issues with cooling or water systems, weather-related incidents, and generator faults. Finally, IT equipment failures accounted for just four percent of incidents, according to the research.

It’s more than one thing

However, in practice it can be a challenge to separate out one specific cause. The reality is that outages may be down to a cascade effect of various issues.

“For example, a piece of aging equipment develops a fault but this in itself doesn’t cause an outage as the system has redundancy,” says Wilman.

“Then an operative attempts to isolate the faulty equipment but, due to out of date information or a lack of training/knowledge, incorrectly carries out the bypass procedure and this causes further issues with the result of dropping the critical load.”

The outage may also involve multiple pieces of equipment and getting to the ultimate source of the problem may require input from all of the technology suppliers concerned. “Sometimes when the issue is not clear, the customer will ask for representatives from all companies with equipment involved to get together and figure it out,” says Carlini. In this scenario he believes that monitoring tools - such as data center infrastructure management (DCIM) - can be helpful.

“As you can imagine, these meetings can get unwieldy, given the number of people involved. That’s why it is important to have monitoring systems in place so that there is a data trail.”
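To give a rough idea of what such a data trail makes possible, the sketch below merges event records from several suppliers' systems into one timeline. The record layout, device names and timestamps are invented for illustration and do not reflect the export format of any particular DCIM product.

```python
from datetime import datetime

# Hypothetical event records: (ISO timestamp, device, message).
ups_log = [
    ("2016-05-01T02:14:07", "ups-a", "transfer to battery"),
    ("2016-05-01T02:19:30", "ups-a", "battery exhausted, output lost"),
]
cooling_log = [
    ("2016-05-01T02:16:45", "crah-3", "fan speed reduced"),
]
generator_log = [
    ("2016-05-01T02:14:20", "gen-1", "start signal received"),
    ("2016-05-01T02:14:50", "gen-1", "failed to start"),
]


def build_timeline(*logs):
    """Combine any number of event logs into one list ordered by time."""
    events = [event for log in logs for event in log]
    return sorted(events, key=lambda event: datetime.fromisoformat(event[0]))


timeline = build_timeline(ups_log, cooling_log, generator_log)
for timestamp, device, message in timeline:
    print(timestamp, device, message)
```

With every supplier's events on a single clock, the order in which things went wrong is visible before the meeting starts. This assumes the monitored devices have synchronized time sources.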

Having identified the source, or sources, of the outage, the next step is to record the findings in a detailed report along with recommendations on how to avoid a future incident. The whole process can take days, or a matter of weeks, depending on the complexity of the facility and the timeline set by the owner or operator. The resulting report is often a highly sensitive document, especially if human error is to blame. “On occasion the atmosphere can feel a little hostile as staff think we are there to catch them out or find a scapegoat,” says Wilman.

“This is not the case though, as the only agenda is to identify the root-cause and help prevent further outages from happening.”

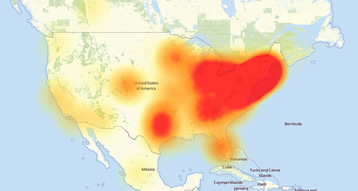

But while apportioning blame may not be a priority in most incidents, it becomes paramount in the case of a deliberate attack. According to the Ponemon report, there was a dramatic increase in the share of outages attributed to deliberate security breaches - including distributed denial of service (DDoS) attacks - from just 2 percent in 2010 to 22 percent in 2016. In this scenario, meticulously tracing the root-cause of an incident could not only help protect against future attacks but also aid law enforcement agencies in identifying the perpetrators.

As long as there is downtime there will continue to be a strong need for forensic engineering services. However, the technology landscape continues to shift. The way that data centers are monitored and managed is evolving. Use of DCIM tools - while not as all-pervasive as some suppliers hoped - is increasing, which in the long term should make it easier for operators to self-diagnose outages. Equipment OEMs are also embedding more intelligence and software into power and cooling equipment to enable proactive and preventative maintenance, which should also help reduce the likelihood of equipment failure.

Fundamental approaches to resiliency are also shifting, with more operators - led by large cloud operators - investing in so-called ‘distributed resiliency’, where software and networks, rather than redundant M&E equipment, take a bigger role in ensuring availability. The performance of an individual UPS, generator or perhaps even an entire site becomes less critical in this scenario.

However, the counterpoint to this trend is that if a service outage were to occur in such a highly distributed system, tracking down the ultimate cause would require some serious detective work.

This article appeared in the October/November issue of DCD Magazine.