Cloud-based data centers have evolved rapidly to support virtualized services and applications, and this has created significant challenges for data center security architectures. Traditional data center security strategies have typically relied on perimeter-based security appliances, while assuming that the interior of the data center could be trusted.

But in today’s multi-tenant environments, tenants and applications cannot be trusted, and there is significant potential for threat injection inside the data center. To properly protect tenants and application workloads in this “Zero-Trust” environment, security functions must be distributed on a per-application or per-VM basis and associated directly with tenants and workloads under fine-grained control (Figure 1).

Securing the workloads, not just the network

Traditional security approaches have involved protecting resources (specific ports, VLANs, and network addresses) at the network level. This could typically be accomplished with simple static rules, but in today’s multi-tenant cloud environments, security policies must be much more tenant- and application-specific, resulting in more complex and fine-grained policy rules. These zero-trust stateful rules may include virtual overlay network identifiers and application-related information, for example.
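As a rough illustration of what a tenant- and application-specific rule might look like, the sketch below matches on a virtual overlay network identifier (VNI) in addition to the usual address, protocol, and port fields. The `Packet` and `Rule` types, the field names, and the first-match evaluation order are assumptions made for this example, not the format of any particular product.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Packet:
    vni: int          # overlay network identifier (hypothetical field name)
    src_ip: str
    dst_ip: str
    proto: str
    dst_port: int


@dataclass(frozen=True)
class Rule:
    action: str                      # "allow" or "deny"
    vni: Optional[int] = None        # None means "any tenant network"
    src_cidr: str = "0.0.0.0/0"
    proto: Optional[str] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        # Every populated field must match; unset fields act as wildcards.
        return (
            (self.vni is None or self.vni == pkt.vni)
            and ip_address(pkt.src_ip) in ip_network(self.src_cidr)
            and (self.proto is None or self.proto == pkt.proto)
            and (self.dst_port is None or self.dst_port == pkt.dst_port)
        )


def evaluate(rules, pkt: Packet, default: str = "deny") -> str:
    # First matching rule wins; anything unmatched falls to the default.
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default


# Tenant on VNI 5001 may reach its web tier over HTTPS from 10.1.0.0/16.
rules = [Rule("allow", vni=5001, src_cidr="10.1.0.0/16", proto="tcp", dst_port=443)]
same_tenant = Packet(5001, "10.1.2.3", "10.1.9.9", "tcp", 443)
other_tenant = Packet(5002, "10.1.2.3", "10.1.9.9", "tcp", 443)
print(evaluate(rules, same_tenant), evaluate(rules, other_tenant))
```

Because the VNI is part of the match, two tenants can reuse the same private address range without one tenant's rule admitting the other's traffic, which a purely address-based static rule cannot express.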

The trend in large- and hyper-scale data centers is to simplify the physical network and distribute networking and security tasks among the thousands of endpoints within it. This strategy enables growth through scale-out, increases flexibility, and makes the network environment more agile. At its core, zero-trust stateful security takes the functions of dedicated network security appliances, virtualizes them in software, and distributes them across the entire server infrastructure. Rather than forcing all traffic through a centralized location in the network, such as a dedicated security appliance, this technique stitches security into the virtual fabric of the network by attaching security functions to every VM instance, without losing the ability to tune policies to a specific VM, group of VMs, tenant, application, or network.

The need for statefulness

When talking about workload- or application-level security, it’s important to be able to apply stateful filtering on a per-connection basis. The key here is to consider the state of a flow in combination with matching on the header fields to determine if a packet should be permitted or denied. Stateful inspection requires every connection passing through the firewall to be tracked so the state information can be used for policy enforcement. A typical implementation would use a connection-tracking table to report flows as NEW, ESTABLISHED, RELATED, or INVALID to the firewall. The policy applied to the system then dictates how packets are evaluated within the stateful firewall. This type of bidirectional connection tracking is critical to adequately protecting many server applications.
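The idea can be sketched with a toy connection-tracking table. This is a minimal illustration under simplifying assumptions, not how Netfilter or OVS implements Conntrack: it keys flows on a 5-tuple, treats a SYN as the only valid way to open a flow, omits the RELATED state and all timeouts, and uses hypothetical class and method names.

```python
from dataclasses import dataclass
from enum import Enum


class CtState(Enum):
    NEW = "NEW"
    ESTABLISHED = "ESTABLISHED"
    INVALID = "INVALID"


@dataclass(frozen=True)
class FiveTuple:
    src_ip: str
    dst_ip: str
    proto: str
    src_port: int
    dst_port: int

    def reversed(self) -> "FiveTuple":
        # The reply direction of the same connection.
        return FiveTuple(self.dst_ip, self.src_ip, self.proto,
                         self.dst_port, self.src_port)


class ConnTracker:
    def __init__(self, allowed_new_ports):
        self.allowed_new_ports = set(allowed_new_ports)
        # Original-direction tuple -> True once a reply has been seen.
        self.table = {}

    def classify(self, pkt: FiveTuple, syn: bool = False) -> CtState:
        if pkt.reversed() in self.table:
            self.table[pkt.reversed()] = True      # reply seen
            return CtState.ESTABLISHED
        if pkt in self.table:
            return CtState.ESTABLISHED if self.table[pkt] else CtState.NEW
        return CtState.NEW if syn else CtState.INVALID

    def permit(self, pkt: FiveTuple, syn: bool = False) -> bool:
        state = self.classify(pkt, syn)
        if state is CtState.ESTABLISHED:
            return True                            # part of a tracked flow
        if state is CtState.NEW and pkt.dst_port in self.allowed_new_ports:
            self.table.setdefault(pkt, False)      # start tracking the flow
            return True
        return False                               # INVALID or disallowed NEW


ct = ConnTracker(allowed_new_ports={443})
client = FiveTuple("10.0.0.5", "10.0.0.9", "tcp", 40000, 443)
print(ct.permit(client, syn=True))       # opens the flow
print(ct.permit(client.reversed()))      # reply is admitted via tracked state
```

The point of the sketch is that the reply packet is admitted because of tracked state, not because a header rule allows traffic to port 40000; a stateless filter would need a broad return-traffic rule to achieve the same effect.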

Agility and elasticity are key

Cloud networking enables us to quickly and easily migrate workloads across servers and data centers to maximize resource utilization, provide resiliency, and support rapid scale-out. This means that security policies that are associated with tenant and application workloads must also be able to migrate seamlessly along with them. In modern SDN data center environments, this implies tight integration of security policy distribution within the control and orchestration infrastructure, such as OpenStack. Figure 2 shows a typical OpenStack deployment with Security Groups that are programmed into the server networking datapath to provide agile security control while working in concert with the overall orchestration and management infrastructure.

The evolution of security support with OpenStack

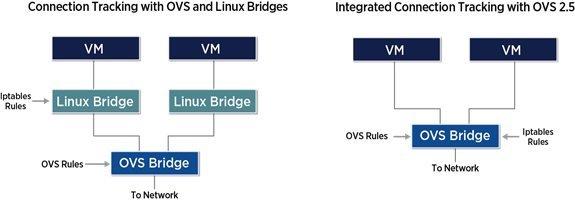

OpenStack has a feature called Security Groups that provides a mechanism to implement stateful security in the form of connection tracking on a per-VM basis. The connection tracking function itself, also referred to as Conntrack, is implemented in the Linux kernel's Netfilter framework and is used by iptables. But most cloud data center deployments use Open vSwitch (OVS) for network overlay processing and other tasks, and combining the two was cumbersome: a separate Linux Ethernet bridge had to be instantiated and connected to the OVS bridge just to gain stateful connection tracking. In addition to the increased configuration complexity, the extra processing steps increased the CPU load, resulting in poor performance. More recently, connection tracking has been integrated into OVS 2.5, eliminating the need for the extra Linux Ethernet bridge and yielding some improvement, as shown in Figure 3.

But even with Conntrack implemented directly in OVS, the Conntrack function itself is still quite CPU-intensive and can significantly degrade server networking datapath performance when enabled. For this reason, Conntrack is an excellent candidate for offload, together with the virtual switching function, to an acceleration device such as an intelligent server adapter (ISA).

Performance and resource impacts of stateful security on servers

In modern data centers, the goal is to achieve efficient scalability by leveraging the computing power of many thousands of servers and breaking data center tasks up into smaller, more manageable workloads that can be flexibly allocated to available resources. This means that a data center’s service capacity will grow at the same pace (linearly) as the server footprint. An often-overlooked assumption with this technique is that most, if not all, of the CPU resources in a given server are available to run application workloads. But deploying security on servers creates a scenario where the security services are competing for the same server resources as the applications, which is counter-productive to the revenue goals of the data center. Some of the specific problems that result from server-based virtual switching with security processing are as follows:

Overlay Network Support and Tunneling: Network tunneling is compute-intensive, requiring lookups on several headers (inner and outer) as well as the insertion of new headers in the case of encapsulation. When implemented on the server CPU, tunneling becomes a significant bottleneck at high network speeds because of limited compute parallelism: the per-packet processing time exceeds the packet inter-arrival time, and the server cannot dedicate enough cores or threads to these network tasks to keep up with the arrival rate.
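To see why a single core struggles at high speed, it helps to compute the time available per packet at line rate. The sketch below assumes standard Ethernet framing, where each frame carries 20 bytes of on-wire overhead (preamble, start-of-frame delimiter, and inter-frame gap). The link speeds, frame sizes, and the 2.5 GHz core clock are illustrative assumptions, not figures from the benchmarks in this article.

```python
# Preamble (7) + start-of-frame delimiter (1) + inter-frame gap (12).
WIRE_OVERHEAD_BYTES = 20


def packet_budget_ns(link_gbps: float, frame_bytes: int) -> float:
    """Time between back-to-back frames at line rate, in nanoseconds."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    # bits / (Gb/s) gives nanoseconds directly.
    return bits_on_wire / link_gbps


def cycles_per_packet(link_gbps: float, frame_bytes: int,
                      cpu_ghz: float = 2.5) -> float:
    """CPU cycles one core can spend per packet before falling behind."""
    return packet_budget_ns(link_gbps, frame_bytes) * cpu_ghz


for gbps in (10, 25, 40):            # assumed link speeds
    for size in (64, 512, 1518):     # assumed frame sizes in bytes
        print(f"{gbps:>2} Gb/s, {size:>4} B: "
              f"{packet_budget_ns(gbps, size):7.1f} ns, "
              f"{cycles_per_packet(gbps, size):7.0f} cycles")
```

At 10 Gb/s with minimum-size frames, the budget works out to 67.2 ns per packet, which is on the order of a single access to main memory. Multiple header lookups plus encapsulation therefore cannot fit in that window on one core unless nearly every access hits the cache.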

Access Control with Tens of Thousands of Rules: Using a host-based ACL or filtering table for access control exposes a major weakness when using a server CPU for networking. Server architectures rely heavily on various levels of CPU caches to achieve their greatest possible performance. The required ACL rule capacity to support tens to hundreds of VMs per server can lead to lookup table sizes that are much larger than the cache sizes in typical servers, leading to a state of continuous cache thrash, which significantly reduces performance.

Stateful Tracking of Millions of Sessions: Keeping state on millions of flows exacerbates the cache-thrash problem even further. Per-connection state tracking is an additional data structure that must be accessed with every packet received, putting greater pressure on caching and memory subsystems. Server CPUs rely on high cache hit rates for networking performance, but stateful firewalling significantly reduces, or eliminates, any effectiveness of an x86 cache.
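A back-of-the-envelope estimate shows how quickly rule and flow tables can outgrow a CPU cache. Every number below is an assumption chosen for illustration (entry sizes, table populations, and last-level cache size); real values depend on the vSwitch implementation and the server model.

```python
MIB = 2 ** 20

# All values are illustrative assumptions, not measurements.
ASSUMED_RULE_ENTRIES = 50_000        # "tens of thousands of rules"
ASSUMED_RULE_BYTES = 64
ASSUMED_FLOW_ENTRIES = 2_000_000     # "millions of sessions"
ASSUMED_FLOW_BYTES = 128
ASSUMED_LAST_LEVEL_CACHE_MIB = 30


def table_mib(entries: int, bytes_per_entry: int) -> float:
    """Approximate table footprint in MiB, ignoring hash-table overhead."""
    return entries * bytes_per_entry / MIB


rules_mib = table_mib(ASSUMED_RULE_ENTRIES, ASSUMED_RULE_BYTES)
flows_mib = table_mib(ASSUMED_FLOW_ENTRIES, ASSUMED_FLOW_BYTES)
working_set_mib = rules_mib + flows_mib
ratio = working_set_mib / ASSUMED_LAST_LEVEL_CACHE_MIB

print(f"rule table:  {rules_mib:8.1f} MiB")
print(f"flow table:  {flows_mib:8.1f} MiB")
print(f"working set: {working_set_mib:8.1f} MiB "
      f"({ratio:.1f}x the assumed cache)")
```

Under these assumptions the combined working set is several times larger than the cache, and that cache is also shared with the application workloads, so most per-packet lookups would be expected to go to main memory.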

Advanced Security Services: Lastly, some administrators may require security spanning the entire stack (up to L7) and therefore need application-level inspection. These services may include intrusion detection (IDS), intrusion prevention (IPS), anti-virus (AV), data loss prevention (DLP), malware detection, application firewall, user identification, and other services. In a virtual environment, these services are deployed within VMs, and traffic is plumbed into and out of each service through a host-based vSwitch, further increasing the networking and security load on the CPU.

Using Intelligent Server Adapters to accelerate Zero-Trust stateful security with OpenStack

When considering security posture and data center scale-out, Zero-Trust stateful security is an effective strategy in theory. But as we have noted, it imposes severe penalties on each server in the form of bottlenecks and increased resource consumption, leaving few resources for revenue-generating VMs and erasing many of the benefits of cloud-scale networking.

Intelligent server adapters (ISAs) running firewall software are designed to accelerate server-based networking in environments requiring stateful security. ISAs fully offload the overlay tunneling and match/action processing of Open vSwitch (OVS), as well as stateful security processing via Conntrack, while supporting extremely high rule and flow counts.

There is a two-fold benefit to the server when offloading the security workload to ISAs:

- The networking and security I/O bottleneck is eliminated, allowing VMs or containers to receive as much data as they can process.

- Significant CPU savings can be realized by executing the OVS match-action processing and stateful security workloads on the ISA instead of on server CPU cores, leaving the x86 CPUs free for application workloads.

By offloading the vSwitch and its associated security policies, up to 80% of the compute node CPU resources can be reclaimed and used for VM deployments while eliminating the networking bottlenecks. This “scale-up” of servers effectively doubles or triples VM capacity, which has an even greater effect on the revenue generated per server.

The ISA is a drop-in accelerator for OVS with seamless integration with existing network tools and controllers and orchestration software such as OpenStack. Use cases for the accelerated firewall software include public and private cloud compute nodes, telco and service provider NFV, and enterprise data centers. In these use cases, it is common to have a large number of network overlays and/or security policies that are enforced on the server with potentially several thousands of policies per VM. ISAs provide the ability to support very high flow and security policy capacities without degradation in performance.

Benchmarks

As noted, native OVS and Conntrack running on servers without acceleration struggle with packet processing. This ties up valuable server CPU resources and creates a bottleneck that starves applications. Netronome benchmarks have shown that Netronome’s Agilio ISAs can reclaim the server CPU resources previously dedicated to OVS and security, returning 50% of the overall compute node CPU capacity while delivering 4X or more packet data throughput to application workloads.

The following benchmarks compare throughput and server CPU utilization across various packet sizes for a native software-based implementation of OVS 2.5 with Conntrack security enabled and for an ISA-based implementation, each with 10K security policies. The server hardware was a dual-socket Xeon system with a total of 24 physical CPU cores. The results are shown in Figure 4.

In terms of throughput, at an average packet size of 512 bytes, a purely software-based OVS with Conntrack solution can only process about 6 Gb/s. With ISA acceleration, this number reaches up to 24 Gb/s, a 4X improvement in packet delivery to target workloads. At the same time, about 12 of the 24 server CPU cores were completely freed from OVS and security processing and are now available to run more applications.
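The headline figures follow directly from these readings, as the short calculation below shows. The input values are the approximate numbers quoted in the text above; the function names are only for illustration.

```python
def speedup(accelerated_gbps: float, software_gbps: float) -> float:
    """Throughput gain of the accelerated datapath over software."""
    return accelerated_gbps / software_gbps


def reclaimed_fraction(cores_freed: int, total_cores: int) -> float:
    """Share of the server's cores returned to application workloads."""
    return cores_freed / total_cores


# Approximate readings at an average packet size of 512 bytes.
print(f"throughput gain: {speedup(24, 6):.1f}x")
print(f"CPU reclaimed:   {reclaimed_fraction(12, 24):.0%}")
```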

Conclusion

ISAs have proven well suited to offloading stateful security with OpenStack. By offloading isolation, access control, and stateful firewalling via Conntrack, ISAs eliminate networking bottlenecks in compute nodes, offering up to 4X the Conntrack performance at high rule and flow capacities while restoring up to 50% of the CPU cycles, which can be repurposed for VM and container deployments and applications.

Daniel Proch is vice president of Solutions Architecture at Netronome.