Data center operators are no strangers to liquid cooling. For years they have been plumbing water-cooled CRAC and CRAH units into their facilities to keep up with the waste heat generated by IT loads. But as IT load density has continued to increase, and servers have become more powerful, with faster CPUs and more memory, cooling capacity is in an ongoing battle to keep up with the data center’s ability to generate heat.

Cutting-edge data centers can take one of three paths regarding liquids in their facilities. They can try to eliminate water cooling, making as much use as possible of free air and related cooling technologies that depend on environmental factors to maintain temperatures in the data center. They can look at alternative ways to supply and cool the water they use, with technologies such as groundwater and seawater cooling. Or they can fully embrace the liquid world and opt for technologies such as direct-to-chip liquid cooling and immersion cooling.

Cooling for components

Asetek is a major driving force behind using liquid for primary cooling of specific server components. It gained extensive experience in building cooling systems for high-end workstations and gaming systems, and is now applying this to data centers. The technology has been used reliably for years in standalone servers and workstations, and even in some of the fastest supercomputers in the world, but Asetek is looking to make the technology easily accessible to data center operators on the scale that today’s high-efficiency data center requires.

The Asetek RackCDU D2C technology provides liquid-cooled heat sinks for CPUs, GPUs and memory controllers in the servers in your data center. The technology uses a liquid-to-liquid heat exchanger system; there is never any actual contact between the liquid being pumped through the facility and the liquid circulating within the servers.

The RackCDU is a zero-U, 10.5-inch rack extension that contains space for three additional PDUs, the cooling distribution unit (CDU), and the connections for the direct-to-chip cooling units, which directly replace the standard heat sinks found in servers. Each cooling unit contains a fluid pump and a cold plate that rests on the CPU in the same way a standard heat sink does. The water being pumped to the CDU can be as warm as 105°F (40°C) and can come from dry coolers or cooling towers. The heated water circulated away from the servers can be used to provide heat for the facility.
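To get a feel for what warm-water cooling asks of the facility loop, the flow a rack needs follows from the standard heat-balance relation Q = m × c_p × ΔT, where m is the mass flow rate. The sketch below is only an illustration, not an Asetek sizing method: the 20kW rack load and the 10°C rise in water temperature are hypothetical inputs, and the water properties are approximate textbook values.

```python
# Rough facility-water flow estimate for a liquid-cooled rack.
# All inputs are illustrative assumptions, not Asetek specifications.

WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate
WATER_DENSITY = 992.0         # kg/m^3 near 40 C, approximate


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def required_flow_kg_per_s(heat_load_w: float, delta_t_k: float) -> float:
    """Mass flow of water needed to carry heat_load_w at a rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (WATER_SPECIFIC_HEAT * delta_t_k)


def kg_per_s_to_litres_per_min(flow_kg_per_s: float) -> float:
    """Convert a water mass flow to a volume flow in litres per minute."""
    cubic_metres_per_s = flow_kg_per_s / WATER_DENSITY
    return cubic_metres_per_s * 1000.0 * 60.0


if __name__ == "__main__":
    supply_c = fahrenheit_to_celsius(105.0)        # supply temperature from the text
    flow = required_flow_kg_per_s(20_000.0, 10.0)  # hypothetical 20kW rack, 10 C rise
    print(f"Supply water: {supply_c:.1f} C")
    print(f"Flow needed: {flow:.2f} kg/s "
          f"({kg_per_s_to_litres_per_min(flow):.0f} L/min)")
```

Under these assumptions the rack needs a little under half a kilogram of water per second. A larger allowable temperature rise lowers the required flow proportionally.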

Fitting new heat sinks

Existing racks and servers can be retrofitted with the Asetek equipment. The coolers connect to the overall cooling system through dripless quick connects that sit outside the physical server. Asetek’s website shows a video of Cisco rack servers having their existing heat sinks replaced with the company’s CPU/GPU cooling equipment, to demonstrate how little time such a conversion takes. Of course, multiplying this example by dozens or hundreds of servers shows that the upgrade is not a trivial task. Designing the technology in from the start, and making use of the Asetek racks, would simplify the process.

So far the primary adopters of this technology have been specialized projects in high-performance computing and government agencies, and most are still doing side-by-side comparison programs to determine the effectiveness of this cooling solution. Asetek claims the RackCDU D2C system can reduce data center cooling costs by more than 50 percent while potentially allowing data center IT load density to quintuple. Overall efficiency improvements come from improved cooling, higher densities, waste heat reuse and reduced cost to provide cooled air/water/fluids.

Circulated coolant

For those for whom cooling just the processors and memory is only a start, there is immersion cooling. With this technology, the entire server (or storage blade) is submerged in a dielectric fluid, which is circulated to carry heat from the IT equipment to an external cooler that can be as simple as a basic radiator. While the most basic dielectric fluid used with this technology is mineral oil, 3M has a range of engineered fluids, marketed under the name Novec, specifically for this application.
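One way to see why a circulated liquid can carry so much more heat than air is to compare how much heat a cubic meter of each absorbs per degree of temperature rise. The sketch below uses approximate, textbook-style property values. The mineral oil figures in particular are an assumed typical value and vary by product, and engineered fluids such as Novec are not included.

```python
# Compare volumetric heat capacity (density * specific heat) of coolants.
# Property values are rough room-temperature approximations for illustration.

FLUIDS = {
    "air": {"density": 1.2, "specific_heat": 1005.0},
    "mineral oil": {"density": 850.0, "specific_heat": 1900.0},  # assumed typical
    "water": {"density": 1000.0, "specific_heat": 4186.0},
}


def volumetric_heat_capacity(name: str) -> float:
    """Heat absorbed per cubic meter per kelvin, in J/(m^3*K)."""
    props = FLUIDS[name]
    return props["density"] * props["specific_heat"]


def ratio_to_air(name: str) -> float:
    """How many times more heat a volume of this fluid holds than air."""
    return volumetric_heat_capacity(name) / volumetric_heat_capacity("air")


if __name__ == "__main__":
    for fluid in FLUIDS:
        print(f"{fluid:12s} {volumetric_heat_capacity(fluid):12.0f} J/(m^3*K) "
              f"= {ratio_to_air(fluid):7.0f} x air")
```

With these assumed values, a given volume of mineral oil holds on the order of a thousand times more heat per degree than the same volume of air, and water holds several thousand times more.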

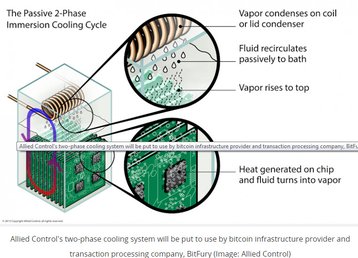

The best-known vendors in the total immersion business are Green Revolution Cooling (GRC) and Allied Control, with a start-up called LiquidCool Solutions making inroads, and Iceotope establishing itself in the UK. Hong Kong-based Allied Control was purchased earlier this year by Bitcoin mining infrastructure provider BitFury, which wants a more energy-efficient infrastructure and a route into the supercomputer cooling business.

LiquidCool and Iceotope take the approach of cooling each blade separately, using dielectric fluid within a sealed case. In the LiquidCool system, the coolant circulates outside the rack, while in the Iceotope system the dielectric fluid stays sealed inside each blade and a secondary water-cooling circuit removes the heat.

Immersion in a tank

Meanwhile, GRC and Allied Control both immerse the IT equipment in a tank of fluid, although, again, their approaches are different. With Allied Control’s passive two-phase immersion-cooling cycle, heat from the server chips boils the fluid, and the vapor condenses on a coil and falls back into the tank, so the fluid circulates without pumps. With the GRC total-immersion system, the dielectric fluid is mechanically circulated. Allied also uses a modular system, available in its custom Data Tank and in a flat rack.

The simpler GRC system uses normal-size racks mounted horizontally, so the individual servers sit vertically within the fluid. This allows for the use of standard servers but requires more floor space than vertically mounted racks.

With the GRC CarnotJet, the total immersion of the server rack allows the entire body of liquid to act as a heat sink. The liquid is pumped through a heat exchanger filter system, which uses external water cooling to cool the GreenDEF dielectric fluid used in the CarnotJet rack. GRC also uses a proprietary system to encapsulate hard drives, allowing them to be fully submerged in the cooling medium.

Both approaches claim to reduce cooling power consumption by 90 percent or more, and to reduce overall server energy consumption as well. LiquidCool models the total power needed to deliver 250kW of compute power. By this metric, the company claims its immersion solution requires only 320kW, compared with 550kW for standard cooling technologies. In this model, eliminating the server fans alone cuts power requirements by 83kW.

Supercomputers only?

The earliest adopters of liquid-immersion technology have been in high-performance computing, where heat generation is a major issue. And while the GRC solution can be used with existing servers appropriately modified for immersion, all these solutions work best when applied from the ground up, as a fundamental part of a data center or data center hall design.

Liquid cooling is not for everyone, but it could easily find a home in large-scale special projects. Selecting these technologies to cool an entire data center is likely too big a step for almost any data center operator, but applying them where they can be most effective should be on any efficient operator’s list of potential point solutions.