Not a week goes by without a new data center opening somewhere, or a large hosting provider expanding its existing facilities. Recent research from iXConsulting backs up that trend. Its 14th Data Center Survey polled companies collectively controlling around 25 million square feet of data center space in Europe, including owners, operators, developers, investors, consultants, design and build specialists, large corporates, telcos, systems integrators, colocation companies and cloud service providers.

All expressed a desire and intention to build out their current data center footprint, both in-house and through third parties, with 60 percent saying they would increase in-house capacity in 2017 and 38 percent in 2018. Over a third (35 percent) said they would expand their third-party hosting capacity by 2019.

More than any other part of the market, it is the hyperscale cloud service providers that currently appear to be driving that expansion. Canalys suggests that the big four cloud players on their own - Amazon Web Services (AWS), Google, IBM and Microsoft - represented 55 percent of the cloud infrastructure services market (including IaaS and PaaS) by value in the second quarter of 2017, a market worth US$14bn in total and growing 47 percent year on year.

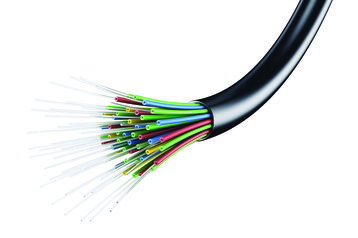

As data centers grow, so do cabling systems

Irrespective of the size of the hosting facilities being owned and maintained, the unrelenting growth in the volume of data and virtualized workloads being stored, processed and transmitted as those data centers expand will put significant strain on the underlying data center infrastructure. And that is especially true for internal networks and underlying cabling systems that face an acute lack of bandwidth and capacity for future expansion with current technology and architectural approaches.

In each individual data center the choice of cabling will depend on a number of different factors beyond just capacity, including compatibility with existing wiring, transmission distances, space restrictions and budget.

Unshielded (UTP) and shielded (STP) twisted pair copper cabling has been widely deployed in data centers over the past 40 years, and many owners and operators will remain reluctant to completely scrap existing investments.

As well as being cheaper to buy, copper cabling has relatively low deployment costs because there is no need to buy additional hardware, and it can be terminated quickly and simply by engineers on site.

Fiber needs additional transceivers to connect to switches, and also requires specialist termination. By contrast, copper cables use the same RJ-45 interfaces and are backwards compatible with previous copper cabling specifications, which simplifies installation and allows gradual migration over a longer period of time. Standards for copper cabling have evolved to ensure this continuity (see box: copper standards evolve).

Data center networks that currently rely on a combination of 1Gbps and/or 10Gbps connections at the server, switch and top of rack layers are likely to see 25/40Gbps as the next logical upgrade. But in order to avoid bottlenecks in the aggregation and backbone layers, they will also need to consider the best approach to boosting capacity elsewhere, particularly over longer distances, which copper cables (even Cat8) are ill equipped to support.

Many data center operators and hosting companies have plans to deploy networks able to support data rates of 100Gbps and beyond in the aggregation and core layers, for example.

That capacity will have to cope with the internal data transmission requirements created by the hundreds of thousands, or even millions, of VMs expected to run on data center servers in 2018/2019. Most of those operators are also actively seeking solutions that will lay the basis for migration to 400Gbps in the future.
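To see why faster server connections push the bottleneck upstream, it helps to compare the capacity facing the servers with the capacity of the uplinks. The sketch below does that for a hypothetical top of rack switch; the port counts and the function name `oversubscription_ratio` are illustrative assumptions rather than figures from any particular deployment.

```python
def oversubscription_ratio(server_ports: int, server_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Ratio of total server-facing capacity to total uplink capacity."""
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("at least one uplink with positive capacity is required")
    downlink = server_ports * server_gbps   # capacity towards the servers
    uplink = uplinks * uplink_gbps          # capacity towards aggregation
    return downlink / uplink


# Hypothetical switch: 48 server ports and 4 uplinks (assumed values).
before = oversubscription_ratio(48, 10, 4, 40)     # 10Gbps servers, 40Gbps uplinks
after = oversubscription_ratio(48, 25, 4, 40)      # servers upgraded, uplinks unchanged
upgraded = oversubscription_ratio(48, 25, 4, 100)  # uplinks upgraded as well

print(before, after, upgraded)  # 3.0 7.5 3.0
```

With these assumed numbers, moving servers from 10Gbps to 25Gbps without touching the 40Gbps uplinks raises the ratio from 3:1 to 7.5:1, and 100Gbps uplinks bring it back to 3:1.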

Where that sort of bandwidth over longer cable runs is required, the only realistic choice is fiber - either multimode fiber (MMF) or single mode fiber (SMF). MMF is cheaper, but supports lower bandwidths and shorter cable runs. It was first deployed in telecommunications networks in the early 1980s and quickly advanced into enterprise local and wide area (LAN/WAN) networks, storage area networks (SANs) and backbone links within server farms and data centers that required more capacity than copper cabling could support.

Meanwhile, telecoms networks moved on to single mode fiber, which is more expensive but allows greater throughput and longer distances. Most in-building fiber is still multi-mode, and the network industry has made a series of enhancements to the fiber standards in order to maximize the data capacity of those installations (see box: making multi-mode do more).

The road to single mode fiber

As data centers have continued to expand, however, the distance limitations of current MMF specifications have proved restrictive for some companies. This is particularly true for hyperscale cloud service providers and those storing massive volumes of data, such as Facebook, Microsoft and Google, which have constructed large campus facilities spanning multiple kilometers. Social media giant Facebook, for example, runs several large data centers across the globe, each of which links hundreds of thousands of servers together in a single virtual fabric spanning one site. The same is true for Microsoft, Google and other cloud service providers for whom east to west network traffic requirements (i.e. traffic between different servers in the same data center) are particularly high.

What these companies ideally wanted was single-mode fiber in a form that was compatible with the needs and budget of data centers: a 100Gbps fiber cabling specification with a single-mode interface that was cost competitive with existing multi-mode alternatives, had minimal fiber optic signal loss and supported transmission distances of between 500m and 2km. Four possible specifications were created by different groups of network vendors. Facebook backed the 100G specification from the CWDM4-MSA, which was submitted to and adopted by the Open Compute Project (OCP), the open hardware initiative that Facebook launched in 2011.

Facebook shifted to single-mode because it designed and built its own proprietary data center fabric, and was hitting significant limitations with existing cabling solutions. Its engineers calculated that to reach 100m at 100Gbps using standard optical transceivers and multi-mode fiber, it would have to re-cable with OM4 MMF. This was workable inside smaller data centers but gave no flexibility for longer link lengths in larger facilities, and it wasn’t future-proof: there was no likelihood of bandwidth upgrades beyond 100Gbps.

Whilst Facebook wanted fiber cabling that would last the lifetime of the data center itself, and support multiple interconnect technology lifecycles, available single-mode transceivers supporting link lengths of over 10km were overkill. They provided unnecessary reach and were too expensive for its purposes.

So Facebook modified the 100G-CWDM4 MSA specification to its own needs for reach and throughput. It also decreased the temperature range, as the data center environment is more controlled than the outdoor or underground environments met by telecoms fiber.

It also set more suitable expectations for service life for cables installed within easy reach of engineers.
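The gap Facebook faced can be illustrated with a simple lookup of link options by data rate and reach. This is a rough sketch only: the reach values are nominal figures commonly quoted for each interface type (the Cat6A and Cat8 entries in particular are assumptions not taken from this article), the names `LinkOption` and `candidates` are hypothetical, and sorting by reach is only a crude stand-in for cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkOption:
    name: str
    medium: str       # "copper", "MMF" or "SMF"
    rate_gbps: int
    max_reach_m: int


# Nominal reach figures, assumed for illustration only.
OPTIONS = [
    LinkOption("10GBASE-T over Cat6A", "copper", 10, 100),
    LinkOption("40GBASE-T over Cat8", "copper", 40, 30),
    LinkOption("100GBASE-SR4 over OM4", "MMF", 100, 100),
    LinkOption("100G CWDM4 over duplex SMF", "SMF", 100, 2000),
    LinkOption("100GBASE-LR4 over SMF", "SMF", 100, 10000),
]


def candidates(rate_gbps: int, distance_m: int) -> list:
    """Return options that meet the rate and reach, shortest reach first."""
    fits = [o for o in OPTIONS
            if o.rate_gbps >= rate_gbps and o.max_reach_m >= distance_m]
    # Shortest adequate reach first, as a rough proxy for lowest cost.
    return sorted(fits, key=lambda o: o.max_reach_m)


for option in candidates(100, 500):
    print(option.name, option.max_reach_m)
```

For a 500m link at 100Gbps, the multi-mode entry drops out and the 2km single-mode option is listed ahead of the 10km one, which is the middle ground between short-reach multi-mode and long-haul single-mode that Facebook was looking for.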

The OCP now has almost 200 members including Apple, Intel and Rackspace. Facebook also continues to work with Equinix, Google, Microsoft and Verizon to align efforts around an optical interconnect standard using duplex SMF, and has released the CWDM4-OCP specification which builds on the effort of CWDM4-MSA and is available to download from the OCP website.

The arrival of better multi-mode fiber (OM5 MMF) and the lower-cost single-mode fiber being pushed by Facebook could change the game significantly, and prompt some large scale providers to go all-fiber within their hosting facilities, especially where they can use their buying power to drive the cost of transceivers down.

Better together

In reality, few data centers are likely to rely exclusively on either copper or fiber cabling – the optimal solution for most will inevitably continue to rely on a mix of the two in different parts of the network infrastructure for the foreseeable future.

The use of fiber media converters adds a degree of flexibility too, interconnecting different cabling formats and extending the reach of copper-based Ethernet equipment over SMF/MMF links spanning much longer distances.

So while future upgrades to the existing Cat6/7 estate will involve Cat8 cabling, whose 25/40Gbps data rates will handle increased capacity requirements over short reach connections at the server, switch and top of rack level for some years to come, data center operators can then aggregate that traffic over much larger capacity MMF/SMF fiber backbones for core interconnect and cross campus links.

This article appeared in the December/January issue of DCD Magazine.